
Project: Rackmount Overkill

- Thread starter Automata


I heard that the expander does this, for some reason. Haven't checked on mine.

Hmmm, I'll have to decide how to relabel them then. I need to be positive which drive has died before I yank it out of the enclosure, lol.

That Norco RPC470, you have at least one, right? Do you have the same problem I have, where you usually have to pull the drive above or below halfway out before the one you actually want will come out? I absolutely abhor this case at this point, especially since the bottom drive bays are useless if you have a full-size server motherboard.

- Joined

- May 15, 2006

- Thread Starter

- #803

- Joined

- Mar 13, 2006


I have a 470 and replaced both carriers with hotswap bays.


How much shorter would they be than the standard carriers with drives installed? If you have a full-size board there's about 1/8" of clearance, making those bottom drive bays useless.

I mean, this limits me from 15 to 13 2 TB drives, which isn't a real problem for me at this point... just saying.

- Joined

- Mar 13, 2006


I have the fan array in the stock location on that 470, and can cram my hand in between enough to plug and unplug cables as needed.

- Joined

- May 15, 2006

- Thread Starter

- #808

Got the replacement Hitachi drive back, same model as the old ones. Glad they didn't decide to be nice and upgrade it!

The array is expanding to the additional drive now, and holy, is it slow. It's going to take a total of 30 hours to go from 6 drives to 7 (1 TB each).
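To put "holy, is it slow" into numbers, here is a rough back-of-envelope sketch, assuming decimal units and that the 30-hour figure covers the whole job. The post doesn't say which RAID level the Areca array uses or how its firmware migrates data, so the two figures are only rough brackets, not measurements: one counts just the 1 TB of new capacity, the other assumes all 6 TB on the original drives gets read and rewritten. `rate_mb_s` is a hypothetical helper.

```shell
#!/bin/sh
# Back-of-envelope average throughput for the 30-hour expansion.
# Assumes decimal units: 1 TB = 10^6 MB, and 1 hour = 3600 seconds.

# Hypothetical helper: average MB/s needed to move $1 TB in $2 hours.
rate_mb_s() {
    awk -v tb="$1" -v h="$2" 'BEGIN { printf "%.1f\n", tb * 1000000 / (h * 3600) }'
}

# Counting only the 1 TB of capacity added by the new drive.
echo "new drive only: $(rate_mb_s 1 30) MB/s"
# If the controller reads and rewrites all 6 TB on the original drives.
echo "full restripe:  $(rate_mb_s 6 30) MB/s"
```

That works out to roughly 9.3 MB/s and 55.6 MB/s respectively.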


I swear my Linux software RAID rebuilds faster than this Areca hardware RAID.

- Joined

- May 15, 2006

- Thread Starter

- #810

I found an incredibly annoying issue with ext4 on CentOS 5.6. You can't resize an ext4 partition.

Seriously.

"parted" does not work with ext4 partitions. "fdisk" doesn't work with large (over 2 TiB) partitions.

I saw a suggestion to use "gparted", and it worked up until it issued an "e2fsck" command. On CentOS, they haven't merged the ext4 tools into the older e2fsck utility. Instead, the ext4-aware version is a separate utility called "e4fsck", and it works perfectly fine. The problem is that gparted doesn't know about it, and I don't see a way to tell it otherwise. So, no tools are available to resize ext4 partitions, and I'm sitting 1TB short in my RAID array. Great!

With some quick thinking, I simply renamed the existing e2fsck utility and created a soft link to the new one. This allowed me to trick gparted into running e4fsck.

```shell
mv /sbin/e2fsck /sbin/e2fsck.bak    # Rename/move the existing e2fsck program
chmod -x /sbin/e2fsck.bak           # Don't allow it to execute, just in case
ln -s /sbin/e4fsck /sbin/e2fsck     # Create a link to the new utility
gparted /dev/sda                    # Run gparted on the new disk; it will now get past the check
```

gparted will still error out, stating that "resize2fs" cannot resize the file system (urg!), but it has already resized the partition itself, which is all we need. Then simply resize the file system with resize4fs:

```
[root@thideras-server ~]# resize4fs /dev/sda1
resize4fs 1.41.12 (17-May-2010)
Resizing the filesystem on /dev/sda1 to 1219480088 (4k) blocks.
The filesystem on /dev/sda1 is now 1219480088 blocks long.
```

That is how you resize an ext4 partition on CentOS.
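The rename-and-link trick leaves /sbin/e2fsck pointing at e4fsck until someone undoes it by hand. Below is a minimal sketch, not the method from the post, of a wrapper that performs the same three steps, runs one command, and puts the original e2fsck back when it exits. It assumes bash and the e4fsck binary living in /sbin as described above; the script name `e2fsck-shim.sh`, the `SBIN_DIR` override and the `restore`/`main` functions are hypothetical, added so the swap can be tried on a scratch directory first.

```shell
#!/bin/bash
# Hypothetical wrapper (e2fsck-shim.sh): temporarily point e2fsck at e4fsck,
# run one command, then put the original e2fsck back when the shell exits.
set -eu

# Assumption: defaults to /sbin as in the post; override to test on a scratch dir.
SBIN_DIR="${SBIN_DIR:-/sbin}"

restore() {
    # Undo the swap only if our backup copy exists.
    if [ -e "$SBIN_DIR/e2fsck.bak" ]; then
        # Drop the symlink (if it was created) before moving the original back.
        if [ -L "$SBIN_DIR/e2fsck" ]; then
            rm "$SBIN_DIR/e2fsck"
        fi
        chmod +x "$SBIN_DIR/e2fsck.bak"
        mv "$SBIN_DIR/e2fsck.bak" "$SBIN_DIR/e2fsck"
    fi
}

main() {
    # Refuse to start if e4fsck is missing or a previous swap was never undone.
    [ -x "$SBIN_DIR/e4fsck" ] || { echo "missing $SBIN_DIR/e4fsck" >&2; return 1; }
    [ ! -e "$SBIN_DIR/e2fsck.bak" ] || { echo "$SBIN_DIR/e2fsck.bak already exists" >&2; return 1; }
    [ ! -L "$SBIN_DIR/e2fsck" ] || { echo "$SBIN_DIR/e2fsck is already a symlink" >&2; return 1; }

    # Same three steps as the post: rename, make non-executable, link.
    mv "$SBIN_DIR/e2fsck" "$SBIN_DIR/e2fsck.bak"
    trap restore EXIT   # from here on, restore runs when this shell exits (including via set -e)
    chmod -x "$SBIN_DIR/e2fsck.bak"
    ln -s "$SBIN_DIR/e4fsck" "$SBIN_DIR/e2fsck"

    # Run the wrapped command (e.g. gparted) with e2fsck now resolving to e4fsck.
    "$@"
}

if [ "$#" -gt 0 ]; then
    main "$@"
else
    echo "usage: $0 command [args...]" >&2
fi
```

Usage would look like `./e2fsck-shim.sh gparted /dev/sda`, run as root. If the shell is killed outright (for example with SIGKILL), the trap never runs and the swap stays in place; the guard checks will then refuse to run again until /sbin is fixed by hand.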


- Joined

- May 15, 2006

- Thread Starter

- #811

Just a software update this time. First, got the printer back up and running. I didn't configure it after the SSD died.

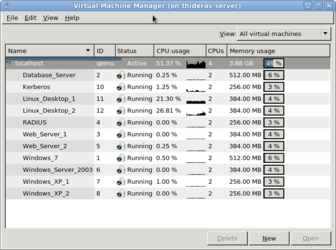

Been working on my VMs and am going to start putting them to work tonight. You can see a lot more VMs than I had with VMware. The two web servers, the database server and the soon-to-be reverse proxy are all related. The Windows XP and 2k3 ones are in a domain setup. The Windows 7 VM is for testing our software in an environment that we don't care about. The RADIUS server will be just that, a RADIUS server. The Kerberos server and "Linux_Desktop" entries are going to be for a test Kerberos environment; I've never even seen one.

With every last VM running, it uses 49% of my 8 GiB of RAM, so I have some room left. I could use it for another cluster (one that I'd actually figure out how to use), SCSI hosts, etc. With the release of Bulldozer, I hope to be able to upgrade my server so that I can add even more VMs!


- Joined

- Jun 8, 2011

- Location

- Falls Church, VA

Very nice setup, man. New member here, and this is my first post so I can follow the thread. I am really interested in trying out Astaro now.


I am creating a thread with my setup and future build as well.


- Joined

- Jun 12, 2011

- Location

- South Dakota

Oh Mr. Thideras. You have come a long way since I saw you tinkering with your first server/footrest under your desk that you would beat with an angry fist when it shut off randomly!

- Joined

- May 15, 2006

- Thread Starter

- #814

Just bought two Dell PowerEdge 2650 servers. These have a 2U chassis with two Xeon (single-core, Pentium 4 era) processors. I believe these use 3.5" 10k RPM SCSI drives with a PERC 3. I'll have more pictures when they arrive. Here is a quick breakdown:


Server #1:

- Two 2.2 GHz Xeon CPUs
- Five 32 GB 10,000 RPM SCSI HDDs
- 2 GB RAM
- RAID controller

Server #2:

- Two 2.4 GHz Xeon CPUs
- Four 73 GB 10,000 RPM SCSI HDDs
- 1 GB RAM
- RAID controller

This is not my image.

Just need to find two sets of rails.

- Joined

- Jun 8, 2011

- Location

- Falls Church, VA


I use these for my 2850s. The rapid rails are real nice, and I also have the cable management arm attached to them.

http://cgi.ebay.com/Dell-PowerEdge-...047?pt=LH_DefaultDomain_0&hash=item1e651f686f

Here are the arms.

http://cgi.ebay.com/DELL-POWEREDGE-...323?pt=LH_DefaultDomain_0&hash=item53e5fe71db

- Joined

- May 15, 2006

- Thread Starter

- #816

Thanks for the link. I'll have to see if these fit my old Pentium 3-based Dell as well.

- Joined

- May 15, 2006

- Thread Starter

- #817

Servers have arrived. I'll have some pictures later when I get to testing them. I have to DBAN the disks since they weren't erased!

Looking at the mount points on the server for rails, it looks like my other Dell server matches up perfectly. I should be able to rackmount all three servers!


- Joined

- May 15, 2006

- Thread Starter

- #820

Well, one server hates me.

So, I tore everything apart and reseated anything that could be reseated. Upon removing the PCI-X expansion tray, I noticed an odd pattern in the pins: they were bent and shorting to adjacent pins. I tried straightening them, but they were bent far too much to recover.

After removing the broken pins and reseating everything that I possibly could, I put it back together and fired it up. I'm waiting on the rebuild now, roughly 10% through.

