So I was thinking about this...

On dual-chip video cards (590, 690, 7990, etc.), the VRAM is split between the two GPUs; that is, a 4GB 690 is effectively only 2GB, because each GPU needs its own VRAM.

So why doesn't my dual Xeon work this way? There is 12GB in each processor's memory bank, totaling 24GB, and all 24GB is usable. Why is a CPU able to access its sister CPU's RAM, but a GPU is not?

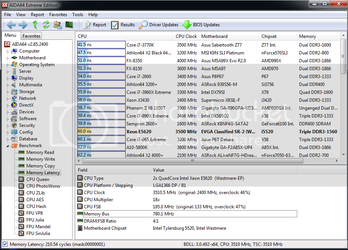
