I'm trying to wrap my head around all the different network setups I could use with my ESXi box, and I'm still confused about a lot of it, mainly because all the information I find is written for an enterprise environment and not a home LAN.

What I'm trying to do is set up my ESXi box to make the best use of the 4-port Intel NIC. I have 2 more ports on the motherboard that will be used as the WAN port for my firewall and the ESXi management port, so that traffic is covered.

I want to use the 4 ports on the Intel card together so that ESXi and my Dell 5224 managed switch can spread traffic across the NICs in a more balanced manner, so that no single NIC port is saturated and holding up the rest of the network/PCs. I also want to set up a proper virtual network for the NFS and iSCSI targets that will be used by ESXi and various VMs on the same box.

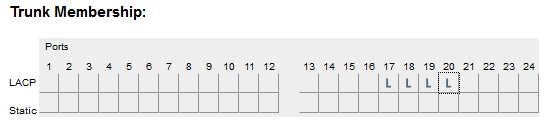

So far, all I understand about the NIC teaming part is that I will need to set up a vSwitch in ESXi with the 4 NIC ports bonded to it, using the IP Hash setting. On the Dell 5224 managed switch, I have to set the specific ports I plan to connect the 4-port NIC to in Gigabit mode, and here is where I get confused. I have not found anything specific to the Dell 5224, but from what I have seen, I need to enable trunking, or 802.3ad, or both, along with possibly some other settings that may or may not be needed.
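To show how I currently picture the IP Hash setting, here is a rough sketch. It assumes the uplink is chosen by XOR-ing the source and destination IPv4 addresses and taking the result modulo the number of NICs in the team. That is my reading of the descriptions I've found, not something I've confirmed for my ESXi version, and `pick_uplink` is just a name I made up for illustration.

```python
import ipaddress


def pick_uplink(src_ip: str, dst_ip: str, uplink_count: int) -> int:
    """Return the index of the uplink a src/dst pair would use.

    Simplified model (an assumption, not the verified ESXi implementation):
    XOR the two IPv4 addresses as 32-bit integers, then take the result
    modulo the number of uplinks in the team.
    """
    src = int(ipaddress.IPv4Address(src_ip))
    dst = int(ipaddress.IPv4Address(dst_ip))
    return (src ^ dst) % uplink_count


if __name__ == "__main__":
    # Hypothetical addresses: one VM talking to several LAN clients
    # over a team of 4 NIC ports.
    vm_ip = "192.168.1.10"
    for client_ip in ("192.168.1.20", "192.168.1.21", "192.168.1.22"):
        print(vm_ip, "->", client_ip, "uses uplink", pick_uplink(vm_ip, client_ip, 4))
```

If this model is right, a given pair of IP addresses always lands on the same port, so a single transfer between two machines would never exceed one NIC's bandwidth; the balancing would only show up across many different source/destination pairs.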

Can anyone clear this part up for me?

NFS/iSCSI is another matter. I do not know if a single dedicated vNIC in a VM (ESXi included) is enough for NFS and iSCSI duties, or if multiple vNICs along with multiple vSwitches are best. I read that when network traffic stays within the ESXi box, it moves at a much higher rate because it does not go through any part of the network itself (vNICs or vSwitch). I don't know the specifics, but ESXi essentially bypasses the network portion and copies the data directly in memory from one VM to another. This should mean that a VM using an NFS/iSCSI target hosted by another VM on the same machine shouldn't need more than 1 NIC for the NFS/iSCSI traffic. But so far I have not found any concrete answer on this, as the articles I've read deal with the NFS/iSCSI target and the VM linked to the target being on separate physical machines.

Does anyone know the answer to this?

Depending on the answer above, I'll have even more questions about multipathing/MPIO with NFS/iSCSI, as it seems to be another issue that is handled in an enterprise environment in a couple of different ways.
