To hell with the budget, I got the Xeon and the 970.

Well, that was a bad decision, seeing as the GTX 970 is really a 3.5GB card with fewer ROPs and less L2 cache than Nvidia has advertised for the last 5 months. Nvidia sucks.

That's not actually true. The card still allocates the full 4GB and performance is still really good; the only difference is that users who read the news now know about it. You just got this info and now you think it's some kind of issue, but have you actually seen it on your PC? 99% of users won't even notice it while playing games. Not to mention it's barely a performance drop, as people have already tested:

http://www.guru3d.com/news-story/does-the-geforce-gtx-970-have-a-memory-allocation-bug.html

Regardless of whether there is an issue or not, it's still the best graphics card for the price on the market and I see no reason not to buy it. The GTX 960 is a fail, and the GTX 980 is much more expensive but not that much faster. On the AMD side there is nothing interesting, and there won't be for the next 2-3 months.

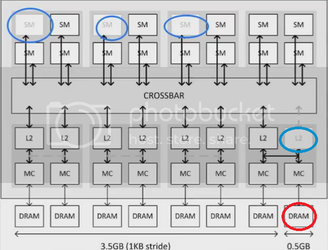

This is the issue with the 970, visually explained. Due to "binning", or shall we say "gimping", by Nvidia, the 970 has 7 memory controllers with their own L2 cache controlling 3.5GB of VRAM. For the remaining 0.5GB it has a single memory controller with no L2 cache of its own, which makes that 0.5GB of VRAM slower. That "8th" memory controller borrows cache from the 7th memory controller.
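For anyone who wants to see where the 3.5GB + 0.5GB split comes from, here is a rough sketch of the arithmetic. It assumes the 4GB is spread evenly over 8 memory controllers (512MB each); the variable names are made up for illustration and this is not anything taken from Nvidia's documentation.

```python
# Rough model of the GTX 970 memory split described in the post.
# Assumption: 4 GB is divided evenly across 8 memory controllers.
TOTAL_MB = 4096
CONTROLLERS = 8
FAST_CONTROLLERS = 7  # controllers with their own L2 cache, per the post

mb_per_controller = TOTAL_MB // CONTROLLERS

# Memory behind the 7 controllers that each have their own L2 cache.
fast_segment_mb = FAST_CONTROLLERS * mb_per_controller

# Memory behind the remaining controller that has to share cache.
slow_segment_mb = (CONTROLLERS - FAST_CONTROLLERS) * mb_per_controller

print(f"fast segment: {fast_segment_mb / 1024} GB")
print(f"slow segment: {slow_segment_mb / 1024} GB")
```

Under that assumption the fast segment comes out to 3.5GB and the slow one to 0.5GB, which matches the figures being thrown around in this thread.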

The 970 is basically a gimped 980. It's been neutered. I think everyone knew that all along.

What the heck is Nvidia doing using a 256-bit memory interface anyways? AMD uses a 512-bit interface on its high-end gaming cards.
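Bus width is only half of the picture, since peak bandwidth is bus width times the per-pin data rate. A quick sketch, assuming 7 Gbps effective GDDR5 on the 256-bit Nvidia cards and 5 Gbps on a 512-bit R9 290X (those data rates are not stated in this thread, so treat them as assumptions; the function name is just for illustration):

```python
def peak_bandwidth_gb_s(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak bandwidth in GB/s: bytes per transfer times transfers per second."""
    return bus_width_bits / 8 * data_rate_gbps

# Assumed effective memory data rates (Gbps per pin); not taken from the thread.
nvidia_256bit = peak_bandwidth_gb_s(256, 7.0)
amd_512bit = peak_bandwidth_gb_s(512, 5.0)

print(f"256-bit @ 7 Gbps: {nvidia_256bit} GB/s")
print(f"512-bit @ 5 Gbps: {amd_512bit} GB/s")
```

With those assumed clocks the 512-bit card still comes out ahead on paper, but by a lot less than the 2x the bus widths alone would suggest.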

Nvidia promoted it to reviewers as a 980 with fewer CUDA cores, fewer texture units, and a lower clock rate. It was promoted with 224GB/s of memory bandwidth instead of its actual 196GB/s, and with no mention of fewer ROPs, less L2 cache, or a segmented memory architecture that ensures it will age much worse than a 980 does. This card is gimped a lot more than their initial specs indicated. If they offer full refunds I'm going to get my $340 back and buy an R9 290X, as the power savings of Maxwell are meaningless to me.
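The 224GB/s vs 196GB/s gap is easy to reproduce on the back of an envelope. The sketch below assumes a 256-bit bus at 7 Gbps effective, split evenly over 8 memory controllers, with only 7 of them serving the fast 3.5GB segment; the names are hypothetical and it ignores any real-world scheduling details.

```python
# Back-of-the-envelope bandwidth figures for the GTX 970.
# Assumptions: 256-bit bus, 7 Gbps effective GDDR5, 8 equal memory controllers.
BUS_WIDTH_BITS = 256
DATA_RATE_GBPS = 7.0
CONTROLLERS = 8
FAST_CONTROLLERS = 7  # controllers serving the 3.5 GB segment

# Full-bus figure, i.e. what the spec sheet advertised.
advertised_gb_s = BUS_WIDTH_BITS / 8 * DATA_RATE_GBPS

# Each controller contributes an equal share of the bus.
per_controller_gb_s = advertised_gb_s / CONTROLLERS

fast_segment_gb_s = per_controller_gb_s * FAST_CONTROLLERS
slow_segment_gb_s = per_controller_gb_s * (CONTROLLERS - FAST_CONTROLLERS)

print(f"advertised:   {advertised_gb_s} GB/s")
print(f"3.5 GB part:  {fast_segment_gb_s} GB/s")
print(f"0.5 GB part:  {slow_segment_gb_s} GB/s")
```

Under these assumptions the full bus gives 224GB/s, the 3.5GB segment gets 196GB/s, and the last 0.5GB is left with 28GB/s.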
