So, I'm currently enjoying two Ubuntu builds with high-end Nvidia cards, but I'm really a Linux noob and also a high-end graphics noob. I'm quite used to AMD Tahiti/Hawaii cards, low-end mobos and even GPU extenders, because even PCIe 1x didn't really hurt the ppd. Then I got excited about the 970 and Ubuntu, and I ran out and bought a new mobo (a model I already knew) without looking too closely at what PCI Express bandwidth the Nvidia cards need.

Anyway, I would like a Linux way of seeing the current PCI Express link speed. The Nvidia X Server Settings tool gives some nice numbers, but only the maximum PCIe speed, not the current one (at least not that clearly).

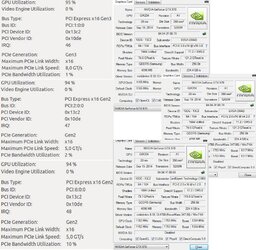

GPU-Z in Windows shows the real deal: there's the 1x extender I've got going!

How can I see the PCIe link speed and width (4x/8x/16x) in Linux?
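One possible approach, sketched here under assumptions rather than as a definitive answer: the Linux kernel exposes each PCIe device's negotiated link under `/sys/bus/pci/devices/<address>/` as `current_link_speed` and `current_link_width`, next to `max_link_speed` and `max_link_width` (`sudo lspci -vv` shows the same thing as the `LnkSta` line). The script below filters on NVIDIA's PCI vendor ID `0x10de` and the display-controller class prefix `0x03`; the function names are made up for illustration. Note that the cards may drop to a slower link while idle, so it is worth checking under load.

```python
from pathlib import Path

PCI_ROOT = Path("/sys/bus/pci/devices")
NVIDIA_VENDOR_ID = "0x10de"  # PCI vendor ID assigned to NVIDIA


def read_attr(dev: Path, name: str) -> str:
    """Return a sysfs attribute's stripped contents, or 'n/a' if it is missing or unreadable."""
    try:
        return (dev / name).read_text().strip()
    except OSError:
        return "n/a"


def gpu_links(root: Path = PCI_ROOT):
    """Yield (address, cur speed, cur width, max speed, max width) for NVIDIA display devices."""
    if not root.is_dir():
        return
    for dev in sorted(root.iterdir()):
        if read_attr(dev, "vendor") != NVIDIA_VENDOR_ID:
            continue
        # Class 0x03xxxx is a display controller; this skips the card's HDMI audio function.
        if not read_attr(dev, "class").startswith("0x03"):
            continue
        yield (
            dev.name,
            read_attr(dev, "current_link_speed"),
            read_attr(dev, "current_link_width"),
            read_attr(dev, "max_link_speed"),
            read_attr(dev, "max_link_width"),
        )


if __name__ == "__main__":
    for addr, cur_s, cur_w, max_s, max_w in gpu_links():
        print(f"{addr}: x{cur_w} @ {cur_s} (max x{max_w} @ {max_s})")
```

A card sitting on a 1x extender should then show up with a current width of `1` even though its maximum width is `16`.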

And btw, I said questions: I have managed to overclock the first GPU with Coolbits, but how can I get that option on the other cards?
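A common suggestion for multi-GPU rigs is to give every card its own X screen and set `Option "Coolbits"` for each of them, for example with `sudo nvidia-xconfig --enable-all-gpus` followed by `sudo nvidia-xconfig --cool-bits=<value>`. This is an assumption about the usual setup, not a verified fix for this rig. The hypothetical helper below only checks an existing `xorg.conf` and reports which `Device`/`Screen` sections carry a Coolbits value, which makes it easy to spot a card that was left out. It ignores comments and other xorg.conf subtleties.

```python
import re
from pathlib import Path

# Matches each Section "Device" / Section "Screen" block and captures its type and body.
SECTION_RE = re.compile(
    r'^\s*Section\s+"(Device|Screen)"\s*$(.*?)^\s*EndSection', re.M | re.S | re.I
)
IDENT_RE = re.compile(r'^\s*Identifier\s+"([^"]*)"', re.M | re.I)
COOLBITS_RE = re.compile(r'^\s*Option\s+"Coolbits"\s+"(\d+)"', re.M | re.I)


def coolbits_report(conf_text: str):
    """Return (section type, identifier, Coolbits value or None) for each Device/Screen section."""
    report = []
    for kind, body in SECTION_RE.findall(conf_text):
        ident = IDENT_RE.search(body)
        bits = COOLBITS_RE.search(body)
        report.append((kind, ident.group(1) if ident else "?", bits.group(1) if bits else None))
    return report


if __name__ == "__main__":
    conf_path = Path("/etc/X11/xorg.conf")
    if conf_path.is_file():
        for kind, ident, bits in coolbits_report(conf_path.read_text()):
            shown = bits if bits is not None else "not set"
            print(f'{kind} "{ident}": Coolbits = {shown}')
```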
