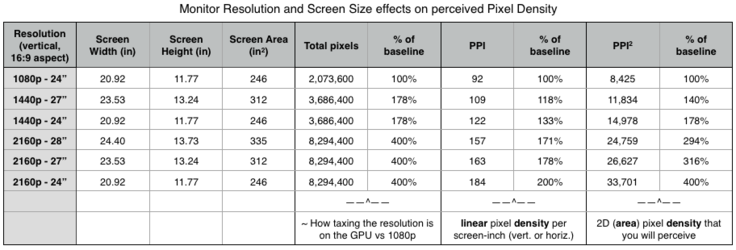

[Table] - Monitor Resolution & Screen Size impacts on perceived Pixel Density

--[Disclaimer: I use the phrase "image quality" in this thread as a placeholder to describe having a better viewing experience due to a higher pixel density, all else equal]--

I was looking into the idea of jumping from my current 1080p 24" monitor to 1440p, and I was curious as to how the jump in pixels would impact my perceived image quality compared to how much harder my GPU was going to have to work.

I created this chart to help me quantify the data and clear up some misconceptions I initially had.

Initially I was calculating pixels per inch [PPI] without realizing it, as that's the term I think we're all familiar with, but I was picturing pixels per square inch [PPI^2] in my mind. That's why I was disappointed when I saw that jumping from a 24" 1080p monitor to a 27" 1440p one was only going to give me an 18% increase in pixel density while costing me 78% more workload on the GPU (78% more pixels to render). This just didn't seem right to me; people rave about jumping from 1080p to 1440p. I knew that, at a constant resolution, pixel density drops as the screen gets bigger (pixels per square inch fall with the square of the diagonal), but I didn't think it would be that bad.

I realized that the online calculators I was using were calculating linear pixel density, not 2D pixel density. So I found one that did both, double-checked its math by hand, and made the table above.

As far as I can figure, what we perceive as higher image quality tracks pixels per square inch, not pixels per linear inch.

Argument: Hold screen size and aspect ratio constant (say 24" @ 16:9) ---> screen area is held constant. 4K (3840x2160) has 4 times the pixels of 1080p (1920x1080), spread across the exact same amount of screen area. This naturally means there is 4 times the pixel density with the 4K resolution. If you're using PPI, however, as you'll see above in the table, it looks like you're only doubling your pixel density and thus image quality, even though we know mathematically that you're quadrupling the number of pixels in the same area. That's where PPI^2 comes into play -- it's an accurate measure of the pixel density you'll perceive.
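
To spell the argument out, here is a rough derivation, assuming square pixels. The symbols are just labels for this sketch and do not come from the table: $W$ and $H$ are the horizontal and vertical pixel counts, $d$ is the diagonal in inches, and $w$ and $h$ are the physical width and height in inches.

$$
w = \frac{d\,W}{\sqrt{W^2 + H^2}}, \qquad
h = \frac{d\,H}{\sqrt{W^2 + H^2}}, \qquad
\text{PPI} = \frac{W}{w} = \frac{\sqrt{W^2 + H^2}}{d}
$$

$$
\text{pixels per square inch} = \frac{W H}{w\,h} = \frac{W^2 + H^2}{d^2} = \text{PPI}^2
$$

With $d$ fixed, going from 1080p to 4K doubles both $W$ and $H$, so PPI doubles while PPI^2 goes up by a factor of $2^2 = 4$, which matches the 4x pixel count on the same area.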

Going back to the jump I was talking about (24" 1080p to 27" 1440p): using PPI^2, I'd actually see a 40% increase in perceived pixel density for the 78% increase in GPU workload ---> much better.
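
For anyone who wants to check these percentages without an online calculator, here is a minimal Python sketch. It assumes square pixels and treats GPU workload as simply proportional to pixel count, which is a simplification; the function names `pixel_density` and `percent_increase` are made up for this example.

```python
import math


def pixel_density(width_px, height_px, diagonal_in):
    """Return (PPI, pixels per square inch), assuming square pixels."""
    diagonal_px = math.hypot(width_px, height_px)  # diagonal length in pixels
    ppi = diagonal_px / diagonal_in                # linear density
    return ppi, ppi ** 2                           # areal density is PPI squared


def percent_increase(old, new):
    """Percentage increase going from old to new."""
    return (new / old - 1) * 100


# (width in px, height in px, diagonal in inches)
old = (1920, 1080, 24.0)   # 24" 1080p
new = (2560, 1440, 27.0)   # 27" 1440p

old_ppi, old_ppsi = pixel_density(*old)
new_ppi, new_ppsi = pixel_density(*new)

ppi_gain = percent_increase(old_ppi, new_ppi)
ppsi_gain = percent_increase(old_ppsi, new_ppsi)
# Assumption: GPU workload scales with the number of pixels rendered.
pixel_gain = percent_increase(old[0] * old[1], new[0] * new[1])

print(f"PPI:   {old_ppi:.1f} -> {new_ppi:.1f} (+{ppi_gain:.1f}%)")
print(f"PPI^2: {old_ppsi:.0f} -> {new_ppsi:.0f} (+{ppsi_gain:.1f}%)")
print(f"Pixels to render: +{pixel_gain:.1f}%")
```

Swapping in other resolutions and diagonals should give the same kind of comparison for any pair of monitors.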
