Ashes of the Singularity - benchmark

- Thread starter Kenrou

- Start date

- Joined

- Aug 14, 2014

- Thread Starter

- #2

- Joined

- Aug 5, 2002

Interesting results. I should test it on mine and post here, if you don't mind. Hope to do it tonight.

I know DX12 is supposed to really shine when there are LOTS of units (entities) on the screen at once. Maybe the benchmark doesn't really show that as much.

Maybe in your case the CPU (AMD) is limiting the GPU from shining more.

Alaric

New Member

- Joined

- Dec 4, 2011

- Location

- Satan's Colon, US

I borrowed a link from ED: http://www.techspot.com/review/1081-dx11-vs-dx12-ashes/ It's a good read and covers this very subject. Given the results shown, I would also suspect the CPU as the bottleneck.

- Joined

- Aug 5, 2002

Alaric, the link you mention above explains my experience to a T with the DX11 vs DX12 dealing with an AMD card.

Alright got some results....

First settings... Same for all the tests.

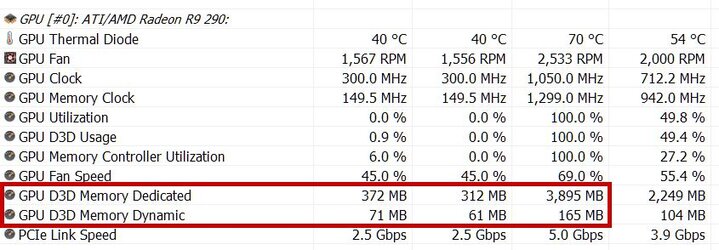

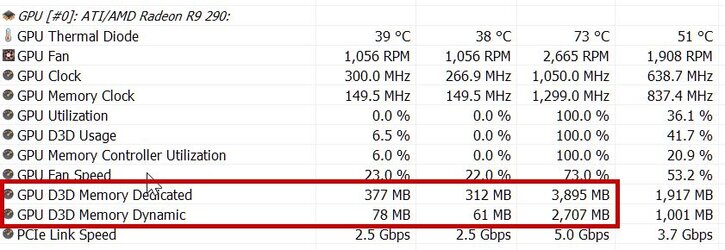

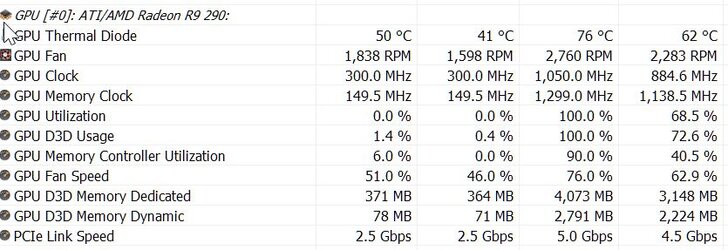

Second, GPU speeds and temp results (3C higher with DX12... but the tests were run pretty close back to back)

Next up, the GPU sensor results... as mentioned, it utilizes Direct Memory with DX12... quite a bit of it.

Otherwise GPU utilization was identical... 100% across the board for both.

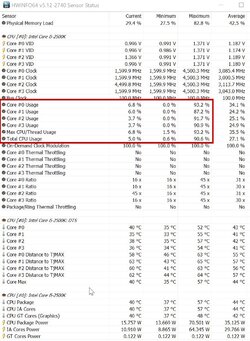

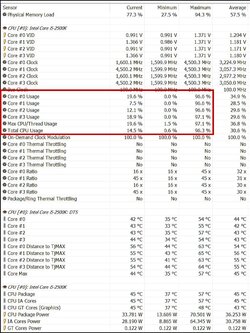

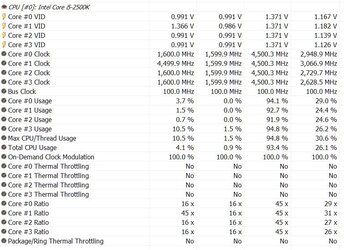

Now the CPU results. I do have just a quad-core i5 @ 4.5GHz. Here is what I found interesting and in line with what DX12 is supposed to do: it utilizes the CPU cores more efficiently. Just shy of 6% more CPU utilization using DX12.

DX11

DX12

And now the real results... Let's just say DX12 whups DX11 and then some....

- Joined

- Aug 5, 2002

Little extra fun.... Benched 4k same settings with just DX12

My GPU is the limit

CPU utilization went down to 93.4% overall, so right in the middle of DX11 and DX12 running at 1080p

GPU, well, it got hotter, went up to 76C this time... memory utilization went up slightly but Dynamic was a little down.

Now the bench, well, it took a hit vs 1080p, about half the FPS.... but it looked A LOT nicer on the screen.
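For a sense of scale on that 4K run, here is a rough sketch of the pixel math. It assumes "4K" means 3840x2160 and "1080p" means 1920x1080; the exact render resolution isn't stated in the post, and the function names are just made up for illustration. The point is only that 4K pushes four times the pixels, so losing about half the FPS means frame rate did not drop in proportion to pixel count in this run.

```python
# Assumed resolutions (not stated in the post): UHD "4K" and Full HD "1080p".
RESOLUTIONS = {
    "1080p": (1920, 1080),
    "4K": (3840, 2160),
}


def pixel_count(name):
    """Return the number of pixels rendered per frame at a named resolution."""
    width, height = RESOLUTIONS[name]
    return width * height


def pixel_ratio(high, low):
    """Return how many times more pixels `high` renders than `low`."""
    return pixel_count(high) / pixel_count(low)


if __name__ == "__main__":
    ratio = pixel_ratio("4K", "1080p")
    print(f"4K renders {ratio:.1f}x the pixels of 1080p per frame")
```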

Alaric

New Member

- Joined

- Dec 4, 2011

- Location

- Satan's Colon, US

Nice! And this, added to various tests and reviews, and the article I linked, is why my next rig is 99% likely to be Team Blue. And I may jump ship to Green, too. Zen may close the gap by a lot (dumping the failed BD architecture), but it isn't likely to be in time to counter the above results.

And AMD's bragging about their "advantage" with DX 12 just blew up in the CPU division's face. Way to shoot yourselves in the foot, guys. Geez. Looks like my hexacore, 4400 MHz FX has a fine future in an HTPC. LOL

- Joined

- Aug 5, 2002

And my poor CPU is 5 years old this month.... I should build a new rig

Alaric

New Member

- Joined

- Dec 4, 2011

- Location

- Satan's Colon, US

And my FX is two years old. LOL

I saw deathman20's results and immediately thought of my poor results. If your sig is up to date you have effectively the same GPU as I do.

My system runs that benchmark at the same game settings at around 28fps. So I went into the BIOS to reset everything and ran the benchmark again with the same result.

Then I OCed the GPU to 1100/1300 and ended up with 30fps.

Back to the BIOS, OCed the CPU to 4.8GHz, and still 30fps.

There is something funny with that test and the FX processor.

DX11 1080p

DX12 1080p

DX12 4.8GHz - 1100/1300MHz 1080p

DX12 1440p
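To put those overclock steps in numbers, here is a minimal sketch using only the FPS figures quoted above (28, 30, 30). The helper name `percent_gain` is hypothetical, and the stock clocks aren't given in the post, so this looks at frame rate only, not at gain per MHz.

```python
def percent_gain(before_fps, after_fps):
    """Return the relative change in frame rate, in percent."""
    return (after_fps - before_fps) / before_fps * 100.0


# Frame rates as reported in the post above.
stock_fps = 28.0         # everything reset in the BIOS
gpu_oc_fps = 30.0        # GPU overclocked to 1100/1300
gpu_cpu_oc_fps = 30.0    # GPU overclock plus CPU at 4.8GHz

if __name__ == "__main__":
    gpu_step = percent_gain(stock_fps, gpu_oc_fps)
    cpu_step = percent_gain(gpu_oc_fps, gpu_cpu_oc_fps)
    print(f"GPU overclock: {gpu_step:+.1f}% FPS")
    print(f"CPU overclock on top: {cpu_step:+.1f}% FPS")
```

The GPU overclock works out to roughly a 7% gain, while the CPU overclock adds nothing measurable in these runs.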

Alaric

New Member

- Joined

- Dec 4, 2011

- Location

- Satan's Colon, US

The FX is really that far behind. If you follow the link in the fourth post there is a good read that covers the situation pretty well. DX 12 is no friend to the FX. Zen should be an improvement. And I should be rich and good lookin', and that hasn't worked out very well either.

- Joined

- Feb 26, 2013

- Location

- Southwest Michigan

Alaric

New Member

- Joined

- Dec 4, 2011

- Location

- Satan's Colon, US

Is it DX12 or is it (CPU) just being used more with DX12 so it is showing its inherent weaknesses more?

Wait, that is like the, 'if a tree falls in the forest and nobody is there, does it make a sound' conundrum...

What he said.

Last edited:

FX might be sluggish but it isn't that slow. With better multicore support it should fare quite well.

Looking at the linked Techspot article, there isn't that much of a difference in performance at high quality settings for either AMD GPUs or CPUs compared to NVIDIA or Intel.

Intel CPUs really don't pull ahead unless you lower the game quality settings to medium.

Alaric

New Member

- Joined

- Dec 4, 2011

- Location

- Satan's Colon, US

I would venture a guess that medium settings will be the most common among casual gamers, people who won't spend $500+ on a discrete graphics solution. We get a lot of people at OC that are looking for a mid-priced card ($200-$250) that will "Play current titles" at medium settings. DX 12 was supposed to make that a lot more accessible. For AMD fans that may turn out to be a big Oops!

Again though, we're talking one title and one review/test. Another DX 12 game may run better on FX chips. Not really enough info to make a declaration, but if it's all we have when I upgrade, I'm lookin' at you, Blue.

ED must be loving this. Lately I've been defending W 10 and Intel. But facts is facts.

I would venture a guess that medium settings will be the most common among casual gamers, people who won't spend $500+ on a discrete graphics solution. We get a lot of people at OC that are looking for a mid-priced card ($200-$250) that will "Play current titles" at medium settings. DX 12 was supposed to make that a lot more accessible. For AMD fans that may turn out to be a big Oops!

You will still reach playable framerates with medium settings. If you would take a look at the article and those results, the FX CPU manages 55-60fps which is enough for a strategy title.

If your GPU is the limiting factor your CPU choice will matter less. I think you haven't really thought out what you write.

Alaric

New Member

- Joined

- Dec 4, 2011

- Location

- Satan's Colon, US

I didn't say the FX octacores were unplayable. They are, in every case shown, behind the i3 in that one article. By 40 fps in one instance. That's a LOT. And at 1080p, medium quality, which is probably a pretty fair representation of how the game will be played by a lot of folks. I wasn't trying to say DX 12 is here, sell your FX chips. However, building/buying a new rig at this time, I wouldn't touch an FX. Game development and implementation of DX 12 isn't likely to favor the FX any more, either. The more the CPU is utilized, the bigger the disadvantage.

I like my FX, but spending money on one right now for gaming wouldn't be a decision I'd make. YMMV.

I didn't say the FX octacores were unplayable. They are, in every case shown, behind the i3 in that one article. By 40 fps in one instance. That's a LOT. And at 1080p, medium quality, which is probably a pretty fair representation of how the game will be played by a lot of folks.

I get that, but if you have the GPU power to run this game at around 60fps at max quality, why would you play it at medium quality?

If you have a GPU that pushes 60 fps with medium settings, it doesn't really matter if your CPU could do 90fps with a 980 Ti, since your GPU is not up to it.
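The argument above can be sketched as a toy model. It assumes the delivered frame rate is simply the lower of what the CPU and the GPU could each sustain on their own, which is a deliberate simplification (real frame pacing is messier). The function names are made up for illustration; the 60 and 90 fps figures are the ones from the post.

```python
def effective_fps(cpu_limit_fps, gpu_limit_fps):
    """Toy model: the slower component caps the delivered frame rate."""
    return min(cpu_limit_fps, gpu_limit_fps)


def limiting_component(cpu_limit_fps, gpu_limit_fps):
    """Name which side is holding the frame rate back under the toy model."""
    if cpu_limit_fps < gpu_limit_fps:
        return "CPU"
    if gpu_limit_fps < cpu_limit_fps:
        return "GPU"
    return "balanced"


if __name__ == "__main__":
    # Figures from the post: the CPU could feed 90 fps, the GPU manages 60 fps.
    cpu_limit, gpu_limit = 90.0, 60.0
    fps = effective_fps(cpu_limit, gpu_limit)
    part = limiting_component(cpu_limit, gpu_limit)
    print(f"Delivered: {fps:.0f} fps, limited by the {part}")
```

Under this model a faster CPU changes nothing until the GPU limit rises above the CPU limit, which is the point being made about GPU-bound setups.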
