Just wanted to post some recommendations for hardware that isn't bleeding edge but still performs well in a ZFS all-in-one build.

A ZFS all-in-one is a machine running ESX on which you pass a SATA adapter through to a virtual machine (via VT-d or IOMMU) and run your NAS inside that VM. More info here:

http://www.napp-it.org/napp-it/all-in-one/index_en.html

Three builds that you can put together fairly cheaply on server-grade hardware:

Build #1 - s1156 i7

Supermicro X8SIL-F motherboard (32GB RDIMM max, s1156). This board will take a standard s1156 heatsink if you pry off the stock Supermicro fan hardware (which is designed for a more expensive Supermicro cooler that is NOT necessary). You should be able to find one on eBay for around $70. Grab the -F version (with IPMI) if you can find one.

Xeon X3440 processor. You probably don't want the X3430, as it lacks Hyper-Threading (HT); I would go with an X3440 at minimum. I believe you should avoid regular Core i3 parts, because they don't support VT-d, which you need to pass through your SATA controller. You should be able to find an X3440 on eBay for around $55.

You can use unbuffered (max 16GB) or registered ECC (max 32GB) memory; I would recommend the latter. The X8SIL is VERY picky about memory, so I only use Kingston KVR1333D3Q8R9S/8G DIMMs. You should be able to find these for around $60 per DIMM (don't pay more).

IBM M1015 SATA controller. This is the controller you will pass through to your NAS VM, along with the SATA disks attached to it. You can find these for anywhere from $70-$100 on eBay or on just about any decent-sized forum. It is a SATA3 (6Gb/s) controller; just flash it with IT firmware and let your NAS VM handle all the RAID calculations.

You do not need a special PSU; anything around 500W will be MORE than enough.

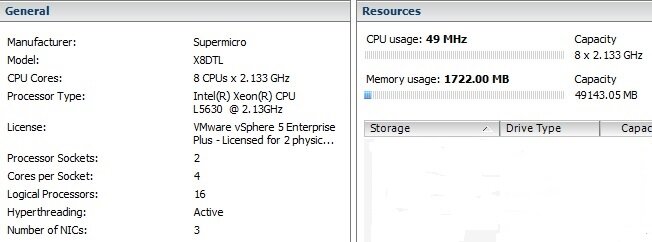

Build #2 - dual s1366 i7

Supermicro X8DTL-i motherboard. I think you can use a standard s1366 heatsink, but I have always used the active-cooling Supermicro heatsinks. The only caveat with the active coolers is that you're limited on orientation: you can't have both of them exhausting air in the same direction. But if you go with an Lxxxx-series CPU, they won't be generating a ton of heat. This motherboard is one of very few dual s1366 motherboards that still uses the ATX form factor, which is a HUGE selling point. No server case needed! You can regularly find these on eBay for around $150, which is a steal. ***Be sure to ask the seller about the BIOS version. I just purchased an X8DTL that would not support the L5630 CPU until I ran a BIOS update. Luckily I had a Xeon E5504 sitting around, which I can send out if you run into the same issue.***

Xeon L5630 processor. This is a 40W quad-core i7-class part with HT, and you can run two on this motherboard: 16 logical cores, more than enough for many VMs on an ESX box. You should be able to find these for under $40 per CPU on eBay. ***You can substitute the L5520, which is also an i7-class part with HT, for a few dollars less, but it is slightly older CPU technology than the L5630 and it's a 60W part.***
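The "16 logical cores" figure is just sockets x cores x threads per core. Here is a minimal Python sketch of that arithmetic that also compares combined TDP for the L5630 and L5520 options, assuming Intel's published specs (both are quad-core with HT; 40W vs 60W TDP). The `Cpu` class and `totals()` helper are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Cpu:
    name: str
    cores: int             # physical cores per CPU
    threads_per_core: int  # 2 when Hyper-Threading is available
    tdp_watts: int         # Intel's rated TDP per CPU


def totals(cpu: Cpu, sockets: int = 2) -> tuple:
    """Return (logical cores, combined TDP in watts) for a multi-socket build."""
    logical = sockets * cpu.cores * cpu.threads_per_core
    tdp = sockets * cpu.tdp_watts
    return logical, tdp


# Assumed specs: both parts are 4 cores / 8 threads; TDPs as quoted in the post.
L5630 = Cpu("Xeon L5630", cores=4, threads_per_core=2, tdp_watts=40)
L5520 = Cpu("Xeon L5520", cores=4, threads_per_core=2, tdp_watts=60)

for cpu in (L5630, L5520):
    logical, tdp = totals(cpu)
    print(f"2x {cpu.name}: {logical} logical cores, {tdp}W combined TDP")
```

Both options give the same thread count, so the L5630's advantage is mainly the 40W lower combined TDP (TDP is a thermal design rating, not measured wall power).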

Memory - this motherboard takes up to 96GB of RDIMM (using 16GB DIMMs). I would recommend 8GB ECC registered DIMMs, which you can normally find for under $50 per DIMM on eBay. 6x 8GB gives you 48GB of RAM.

Same M1015 controller as recommended previously

No special PSU requirement, but it is worth noting that only one of the 2x 8-pin CPU connectors on the motherboard needs to be used in the above described setup.

Build #3 - dual s1356 Xeon E5

Tyan S7045GM4NR - EATX. Dual s1356, 2x SATA II & 2x mini-SAS

(2) Supermicro SNK-P0035AP4 heatsink/fan assemblies

(2) Xeon E5-2418L w/ HT

Memory - 12x 8GB DDR3 ECC registered (96GB total). The board supports UDIMM, RDIMM, and LRDIMM, so there are lots of options here.

Same M1015 controller as recommended previously

No special PSU requirement, but it is worth noting that only one of the 2x 8-pin CPU connectors on the motherboard needs to be used in the above described setup.
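To sanity-check the memory figures across the three builds, here is a small Python sketch: total RAM is simply DIMM count x DIMM size, checked against the board maximum quoted in this post. No maximum is stated for the Tyan board, so that check is skipped for Build #3. `MemoryConfig` is a hypothetical helper, not part of any tool.

```python
from typing import NamedTuple, Optional


class MemoryConfig(NamedTuple):
    label: str
    dimms: int                   # number of DIMMs installed
    dimm_gb: int                 # size of each DIMM in GB
    board_max_gb: Optional[int]  # board maximum quoted in the post; None if not stated

    def total_gb(self) -> int:
        return self.dimms * self.dimm_gb

    def within_limit(self) -> bool:
        # With no stated maximum there is nothing to check against, so treat it as OK.
        return self.board_max_gb is None or self.total_gb() <= self.board_max_gb


# DIMM counts and sizes taken from the builds above.
CONFIGS = [
    MemoryConfig("Build #1 X8SIL-F, 4x 8GB RDIMM", 4, 8, 32),
    MemoryConfig("Build #2 X8DTL-i, 6x 8GB RDIMM", 6, 8, 96),
    MemoryConfig("Build #2 X8DTL-i, 6x 16GB RDIMM", 6, 16, 96),
    MemoryConfig("Build #3 S7045GM4NR, 12x 8GB RDIMM", 12, 8, None),
]

for cfg in CONFIGS:
    status = "OK" if cfg.within_limit() else "exceeds board max"
    print(f"{cfg.label}: {cfg.total_gb()}GB ({status})")
```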

Updated 3/1/15