Gentoo 2.6.20 patched with kerrighed 2.3.0.

This isn't for me, but for another member who needs help with this. He has a cluster of PCs with a FAH client running on each of them, and he wants to compress all data going in and out of FAH.

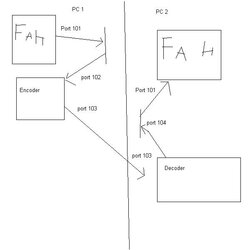

I was thinking along the lines of least resistance: two iptables entries. One redirects everything FAH sends to another port, where a program encodes it. The other redirects everything destined for FAH to a decoder, which then passes the decoded data on to FAH.

Will the following solution work?

PseudoCode

Code:

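    int main()
    {
        // register ports (port1 is the FAH side, port2 is the network side)
        char *buffer = malloc(4096);

        while (true)
        {
            // if data is received on port 1
            // then read it into buffer and
            sendData(port2, (int)encode(buffer), buffer);
            // encode(buffer) encodes in place and returns the length after encoding

            // if data is received on port 2
            // then read it into buffer and
            sendData(port1, (int)decode(buffer), buffer);
            // decode(buffer) decodes in place and returns the length after decoding

            // will need some playing around to handle messages that get split
        }
    }

And here is a diagram: see the image above.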
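On the point about split messages: TCP delivers a byte stream, so one encoded block may arrive in several pieces. One common way to deal with that, which is an assumption here and not something FAH or the plan above specifies, is to prefix each encoded block with its length so the decoding side can reassemble it. Below is a minimal sketch in C. The names `frame_write`, `FrameReader`, `reader_feed` and `reader_next` are hypothetical, and the 4096-byte limit simply mirrors the buffer size in the pseudocode. It only applies to the link between the encoder and the decoder, since both ends have to agree on the framing.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define MAX_PAYLOAD 4096   /* assumed limit, same as the pseudocode buffer */
#define HEADER_SIZE 4      /* 4-byte big-endian length prefix (assumed format) */

/* Write the length prefix followed by the payload into out.
   Returns the total frame size, or 0 if the payload is too large. */
static size_t frame_write(unsigned char *out, const unsigned char *payload,
                          uint32_t len)
{
    assert(out != NULL && payload != NULL);
    if (len > MAX_PAYLOAD)
        return 0;
    out[0] = (unsigned char)(len >> 24);
    out[1] = (unsigned char)(len >> 16);
    out[2] = (unsigned char)(len >> 8);
    out[3] = (unsigned char)len;
    memcpy(out + HEADER_SIZE, payload, len);
    return HEADER_SIZE + (size_t)len;
}

/* Holds bytes received so far; sized for one full frame. */
typedef struct {
    unsigned char data[HEADER_SIZE + MAX_PAYLOAD];
    size_t used;
} FrameReader;

/* Append newly received bytes. Returns 0 on success, -1 if they do not fit. */
static int reader_feed(FrameReader *r, const unsigned char *bytes, size_t n)
{
    if (n > sizeof r->data - r->used)
        return -1;
    memcpy(r->data + r->used, bytes, n);
    r->used += n;
    return 0;
}

/* If a complete frame is buffered, copy its payload to out, drop the frame
   from the reader and return the payload length. Returns -1 if more bytes
   are needed, -2 if the header announces an oversized payload. */
static long reader_next(FrameReader *r, unsigned char *out)
{
    if (r->used < HEADER_SIZE)
        return -1;
    uint32_t len = ((uint32_t)r->data[0] << 24) | ((uint32_t)r->data[1] << 16) |
                   ((uint32_t)r->data[2] << 8)  |  (uint32_t)r->data[3];
    if (len > MAX_PAYLOAD)
        return -2;
    size_t total = HEADER_SIZE + (size_t)len;
    if (r->used < total)
        return -1;
    memcpy(out, r->data + HEADER_SIZE, len);
    /* Shift any bytes belonging to the next frame to the front. */
    memmove(r->data, r->data + total, r->used - total);
    r->used -= total;
    return (long)len;
}
```

On the sending side, `frame_write` would be called on the output of `encode` before `sendData`. On the receiving side, each chunk that arrives is passed to `reader_feed`, and `reader_next` is called until it returns -1. Only complete payloads are then handed to `decode`.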