APRIL FOOLS

voodoo stuff was awesome.

I don't want more GPUs on the same PCB; I want more GPUs on a single chip, dual-core style.

Anandtech said: NVIDIA calls an individual SP a single processing core, which is actually true. It is a fully pipelined, single-issue, in-order microprocessor complete with two ALUs and a FPU.

GPUs are not CPUs. GPUs are already made up of several simple 'cores'.

The GT200 is already kind of like a dual-core G92. It has almost twice the number of 'cores'.

Graphics processing is highly parallel by nature, which is why we see such nice gains when the number of shader processors goes up, as long as there is enough data (high resolution and high detail settings) to keep those shader processors busy. This is unlike CPUs, where extra cores are not as easy to utilize because parallel programming is difficult.

It doesn't make any sense to make a 'dual-core' GPU. From a design standpoint it only makes sense to add more TPCs and more SPs per TPC...until they come up with some other design architecture.

There are already 10 Texture/Processor Clusters (TPCs) in the GTX285, with 240 Shader Processors and 60 Special Function Units (SFUs) within those TPCs.

According to Anandtech:

Link

The only thing holding back the GPU designs from more SPs is heat and chip yields (due to the dies being so large). Dual-core GPUs are ancient history. We now have 240-core and 800-core GPUs.

Let's see another side of the picture.

From an engineering and marketing perspective, I want to create a small, cheap die that will fit into the $250-400 range. This is supposedly the price range in which most people buy a video card.

If I want to produce the best-performing card, I just have to add a second core. That means I don't have to create an unnecessary number of different dies, which saves manufacturing time and cost. Think of 4870 (single small die) and 4870X2 (2 small dies) instead of 4870 and 4875 (1 large die).

Does anyone agree with me?

It would mean I don't have to create an unnecessary number of dies, and it would save time and cost of manufacturing.

Think of 4870 (single small die) and 4870X2 (2 small dies) instead of 4870 and 4875 (1 large die).

I think you missed my point. If you put both dies from the 4870X2 together on 1 die, you would have a 1600-core chip, not a dual-core.

That's why a GT200 die is almost like two G92 dies put together.

Right now, if you put 1600 ATI cores or 480 nVidia cores on 1 die, you have several problems: the die is too large, yields are terrible, and the heat concentration is too great. We will eventually get there as the lithography process shrinks, but as I said before, we are already well beyond dual-core GPUs. Next stop: 1000+ core GPUs.

With die design it is best to get as many dies from a single wafer as possible. The fewer dies you get from a single wafer, the more expensive each one is to produce, and you also end up with a larger share of defective dies.

I lost you here. All these cards have the same die. How is one small and one large? And what is the 4875?

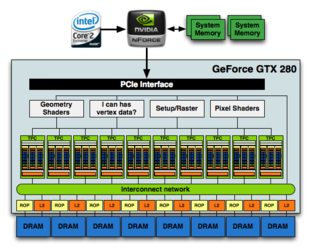

wait, did that pic say "can I has vertex data"

when did the lolcats start making GPUs?

So why did you say "It doesn't make any sense to make a 'dual-core' GPU"? I lost you there!

Because that's a huge step backwards. We already have 240-core GPUs. A 2-core GPU would be a huge step backwards unless each core can process 120+ threads simultaneously.

I don't get your logic. Perhaps that's because I have not seen a production line before.

Correct. I haven't seen one either, but they teach you this stuff in Computer Engineering.

Why would it be more expensive to create 2 different sets of dies and why would it result in more defective dies?

Creating 2 different sets of dies is inherently more expensive than creating 1. That should be obvious. More engineering time, more testing...more problems.

The bigger the die size, the fewer dies you can fit on a single wafer. Each wafer takes a particular time to process, so that labor time is now spread across fewer dies, making each die more expensive. Once the design is done, it's all about how many dies you can squeeze from each wafer. There are also going to be a statistical number of transistors that don't get created right and do not function. The chances of one or more of those errors affecting a particular die go up dramatically when the number of dies per wafer goes down.

Imagine a 12" silicon wafer that costs $1,000 to etch. Let's say it can fit 100 of die A or 25 of die B, and that on average there are 4 errors per wafer. Those 4 errors take out 4 dies or fewer on the A wafer, leaving you with 96+ good dies to sell. The same happens on the B wafer, so you end up with 21+ dies to sell. Die A costs you $10.00-10.42 each, and die B costs you $40.00-47.62 each.
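For anyone who wants to check the arithmetic, here is a minimal Python sketch. The function name `cost_per_good_die` is made up for illustration, and it assumes, as the example does, a flat $1,000 per wafer and that each error ruins at most one die (best case: no die is lost; worst case: every error lands on a different die).

```python
def cost_per_good_die(wafer_cost, dies_per_wafer, defects_per_wafer):
    """Return (best_case, worst_case) cost per sellable die.

    Assumption: each defect ruins at most one die, so the number of
    good dies lies between dies_per_wafer - defects and dies_per_wafer.
    """
    # Best case: no die is lost to a defect.
    best_case = wafer_cost / dies_per_wafer
    # Worst case: every defect hits a different die.
    worst_good = dies_per_wafer - min(defects_per_wafer, dies_per_wafer)
    worst_case = wafer_cost / worst_good if worst_good > 0 else float("inf")
    return best_case, worst_case


if __name__ == "__main__":
    # Numbers taken from the example: $1,000 wafer, 4 errors per wafer.
    for name, dies in (("A", 100), ("B", 25)):
        best, worst = cost_per_good_die(1000, dies, 4)
        print(f"die {name}: ${best:.2f} to ${worst:.2f} per good die")
```

With the numbers from the example this gives roughly $10.00 to $10.42 for die A and $40.00 to $47.62 for die B, so even two of the small A dies come in well under one B die.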

This is my reasoning: It's cheaper to program the machine/robot to create 1 type of die and put 2 of them on the same PCB than to create 2 different dies to produce similar end results.

And, once the dies are larger, there is a higher chance that some part of the circuitry comes out wrong, resulting in a defective die.
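The "bigger die, higher chance of a defect" point can be put in rough numbers with a simple Poisson yield model. To be clear, this model is a common textbook approximation and not something anyone in the thread used. The function name `poisson_yield` is hypothetical, and the sketch assumes defects are scattered randomly and evenly over the wafer, reusing the 4-errors-per-wafer figure from the wafer example above.

```python
import math


def poisson_yield(defects_per_wafer, dies_per_wafer):
    """Estimated fraction of dies with zero defects.

    Assumption (not from the thread): defects follow a Poisson
    distribution, so P(no defect on a die) = exp(-expected defects per die).
    """
    expected_defects_per_die = defects_per_wafer / dies_per_wafer
    return math.exp(-expected_defects_per_die)


if __name__ == "__main__":
    # Die A: 100 per wafer; die B: 25 per wafer (4x the area of A).
    for name, dies in (("A", 100), ("B", 25)):
        print(f"die {name}: estimated yield {poisson_yield(4, dies):.1%}")
```

Under these assumptions the small die A yields about 96% and the large die B about 85%, which is the same trend described above: fewer, larger dies per wafer means a bigger share of them are lost.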

Correct, but this seems to go against what you said above. Or were you answering your own question?

Yes, the 4870 and 4870X2 have the same die. I think ATI did this for the reason above. The 4875 is just an imaginary card. Sorry for not mentioning it earlier. The 4875 performs the same as the 4870X2, but it consists of only 1 large die. You know what I mean now?

Ok...4875 is imaginary. ATI could put 1600 cores on a single die right now. It's just that you'd need water to cool it at a minimum, and they would have a lot more defective dies. No one would be able to afford it either. It would cost a good bit more than 2 of the current dies.

*I hope this is seen as a constructive argument and not a flamewar/bait. I've already got a Profile Warning for "Insulted Other Member(s)/Flamebaiting" so please don't report me as I don't wish to get another one. Btw, I don't know why I got it. I merely said "we recommend overclocking as this is an overclocking forum."