Joined: Oct 31, 2002

Hey guys. I've been out of the computing scene for a while now. I built my last system in 2007, I believe, and I've been rocking it with almost no maintenance since.

I'm looking to replace the Core 2 Duo setup this year with an AMD FX-83xx, which will also prompt an upgrade to my cooling system to handle the extra 6 cores, lol.

Right now I run a pretty typical inline system with a T-line:

- Swiftech Apogee 1U
- Laing D5, 6 years of continuous running
- BIP3 with 3x Panaflo 120x38 mm fans at 7 V; very quiet.

Cooling just the CPU, I run the C2D E6750 at stock clocks and it stays at ambient at all times, even under full load. With my old 7900 GT in that loop, it would sometimes go a few degrees over ambient.
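Why adding the GPU pushed things a few degrees over ambient can be seen from a simple steady-state heat balance: the coolant settles where the radiator sheds exactly the heat the blocks put in, so the water's rise over ambient is roughly total heat divided by the radiator's conductance (W/K at a given fan speed). This is a minimal sketch; the conductance and the CPU/GPU loads below are hypothetical placeholders, not measured figures for a BIP3 or these parts, and it covers only water temperature, not the die.

```python
# Steady state: the coolant settles where the radiator sheds exactly the heat
# the blocks add, so  T_water - T_ambient = total_heat / radiator_conductance.

def water_over_ambient_k(heat_loads_w, rad_conductance_w_per_k):
    """Coolant temperature above ambient (kelvin) for a single-radiator loop."""
    if rad_conductance_w_per_k <= 0:
        raise ValueError("radiator conductance must be positive")
    return sum(heat_loads_w) / rad_conductance_w_per_k


# HYPOTHETICAL figures for illustration only (not measured values):
RAD_CONDUCTANCE_W_PER_K = 40.0  # triple-120 rad with slow fans
CPU_LOAD_W = 65.0
GPU_LOAD_W = 80.0

cpu_only = water_over_ambient_k([CPU_LOAD_W], RAD_CONDUCTANCE_W_PER_K)
cpu_and_gpu = water_over_ambient_k([CPU_LOAD_W, GPU_LOAD_W], RAD_CONDUCTANCE_W_PER_K)
print(f"CPU only:  water {cpu_only:.1f} K over ambient")
print(f"CPU + GPU: water {cpu_and_gpu:.1f} K over ambient")
```

Since the rise scales linearly with total heat, a bigger load (or a second rad adding conductance) moves the number proportionally under this simple model.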

I haven't had a chance to test my Radeon 5850 in that loop, as I'd have to modify my AquaExtreme MP1 to fit. I'll probably never get rid of that block; I'll just find other uses for it by modifying the hold-down. Same with my Apogee 1U.

It seems there hasn't been much development in liquid-cooling block technology other than its mass-market commercialization, so who knows.

I do know for sure I'll be dumping a LOT more heat into the new system, so at the very least I'll be adding a second rad and running the two in series. This does increase the load on the main pump, though, and I'm considering the following to keep overall flow rates high and temps down.

Soooo, not sure if anyone has messed with this idea in the desktop scene. I'm going to build a new desk/workstation, as I FINALLY have an office room in my house. I don't go to LAN parties, and I rarely work on my computers unless something is broken. This liquid cooling has run for 6 years almost non-stop with nothing more than a top-off on the T-line a few times a year.

Absolute reliability, Swiftech!

So the new system will be built into the desk in some way. I will probably design a test-bench-style motherboard tray in the desk's topmost drawer space, with a space below for all the water-cooling components.

The trick: I want to be able to remove, service, or install new hardware without disturbing the water-cooling system or having to drain and rebuild it, as I've had to in the past with the inline series system most people run.

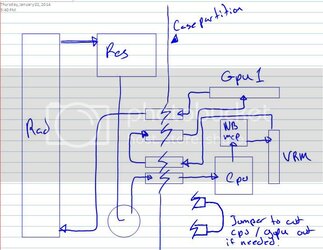

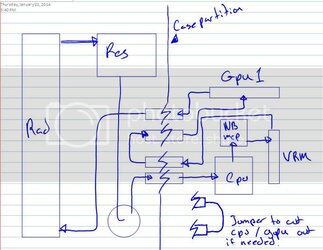

Essentially, I want to run dual loops: a system that simply circulates hot and cold water between two static reservoirs.

The components to be cooled will draw cool water from the cool res and return the warmed water to the hot res, where it waits to be cooled. This means each device needs its own pump and loop, which is no big deal.
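One thing the cold-res/hot-res layout changes is inlet temperature: in a series loop the second block is fed water the first block already warmed, while here every block draws from the cold res. The water's rise across a block is dT = Q / (mass flow × specific heat). A minimal sketch, assuming the flow rate, res temperature, and CPU/GPU loads below (all hypothetical):

```python
WATER_CP_J_PER_KG_K = 4186.0    # specific heat of water near room temperature
WATER_DENSITY_KG_PER_L = 0.998  # approximate, near 20 C


def coolant_rise_k(heat_w, flow_lpm):
    """Temperature rise of the water crossing one block: dT = Q / (m_dot * cp)."""
    mass_flow_kg_s = flow_lpm * WATER_DENSITY_KG_PER_L / 60.0
    return heat_w / (mass_flow_kg_s * WATER_CP_J_PER_KG_K)


# HYPOTHETICAL numbers for illustration.
COLD_RES_C = 30.0
FLOW_LPM = 4.0
CPU_W, GPU_W = 125.0, 150.0

# Series loop: the GPU block is fed with water the CPU block already warmed.
series_gpu_inlet_c = COLD_RES_C + coolant_rise_k(CPU_W, FLOW_LPM)

# Dual-res idea: every block draws straight from the cold res.
parallel_gpu_inlet_c = COLD_RES_C

print(f"GPU inlet, series loop:    {series_gpu_inlet_c:.2f} C")
print(f"GPU inlet, cold-res draw:  {parallel_gpu_inlet_c:.2f} C")
```

With these assumed numbers the series penalty is under half a kelvin, which suggests the bigger payoff of the dual-res layout is the serviceability rather than temperature.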

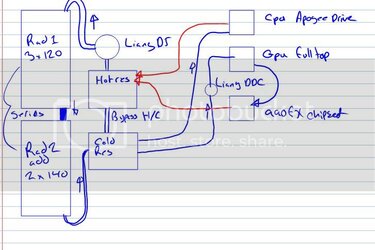

I was thinking of using an Apogee Drive II for the CPU, as that's half the work done right there. The other systems (GPU and motherboard) could share a loop with one pump between them, but I might adapt an Apogee Drive pump to work in this setup as well by making a new sandwich plate for the pump and the GPU block.

Simply plumbing this to work is no big deal; the trick is making it serviceable, so that adding or removing parts isn't super awkward.

I'm thinking of using block-to-block transfer for all the loops: one big block cooled by the rad system (which would then need only one res), with every other loop tapping into that block through smaller blocks of its own. What I'm curious about is how efficiently heat transfers between two water systems via copper blocks.
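A rough way to reason about block-to-block efficiency is as a chain of thermal resistances in series: each stage (block to water, water to transfer block, the bolted copper joint, big block to main-loop water) adds heat × resistance in kelvin. This sketch assumes every resistance value below (hypothetical K/W figures, not measurements) and ignores the small loop's own coolant rise:

```python
def die_temp_c(water_c, heat_w, resistances_k_per_w):
    """Series thermal-resistance chain: each stage adds heat * R kelvin."""
    return water_c + heat_w * sum(resistances_k_per_w)


# HYPOTHETICAL values for illustration; real ones depend on the blocks,
# mounting pressure, and thermal paste.
HEAT_W = 125.0
MAIN_LOOP_WATER_C = 30.0

direct_path = [0.10]  # CPU block -> main-loop water
block_to_block_path = [
    0.10,  # CPU block -> small CPU loop's water
    0.03,  # small loop's water -> its transfer block base
    0.02,  # bolted copper-to-copper joint (with paste)
    0.03,  # big system block base -> main-loop water
]

direct = die_temp_c(MAIN_LOOP_WATER_C, HEAT_W, direct_path)
via_blocks = die_temp_c(MAIN_LOOP_WATER_C, HEAT_W, block_to_block_path)
print(f"direct: {direct:.1f} C, via block-to-block: {via_blocks:.1f} C")
```

The point is structural rather than numeric: every extra interface adds its own Q × R, so the transfer joint and the extra water-to-copper steps are where a block-to-block design would lose temperature compared with drawing from the res directly.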

The most efficient approach is obviously to draw from the res itself, but if I could just unbolt the CPU loop's block from the main system block and pull it off without any leaks, wouldn't that be great for future servicing?

Just ideas being thrown around at this point. I might even investigate using Peltiers to transfer heat between two pieces of copper, letting heat pass from the component block to the main system more efficiently. Peltiers aren't very energy-efficient, though: the hot side has to reject both the heat being pumped and the electrical power driving the module, and since they typically move only about as many watts of heat as they consume, they roughly double the heat load on the main loop.
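The Peltier penalty follows from energy conservation: the hot side must shed the pumped heat plus the electrical input, and the electrical input is the pumped heat divided by the module's coefficient of performance (COP). A minimal sketch, assuming a 125 W load and a couple of illustrative COP values (hypothetical, not from any datasheet):

```python
def tec_heat_budget_w(heat_pumped_w, cop):
    """Return (electrical input, hot-side heat) for a Peltier/TEC.

    cop = heat_pumped / electrical_input. The hot side must reject both the
    pumped heat and the electrical power (energy conservation).
    """
    if cop <= 0:
        raise ValueError("COP must be positive")
    electrical_w = heat_pumped_w / cop
    return electrical_w, heat_pumped_w + electrical_w


# HYPOTHETICAL: a 125 W CPU load at a few assumed COP values.
for cop in (1.0, 0.5):
    power_w, hot_side_w = tec_heat_budget_w(125.0, cop)
    print(f"COP {cop}: TEC draws {power_w:.0f} W, main loop must shed {hot_side_w:.0f} W")
```

At COP 1 the main loop sees double the CPU's heat; below 1 it sees more than double.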

OBVIOUSLY, sticking to a simple series system with a monster pump is the easiest, cleanest way to do it. But I'm thinking back to all the hours spent building and bleeding systems in the past. It's no fun with a T-line system.
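The "monster pump" option comes down to where the pump curve meets the loop's restriction curve: parts in series add their pressure drops, so a second rad steepens the system curve and lowers flow unless the pump has more head. A rough sketch, assuming a linear pump curve and a quadratic pressure drop per component (both simplifications), with hypothetical coefficients and pump figures:

```python
import math


def operating_flow_lpm(shutoff_head_m, free_flow_lpm, restriction_coeffs):
    """Flow where a linear pump curve meets a quadratic system curve.

    Pump:   H = H0 * (1 - Q / Qmax)
    System: H = k * Q**2, where k is the sum of per-component coefficients
            (pressure drops of parts in series add up).
    """
    k = sum(restriction_coeffs)
    if k <= 0:
        return free_flow_lpm
    b = shutoff_head_m / free_flow_lpm
    # Positive root of k*Q^2 + b*Q - H0 = 0
    return (-b + math.sqrt(b * b + 4.0 * k * shutoff_head_m)) / (2.0 * k)


# HYPOTHETICAL coefficients in m / (L/min)^2 and pump figures, for illustration.
BLOCK, RAD, TUBING = 0.010, 0.004, 0.002
one_rad = [BLOCK, RAD, TUBING]
two_rads = [BLOCK, RAD, RAD, TUBING]

print(f"one rad,  current pump: {operating_flow_lpm(3.5, 20.0, one_rad):.1f} L/min")
print(f"two rads, current pump: {operating_flow_lpm(3.5, 20.0, two_rads):.1f} L/min")
print(f"two rads, bigger pump:  {operating_flow_lpm(6.0, 25.0, two_rads):.1f} L/min")
```

Real pump curves are not straight lines, so for actual numbers the manufacturer's published curve would replace the linear model.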
