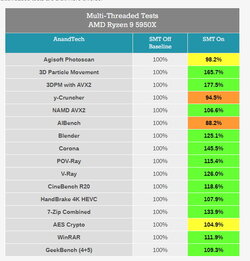

Starting with the two tests that scored statistically worse with SMT2 enabled: yCruncher and AIBench. Both tests are memory-bound and compute-bound in parts, where the memory bandwidth per thread can become a limiting factor in overall run-time. yCruncher is arguably a math synthetic benchmark, and AIBench is still early-beta AI workloads for Windows, so quite far away from real world use cases.

Most of the rest of the benchmarks are between a +5% to +35% gain, which includes a number of our rendering tests, molecular dynamics, video encoding, compression, and cryptography. This is where we can see both threads on each core interleaving inside the buffers and execution units, which is the goal of an SMT design. There are still some bottlenecks in the system affecting both threads getting absolute full access, which could be buffer size, retire rate, op-queue limitations, memory limitations, etc – each benchmark is likely different.

The two outliers are 3DPM/3DPMavx, and Corona. These three are 45%+, with 3DPM going 66%+. Both of these tests are very light on the cache and memory requirements, and use the increased Zen3 execution port distribution to good use. These benchmarks are compute heavy as well, so splitting some of that memory access and compute in the core helps SMT2 designs mix those operations to a greater effect. The fact that 3DPM in AVX2 mode gets a higher benefit might be down to coalescing operations for an AVX2 load/store implementation – there is less waiting to pull data from the caches, and less contention, which adds to some extra performance

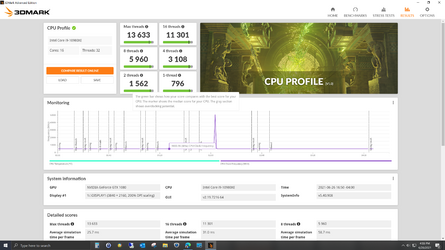

I found out my i9-7980xe was not busted

I found out my i9-7980xe was not busted  Does it use that much power? The video card was not OCed. It was also ~ itÂ’s 7yr warranty

Does it use that much power? The video card was not OCed. It was also ~ itÂ’s 7yr warranty