Joined: Aug 23, 2007

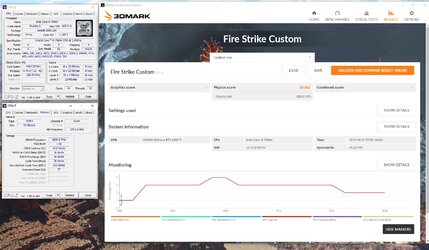

Currently I'm running a delidded 8700K @ 5.3 GHz in a full custom loop with 3 radiators cooling it. I do some gaming (Battlefield V, Planetside 2, maybe ArcheAge), but lately I've been learning more about video editing. My wife is an actress, and some of her auditions are "self tapes" that we record and edit (sound, color correction, cuts, etc.). I'm also starting a YouTube channel for my own investment firm. I've been using the Note 10 Plus to record and edit videos on the go, and it's surprisingly powerful for that, but for the more marketing-focused videos I'll be using the PC.

So say I'm 10-20% gaming, 20-40% video editing, and the rest is just random Plex transcoding (about 15 TB of movies/TV shows) and MS Office applications for work.

Last bit: yes, I plan on overclocking until it smokes.

AMD doesn't seem all that fun or overclocking-friendly in general, while word on the street is that the i9-10980XE is hitting 5.1 GHz ALL CORE on water. If that's true, the 10980XE will simply be a gaming/rendering monster.

Any thoughts? I'm leaning toward the Gigabyte X299X Master w/ the i9-10980XE.

(Yes, either one is overkill, but I want whichever is more overkill for my needs so I'm happy.)
