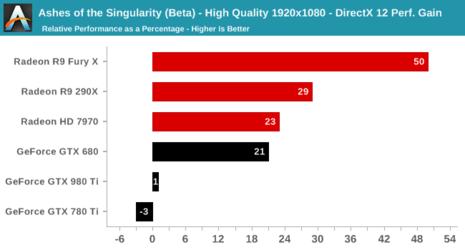

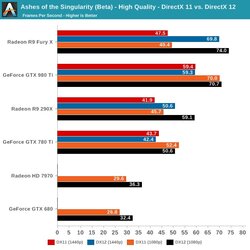

If Ashes is anything to go by, this will revitalize strategy and FPS games simply because of the sheer amount of crap you can put on the screen, but I'm not seeing much use for it outside of that (in gaming) unless you have very heavy workloads or textures. Haven't played Farmville in years; maybe I'll go back to it.