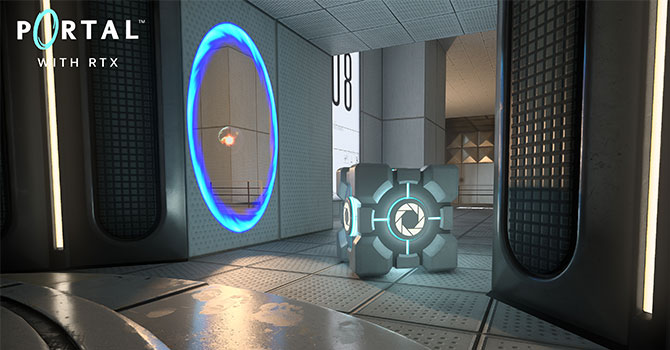

My mate at Uni used to do ray tracing we're talking mid 90s. This is the kind of stuff you would see: -

I'm having a PC built for me. The guy building it runs a VR centre with PCs using a mix of RTX 3080s and 3090s, driving Vive and Vive Pro headsets. I played Half-Life: Alyx on it and loved it; amazing experience. In his office he had an RTX 3090 PC that he plays on once customers are helmeted up and playing in the small cubicles. I asked him to put a ray-tracing game on, so he loaded Cyberpunk and Control and switched RTX on, but the graphics didn't become like the above. To be honest, I couldn't tell the difference between normal and RTX. I was expecting a HUUUGE difference, like the CGI in films such as The Lord of the Rings or Gladiator (the shots of the Colosseum from the outside). Why wasn't it like that? The 3090's a beefy card... It turned out to be a damp squib...