Why did Intel give up on development of i7-5775c type CPU's?

- Thread starter magellan

- Start date

- Joined

- Mar 7, 2008

Coincidentally I was just thinking of digging out my 5775C from my file server and using it to do a quad core build. See how it does on modern games. Downloading Win10 USB for it now! I loved it at the time.

The fight vs AMD is more complicated. We all know about Intel's fab woes, and they're reaching the end of their recovery plan now. The next two years will be very interesting, as Intel should finally be back on a competitive process vs AMD once again. Arrow Lake is expected to be the next desktop architecture in 2024, and may be made on Intel's 20A process. If they can achieve this without delay, it could give them a process advantage over AMD's Zen 5, which might at best be on TSMC N3 or a variation thereof.

I'd also note that AMD's 3D parts are a kinda workaround for a more fundamental problem: insufficient ram bandwidth. Core execution potential has been growing far faster than the ability to feed it. Big caches help a lot but are not a universal solution.

- Joined

- Jul 20, 2002

- Thread Starter

- #5

@mackerel

If U do any benches of modern games w/your i7-5775c could you please post the results here? I'd be particularly interested in 99th percentile FPS.

I like AMD's solution because it seems more elegant than pumping out more MHz and increasing power demands.

If I could afford to upgrade the AMD x3d parts would be my go-to.

- Joined

- Mar 7, 2008

Again, Intel are making the best of what they have. It will be a balance of what they can offer at reasonable cost. I have wondered what would happen if a modern L4 were implemented, on both Intel and AMD. On Intel, it would require a much higher bandwidth solution than that on the 5775C, which was rated around 50GB/s, comparable to dual channel 3200 RAM, even if it had better latency. On AMD I'd be more interested in a big L4 than the 3D cache adding to L3 as it currently does. There is a tradeoff here, but my reasoning is that currently AMD CPUs are best viewed as multiple subunits which don't scale well with workloads working on the same data set. Having an L4 cache at IOD level instead of a large L3 at CCD level would give better unified memory access on socket.

Intel better do something to match the AMD x3d parts because higher clocks and more power draw don't seem to be working anymore.

"If I could afford to upgrade the AMD x3d parts would be my go-to"

If you're primarily a gamer, that's a good idea. If you aren't, then you're losing some performance versus a non-X3D part. The average increase across 21 games at 1080p (TechPowerUp) was less than 3%, though pricing and power use are different. The 14700K is a good comparison. There it has more of a lead.

Remember, you don't get the benefits across all games at all times, and there is a clock speed difference that slows things down in some productivity applications. I believe it's much more limited in overclocking too.

These are solid chips, but there are drawbacks to them.

- Joined

- Mar 7, 2008

"If U do any benches of modern games w/your i7-5775c could you please post the results here? I'd be particularly interested in 99th percentile FPS."

I'm in the process of setting it up now. The physical build is done, but the slow process of downloading stuff to benchmark will take some time.

My tentative list of software I'm installing:

3DMark - might not bother as synthetics bore me

Call of Duty: MW2

Cities Skylines 2 - very CPU demanding for the sim, especially at bigger populations

Final Fantasy XIV: Endwalker Benchmark

Forspoken Demo - FSR3 FG support

SOTTR - bit old now but still has good technology support

Watch Dogs: Legion

The games above either have a standalone benchmark or have a benchmark built in. Forspoken does not meet that, but is interesting enough as it has FSR3 FG support. I debated throwing in GTAV but I feel it is too old. It does feel like most games do not bother with a demo or standalone benchmark these days. I'll consider anything not on this list, but if it is a recent AAA paid game assume I don't have it.

System:

i7-5775C stock

DDR3 2400 2x8GB (I think they're 2R modules too)

Some Z97 mobo

960GB SATA SSD

I need to double check but I think I'm running it on my TV at 1080p 120Hz. Don't think 4k60 is as interesting for this era GPU.

- Joined

- Jul 20, 2002

- Thread Starter

- #10

For some reason I thought all Broadwells were DDR4 capable. Is it only the Broadwell-E's (and maybe the Xeons) that are DDR4 capable?

If only Intel had manufactured a Broadwell-E w/eDRAM.

I hope someone at Intel is at least looking at the idea of re-incorporating eDRAMs into the CPU designs.

- Joined

- Mar 7, 2008

"I hope someone at Intel is at least looking at the idea of re-incorporating eDRAMs into the CPU designs."

Think about the main reason why the eDRAM was added in the first place. These are mainly mobile-focused CPUs, and it was there to give them an iGPU performance boost. At the time those iGPUs were the highest performing. For Broadwell desktop, they basically didn't bother making a dedicated offering, and just put the mobile offering on a desktop package. I think eDRAM did go a bit further into the mobile Skylake era, but it kinda fizzled out for whatever reason.

The eDRAM was rated at 50GB/s which is comparable to dual channel 3200 ram. Late DDR4 era ram matched it, and DDR5 smashes it already. Of course, they could go for something faster if they were to return to it today. I'm not sure if it was more helpful for bandwidth or latency though.
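
As a rough check on that comparison, theoretical peak DRAM bandwidth is the transfer rate times the bus width times the number of channels. The sketch below assumes a 64-bit (8-byte) bus per channel and dual-channel operation. The function name and the DDR5-6000 example speed are illustrative picks, not from the thread, and these are peak figures rather than anything you would measure.

```python
def peak_bandwidth_gbs(transfer_rate_mts: float, channels: int = 2, bus_bytes: int = 8) -> float:
    """Theoretical peak bandwidth in GB/s (decimal). Assumes 8 bytes per transfer per channel."""
    return transfer_rate_mts * bus_bytes * channels / 1000


EDRAM_RATED_GBS = 50.0  # eDRAM rating quoted in the post above

# DDR3-2400 matches the test system in this thread; DDR5-6000 is a hypothetical modern kit.
for name, rate in [("DDR3-2400", 2400), ("DDR4-3200", 3200), ("DDR5-6000", 6000)]:
    bw = peak_bandwidth_gbs(rate)
    ratio = bw / EDRAM_RATED_GBS
    print(f"{name} dual channel: {bw:.1f} GB/s ({ratio:.2f}x the eDRAM rating)")
```

Dual channel DDR4-3200 works out to 51.2 GB/s, which is where the "comparable to 50GB/s" figure comes from.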

I don't think eDRAM will make it into the enterprise offerings. They get HBM to play with instead. I'm also not sure about it making a return to consumer. I'm not writing it off, but at the end of the day it will always come down to a cost-value tradeoff, and it will be challenging to make a good case for it vs other technologies. Additional cache or similar doesn't have to be eDRAM, and other technologies may offer better value. Both AMD and Nvidia dGPUs feature bigger internal caches than before, although it is likely still far too expensive to implement for an APU.

Oh, forgot to say, downloads are still going. I found two other games to add to the list:

Death Stranding - Looks nice. Will walk around the starting area for perf numbers

Ghostwire Tokyo - never tried it, got it free recently

- Joined

- Aug 14, 2014

Testing the Intel i7-5775C quad-core CPU in 10 popular and demanding games in 2023, using an RTX 3070 discrete GPU.

In the history of Intel's modern CPUs, only one generation has been largely forgotten. The 5000 series, known by its architectural name of Broadwell, didn't see many versions released at retail. Most of those that did release were found in laptops, or in the 6000 series HEDT and v4 Xeon ranges. There were only two models of desktop CPU available for sale; in this video, I look at the i7-5775C; a CPU that managed to be innovative even within the confines of the quad-core CPU paradigm of 2015, but which Intel promptly forgot about shortly after release. Can its much-vaunted 128MB "L4 cache" help Broadwell find new life in 2023?

- Joined

- Jul 20, 2002

- Thread Starter

- #13

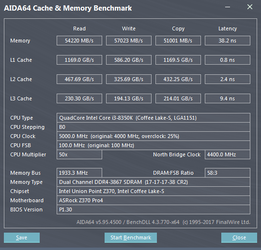

I have my DDR4 running at 3983 MHz w/my 9700k and I can't even get 40GB/s out of it, much less 50GB/s:

C:\Windows\system32>winsat mem

Windows System Assessment Tool

> Running: Feature Enumeration ''

> Run Time 00:00:00.00

> Running: System memory performance assessment ''

> Run Time 00:00:05.28

> Memory Performance 38988.96 MB/s

> Dshow Video Encode Time 0.00000 s

> Dshow Video Decode Time 0.00000 s

> Media Foundation Decode Time 0.00000 s

> Total Run Time 00:00:06.02

In Kenrou's video the i7-5775c actually had its 0.1% lows decline considerably when enabling the eDRAM in Battlefield V @ 4:21 (from 26.3 to 15.6, a 40% DROP in FPS).

I wonder why?

- Joined

- Jul 20, 2002

- Thread Starter

- #14

There was an eDRAM part as late as Coffeelake, but it was mobile only: the i7-8559U:

https://ark.intel.com/content/www/u...-8559u-processor-8m-cache-up-to-4-50-ghz.html

"but it was mobile only: the i7-8559U:"

Have you caught on to the theme yet?

- Joined

- Aug 14, 2014

"In Kenrou's video the i7-5775c actually had its 0.1% lows decline considerably when enabling the eDRAM in Battlefield V @ 4:21 (from 26.3 to 15.6, a 40% DROP in FPS). I wonder why?"

It's Battlefield V, not a game exactly known for fluid PvP. Maybe he got a random lag spike, or too many cheaters (looking at the North American servers) introduced latency/jitter/stutter in that particular benchmark.

- Joined

- Mar 7, 2008

"I have my DDR4 running at 3983 MHz w/my 9700k and I can't even get 40GB/s out of it, much less 50GB/s:"

The numbers I gave are the theoretical peak values. There's no guarantee they'll ever be hit in practice.

I'm not familiar with Windows test results. Most commonly I see Aida64 numbers, but that requires the paid version.

This is a run I did 6 years ago. It was a full manual overclock/tweak of RAM timings. It was so painful I never did it again. At that speed the theoretical max is about 60GB/s. I got 54GB/s read and 57GB/s write. I'll take it. If you just run XMP, expect much worse.
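
To put the measured numbers next to the theoretical peak, the sketch below works out what fraction of peak each result reaches. It assumes dual-channel operation with a 64-bit bus per channel for the DDR4-3983 system, and takes the roughly 60GB/s peak for the tuned run as stated above. The helper name is made up for illustration. winsat and Aida64 don't measure the same thing, so treat the comparison as rough.

```python
def efficiency(measured_gbs: float, peak_gbs: float) -> float:
    """Fraction of theoretical peak bandwidth actually achieved."""
    return measured_gbs / peak_gbs


# DDR4-3983, assumed dual channel with 8 bytes per transfer per channel.
peak_3983 = 3983 * 8 * 2 / 1000  # theoretical peak in GB/s

# winsat reports MB/s; treated here as decimal megabytes (an assumption).
winsat_gbs = 38988.96 / 1000

print(f"winsat on DDR4-3983: {efficiency(winsat_gbs, peak_3983):.0%} of peak")
print(f"tuned run, read:     {efficiency(54, 60):.0%} of peak")
print(f"tuned run, write:    {efficiency(57, 60):.0%} of peak")
```

Under those assumptions the winsat figure lands a little over 60% of peak, while the hand-tuned run reaches 90% or better.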

"It's battlefield V, not a game exactly known for fluid PVP, maybe he got a random lag spike or too many cheaters (looking at the North American servers) that introduced latency/jitter/stutter in that particular benchmark"

I'd be very cautious of 0.1% lows unless the result is repeated several times and proven to be repeatable. It can be very sensitive to random other things going on with the system. Personally I prefer to go no further than 1% lows, as there's a bit more of a buffer.
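
A minimal sketch of why 0.1% lows are so fragile, using made-up frame times rather than data from the video. It uses one common definition of an "N% low" (average FPS over the slowest N% of frames); other tools use a percentile frame time instead, so the exact numbers are illustrative only.

```python
import random


def low_fps(frame_times_ms, fraction):
    """Average FPS over the slowest `fraction` of frames (one common definition)."""
    worst_first = sorted(frame_times_ms, reverse=True)
    n = max(1, int(len(worst_first) * fraction))
    slowest = worst_first[:n]
    return 1000 * len(slowest) / sum(slowest)


random.seed(0)
# Hypothetical run: 3600 frames of 15-18 ms each, roughly a minute at ~60 FPS.
clean = [random.uniform(15.0, 18.0) for _ in range(3600)]
# Same run, but three frames are replaced by unrelated 60 ms hitches.
hitched = clean[:-3] + [60.0] * 3

for label, data in [("clean", clean), ("3 hitches", hitched)]:
    print(f"{label}: 1% low {low_fps(data, 0.01):.1f} FPS, "
          f"0.1% low {low_fps(data, 0.001):.1f} FPS")
```

With 3600 frames the 0.1% low is decided by only three frames, so three random hitches set it outright, while the 1% low averages over about 36 frames and moves far less.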

I haven't watched the video yet but did skim it to check out some charts. I didn't know you could turn off the eDRAM, or just forgot. It's been such a long time since I played with that system. It would double my testing but I am curious about its effect.

I think I've got all but one download done for my own proposed testing, so I could start it tomorrow. I have played a little Honkai Star Rail, which isn't the most demanding game. It runs fine at default 4k60 High with CPU typically not exceeding 30% or so. Genshin Impact was a little more stressful. I was running at 1080p60 and CPU occasionally crossed 50%. Didn't try it at 4k at all since my intention was to test everything at 1080p anyway.

- Joined

- Mar 7, 2008

Testing may be delayed somewhat. I've been trying out the system and basically the 1080Ti can't get enough air in that system. Not sure what to do yet. I could swap it with a 3070, then I could try RT game settings too. 3070 is lower power and the cooler on it is overkill as I think they reused the same one from 3080. I don't think I'm enthusiastic enough to extract the mobo and run open bench.

- Joined

- Jun 6, 2002

i got a 5675c still waiting to replace the 4690Kf in a build. main reason i wanted one for so long is the Iris Pro with eDRAM. my understanding at the time (back then) was that the Iris Pro would tap into the 128MB for part of its graphics memory. found this to be an interesting read back in the day testing...

www.pcmag.com

Intel Core i7-5775C Review

Intel's highest-end "Broadwell" desktop CPU delivers the best integrated graphics we've seen from a socketed processor, as well as very good raw compute performance. (Caveat: Pending next-generation CPUs and support for an end-of-life socket may narrow its appeal for some buyers.)
