As far as I can tell, nothing too unusual is going on with the site in question. The only slightly tricky part is that the images do not live in the same "directory" structure as the pages; they are linked in from elsewhere on the server. Plenty of tools should work, including things like DownThemAll! run manually on each page.

Anyway, using

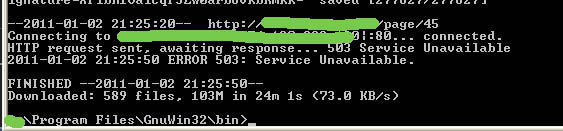

wget:

`wget.exe -p -o c627627.txt <website>` grabs everything required to display that one page, including images.

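`wget.exe -r -p -np -o c627627.txt <website>` follows links recursively from that page and grabs everything required to display each page it reaches. It stays within the starting directory (and below) and stops at wget's default recursion depth of five levels.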
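For reference, the same two commands can be written with wget's long option names, which are easier to read back later. This is a minimal sketch as two POSIX shell functions; the names `grab_page` and `grab_site`, and the optional second argument for the log file, are my own illustrative choices and not part of wget:

```shell
#!/bin/sh
# Hypothetical wrappers around the two commands above, spelled with long options.
# $1 = URL to fetch, $2 = optional log file (defaults to the c627627.txt used above).

# Same as: wget -p -o LOG URL  (one page plus everything needed to display it)
grab_page() {
    wget --page-requisites --output-file="${2:-c627627.txt}" "$1"
}

# Same as: wget -r -p -np -o LOG URL  (recurse, but never above the start directory)
grab_site() {
    wget --recursive --page-requisites --no-parent \
         --output-file="${2:-c627627.txt}" "$1"
}
```

For example, `grab_site 'http://example.web.com/page/1'`. The long options work the same way when typed directly after `wget.exe` on Windows.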

Explanation of arguments:

-p = grab everything needed to display a page (images, stylesheets, and so on)

-o c627627.txt = output log to file

-r = follow links recursively (breadth-first) and download whatever they point to, which is why it can pull down far more than you expect

-np = never ascend to the parent "directory". It does not by itself stop wget from following links to other sites; recursive retrieval already stays on the starting host unless you add -H.

For example, a recursive retrieval starting at http://example.web.com/page/1 would only visit pages whose URLs start with http://example.web.com/page/
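To make the -np rule concrete, here is a simplified model of the prefix check described in the example: a link is only followed if it lies inside the directory of the start URL. This is an illustration only, not how wget implements it internally, and the function name `under_start_dir` is hypothetical:

```shell
#!/bin/sh
# Simplified model of the -np (--no-parent) rule, for illustration only.
# $1 = start URL, $2 = candidate link. Returns 0 if the link would be followed.
under_start_dir() {
    start_dir=${1%/*}/          # http://host/page/1 -> http://host/page/
    case "$2" in
        "$start_dir"*) return 0 ;;   # same directory or deeper: allowed
        *)             return 1 ;;   # parent or sibling directory: skipped
    esac
}
```

With the URLs from the example, `under_start_dir http://example.web.com/page/1 http://example.web.com/page/2 && echo followed` prints `followed`, while a link to `http://example.web.com/other/1` is rejected.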

Finally, for the site in question, the images do get downloaded, but with a mangled file extension that you'll probably want to rename.
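If the odd extensions turn out to be a query string glued onto a normal image name, a small loop can clean them up. This is a sketch under an assumption I have not verified for this site: that the names look like `photo.jpg@id=42` (wget on Windows writes `@` in place of the `?` that starts a query string) or `photo.jpg?id=42` (wget on Unix-like systems keeps the `?`). The function name `fix_extensions` and the list of image extensions are hypothetical choices:

```shell
#!/bin/sh
# Hypothetical cleanup helper. Assumes mangled names are an image name followed
# by a query string, e.g. photo.jpg@id=42 or photo.jpg?id=42.
# $1 = download directory to scan (defaults to the current directory).
fix_extensions() {
    find "${1:-.}" -type f -name '*[@?]*' | while IFS= read -r f; do
        dir=$(dirname "$f")
        base=$(basename "$f")
        clean=${base%%[@?]*}            # drop everything from the first '@' or '?'
        case "$clean" in
            *.jpg|*.jpeg|*.png|*.gif) ;;    # looks like an image: rename it
            *) continue ;;                  # anything else (e.g. HTML): leave alone
        esac
        # Never overwrite a file that already has the clean name.
        [ -e "$dir/$clean" ] || mv -- "$f" "$dir/$clean"
    done
}
```

Run it on the folder wget created, e.g. `fix_extensions ./example.web.com`. Only lowercase extensions are matched; extend the `case` list if the site uses others.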