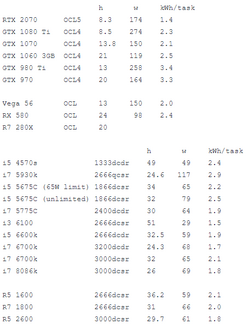

I've done a bunch of testing. CPUs are at stock; ram is probably running above stock in many cases.

dc = dual channel

qc = quad channel

sr = single rank

dr = dual rank

The test uses genefer, which looks for prime numbers of a particular form. The software is available for both CPU and GPU, and multi-thread support was recently added to the CPU version, which makes CPUs interesting again. Times are estimated hours for 304208^2097152+1, which is representative of the current search range. In case you wonder what the OCL number is, it indicates which method (transform) is used. The software runs a mini-bench at the start to find out which is best for the hardware in use at the time. The maths behind it is too complicated for me, but it could be said that the methods aren't doing exactly the same calculation; the software just aims for the best path to the result.

For GPU tests I left the cards a short time to warm up before taking the estimate, as they all downclock as they get hotter. I can't say for sure I left them long enough to stop slowing down.

Power is as reported in software. It should be noted the different scenarios are NOT comparable with each other. That is, we have 4 cases: nvidia GPU, AMD GPU, AMD CPU, Intel CPU.

nvidia GPU - I understand this is the total power taken by the card

AMD GPU - I understand this is only the power taken by the GPU, so doesn't include ram, VRM efficiency etc.

AMD CPU - this is the reported core+SoC total power

Intel CPU - this is the reported socket power

I also worked out kWh/unit as a kind of performance per watt metric, which will obviously still be limited by how those watts are reported.
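For anyone wanting to redo the kWh/unit sums, here is a minimal sketch of how I'd expect it to work out: reported power times estimated hours, divided by 1000. The function name and the sample figures are made up for illustration and are not results from my testing, and it assumes the reported power stays steady for the whole unit.

```python
def kwh_per_unit(power_watts: float, hours_per_unit: float) -> float:
    """Energy in kWh to finish one unit, assuming steady reported power."""
    return power_watts * hours_per_unit / 1000.0


# Hypothetical figures for illustration only, not measured results.
examples = {
    "device A": (150.0, 10.0),  # (reported watts, estimated hours)
    "device B": (100.0, 20.0),
}

for name, (watts, hours) in examples.items():
    print(f"{name}: {kwh_per_unit(watts, hours):.2f} kWh/unit")
```

Lower is better, and the same caveat applies: the number is only as good as the power reading that goes into it, so it shouldn't be compared across the four cases above.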

General observations:

On nvidia GPUs, Turing is more efficient than Pascal, which is more efficient than Maxwell. Not really any surprise there. The Turing test (pun not intended) picked OCL5, whereas Pascal/Maxwell picked OCL4. The 970 did momentarily switch to OCL5, which made the time estimate jump up to 24 hours. The software may switch like this if it suspects a bad calculation due to hardware errors, as the different methods work in different ways and some are safer than others. The 970 in question was an EVGA card with a factory-overclocked core; the ram was still at stock. I didn't try removing the overclock to see if that got rid of the transform switch.

On AMD GPUs, the ones I have at least all picked the OCL transform. The 280X is faster than the 580X, but it didn't report power so I don't know how well it does there. Vega did well in general. Without double checking, I think OCL uses FP64, which would be a strong point for the 280X.

On Intel CPUs, it isn't a big surprise, but it seems many of these CPUs are held back by ram bandwidth. Look at the two 6700ks for example. One takes about 30% longer due to slightly slower ram and not having dual rank. It is unclear if Broadwell's L4 is helping here, but comparing against the 6600k I'm leaning towards yes. Note the 5675C was tested twice, as the mobo used has a bug that hard limits it to 65W TDP, and I can only relax the limit via software after boot.

On AMD CPUs, it feels like a similar story to Intel, in that those systems could use better ram.