- Joined: Aug 14, 2014

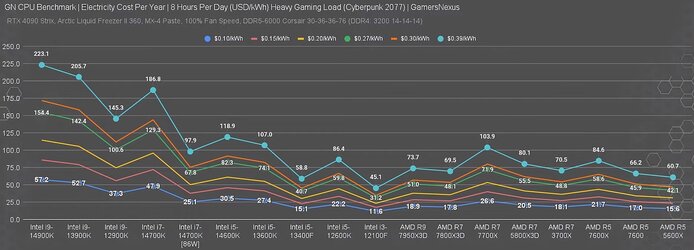

Damn, some of these charts are brutal...

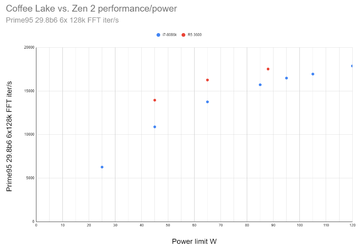

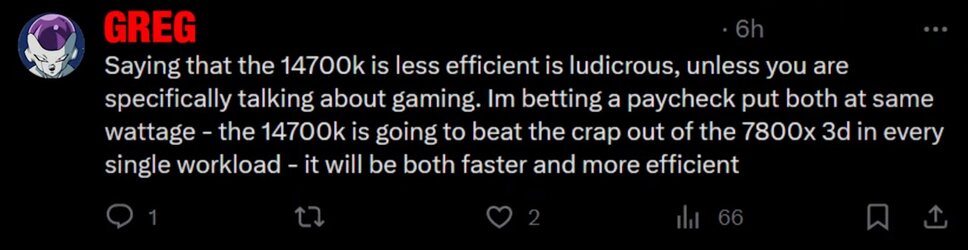

"A lot of you have requested that we run power consumption tests for gaming on CPUs, so we've finally done that! And alongside fulfilling that request, we also wanted to tackle a comment from Greg, our recurring antagonist commenter, who "requested" CPU efficiency testing. These benchmarks look at the efficiency and raw power consumption of Intel vs. AMD CPUs. There's a particular focus on the AMD Ryzen 7 7800X3D and Intel Core i7-14700K and 14900K CPUs, as these are the most recent and directly comparable / best gaming CPUs."

Intel:

AMD ⇾ Hold my beer: