Nvidia RTX 4080 SUPER Review vs 4080, 7900 XTX & more!

- Thread starter Kenrou

- Start date

- Joined

- Jan 4, 2024

- Location

- Indiana

> They could just eliminate all this e-waste and go back to basic **** + titans with extra vram for workloads instead of ****/****s/****ti/whatever extra models will be invented in the future...

I don't know about all that now.

> They could just eliminate all this e-waste and go back to basic **** + titans with extra vram for workloads instead of ****/****s/****ti/whatever extra models will be invented in the future...

Or, continue to separate church and state (consumer vs pro cards)... I like that better. People bitched about the Titan costing too much or not having the same chutzpah as the pro cards. No GPU maker can win, lol.

The Ti/Supers don't faze me on the consumer side. The Supers are just a mid-cycle refresh.

Honestly, I don't mind the number of GPU SKUs... I do despise the number of motherboard SKUs... THAT is e-waste, lol!!!

> Exactly, they are completely pointless e-waste. Make 3 basic models, make 3 models for workloads with extra vram and start working on the next gen. So much waste in this industry it's mind boggling...

3 GPU SKUs, though, feels paltry and leaves HUGE gaps between the SKUs (performance-wise). I like 5 with a refresh in between, as that covers the three basic resolutions/performance points and your high Hz/FPS crowd in between. Then have the pro cards. For NV desktop, it's 4060, 4070, 4070 Ti, 4080, 4090. Hell, they already have 5, lol. Maybe it's just me, but I like the product stack after the refresh too... especially what it does to pricing of the originals.

- Joined

- Jan 4, 2024

- Location

- Indiana

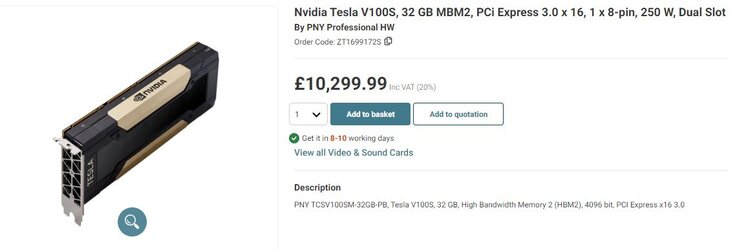

Can it fold?

That's a Turing-based card, come down in price. At work I had the 16GB version, which was retailing at £12,000 at the time. I'd just x2 2060 or 2080 or 3090 or the Turing Titan...

- Joined

- Mar 7, 2008

> It's not like I'm the Elon Musk of the French Riviera... 4K monitors and televisions are COMMON now.
>
> You should be able to game decently at that resolution with just about any video card mid-level and up.

You have unrealistic expectations then. You can game at 4k, and I did, on a 3070. You will have to make settings choices depending on what you prioritise. For starters, max/ultra might be out unless it is a relatively simple game. Medium-High is a maybe depending on the game, helped out in large part by upscaling. At least most modern games support some form of that now.

Current gen consoles output at 4k on the rough equivalent of a 2070. Ok, frame rates might be a bit more "cinematic" than PC players are used to. You have to make your choices based on what you actually have.

> EDIT: I mean COME ON... You get a 4080 Super and, CONGRATULATIONS!, ...you've just beaten a regular 4080 by one frame...

At about 200 currency units less cost.

> Exactly, they are completely pointless e-waste. Make 3 basic models, make 3 models for workloads with extra vram and start working on the next gen. So much waste in this industry it's mind boggling...

Whoever kicked off the e-waste toxicity is e-waste. 3 models is nowhere near enough to cover gaming alone, never mind non-gaming use cases.

I'd see the following as a good lineup to have:

1. sub-75W no power connector - lower 50 tier (may skip some generations - this area overlaps with APU perf but APUs can never replace these)
2. entry level gaming - 50 tier
3. mainstream-value gaming - 60 tier
4. mainstream-performance gaming - 70 tier
5. high end gaming - 80 tier
6. halo product - 90 tier, previously Titans

Maybe at most we can drop the Ti but it and Supers are ok for a refresh during a cycle.

Besides, where's the waste? It is only a waste if it is not selling and has to be scrapped. It sells, so by definition it is not a waste.

Another thing I hate about the tech "community" is those who think products/features shouldn't exist because they don't have a use for them, or who fail to see there are uses for them. It isn't all or nothing.

> That's a Turing-based card, come down in price. At work I had the 16GB version, which was retailing at £12,000 at the time. I'd just x2 2060 or 2080 or 3090 or the Turing Titan...

That's Volta. It never made consumer cards. I did see a Titan V in a shop last year, almost tempted to get it as a collector's item but it was too expensive.

Don't the higher end pro GPUs come with ECC RAM too? A glitch while gaming might be a few odd pixels that disappear as quickly as they appear, but it matters more for professional uses.

- Joined

- Aug 14, 2014

- Thread Starter

- #29

> Whoever kicked off the e-waste toxicity is e-waste. 3 models is nowhere near enough to cover gaming alone, never mind non-gaming use cases.
>
> I'd see the following as a good lineup to have:
>
> 1. sub-75W no power connector - lower 50 tier (may skip some generations - this area overlaps with APU perf but APUs can never replace these)
> 2. entry level gaming - 50 tier
> 3. mainstream-value gaming - 60 tier
> 4. mainstream-performance gaming - 70 tier
> 5. high end gaming - 80 tier
> 6. halo product - 90 tier, previously Titans
>
> Maybe at most we can drop the Ti but it and Supers are ok for a refresh during a cycle.
>
> Besides, where's the waste? It is only a waste if it is not selling and has to be scrapped. It sells, so by definition it is not a waste.

I see the waste when I see them still on the shelves or in warehouses, unused years after being released, and then sold for pennies, thrown in the scrap or finally used in those Dell "gaming" garbage heaps they use to scam people who know nothing about computers. What's the point of a refresh if the effort could be better used to improve the next gen instead? This is pure capitalism at work: flood the market with something that does 1%-5% better when the initial release is more than good enough to hold you over until the next release. It's been more than proven that just because people buy it doesn't mean it's a good deal. Or a good idea.

Granted 3 is maybe a tad limited, but I wouldn't go above 5 at the most, and even then there's some that could objectively be removed and prices adjusted accordingly.

- Joined

- Mar 7, 2008

> I see the waste when I see them still on the shelves or in warehouses, unused years after being released, and then sold for pennies, thrown in the scrap or finally used in those Dell "gaming" garbage heaps they use to scam people who know nothing about computers.

Being on the shelves is a good and normal state of affairs. Don't let the shortage era distort your view into thinking that if it is in stock, it isn't selling. There was a period after the mining crash where there was some older gen stock hanging around, but apart from that, things feel back to the old normal now. If I can get GPUs for pennies, I'm up for it, if you could point me in the right direction.

> What's the point of a refresh if the effort could be better used instead to improve the next gen?

The people designing the next gen are not the people who are producing the refresh. The refresh design is essentially already done. Configure the silicon appropriately, add new definitions in the driver package along with the firmware. The rest is collateral.

- Joined

- Feb 25, 2004

- Location

- N of splat W of Torin

I'm loling at Rainless post. I have 6 cards 4070 Ti or higher and none of them are being used for gaming. Hardly anyone games at 4K.

Even my laptop has a 4070...

> I'm loling at Rainless post. I have 6 cards 4070 Ti or higher and none of them are being used for gaming. Hardly anyone games at 4K.
>
> Even my laptop has a 4070...

My TV can only do 60Hz FreeSync at 4K, 120Hz at 1440p. Going from 1440p to 4K is not a massive leap in terms of 'feel', even on a 55-inch TV. VHS to DVD was obvious, as was SD to 1080p TVs. I think it's pretty much the same with hi-res music compared to CD quality: not a big enough leap in terms of 'feel'...

- Joined

- Mar 7, 2008

I use a 4k 55" TV for gaming. I don't have the hardware to render native 4k output at a decent frame rate, so upscaling fills in that gap very well. On a 4k display, DLSS quality is rendering at 1440p. Benefit of integrated upscalers is they can apply to some parts of the game and not others. Often UI elements can be rendered at native so you still have a better experience than merely running 1440p.
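For anyone wanting to check the 1440p figure, here is a minimal sketch of the arithmetic. The per-axis scale factors are the commonly cited defaults for each DLSS mode, not something stated in this thread, and individual games can deviate from them. `DLSS_SCALE`, `render_resolution` and `pixel_fraction` are hypothetical names used only for illustration.

```python
from fractions import Fraction

# Commonly cited per-axis render scale for each DLSS mode.
# Assumed values for illustration; individual games may use other ratios.
DLSS_SCALE = {
    "quality": Fraction(2, 3),
    "balanced": Fraction(58, 100),
    "performance": Fraction(1, 2),
    "ultra_performance": Fraction(1, 3),
}


def render_resolution(out_w, out_h, mode):
    """Return the internal (width, height) rendered before upscaling."""
    scale = DLSS_SCALE[mode]
    return round(out_w * scale), round(out_h * scale)


def pixel_fraction(mode):
    """Fraction of the output pixel count that is actually rendered."""
    return float(DLSS_SCALE[mode] ** 2)


if __name__ == "__main__":
    for mode in DLSS_SCALE:
        w, h = render_resolution(3840, 2160, mode)
        print(f"{mode}: {w}x{h} ({pixel_fraction(mode):.0%} of native pixels)")
```

With these assumed factors, Quality mode on a 3840x2160 display renders at 2560x1440, which is 4/9 (about 44%) of the native pixel count.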

I do find 4k to be a tricky resolution. It's been standard on most TVs for a long time now so chances are if you have a modern-ish TV you have a 4k display. Not everyone connects a PC to their TV but consoles have to deal with it. If consoles can do it, comparable PCs can do it.

BTW I saw an Asus ROG Strix whatever 4080 not-Super used at a local store today, at the bottom end of current NEW price of 4080/Supers. Could use a bit more power than a 4070 and the store warranty is probably worth more than Asus warranty too

> I use a 4k 55" TV for gaming. I don't have the hardware to render native 4k output at a decent frame rate, so upscaling fills in that gap very well. On a 4k display, DLSS quality is rendering at 1440p. Benefit of integrated upscalers is they can apply to some parts of the game and not others. Often UI elements can be rendered at native so you still have a better experience than merely running 1440p.
>
> I do find 4k to be a tricky resolution. It's been standard on most TVs for a long time now so chances are if you have a modern-ish TV you have a 4k display. Not everyone connects a PC to their TV but consoles have to deal with it. If consoles can do it, comparable PCs can do it.
>
> BTW I saw an Asus ROG Strix whatever 4080 not-Super used at a local store today, at the bottom end of current NEW price of 4080/Supers. Could use a bit more power than a 4070 and the store warranty is probably worth more than Asus warranty too

It's still difficult to get 4K movie streaming up to the standard of 4K Blu-ray. 4K Blu-ray is better, but there's not a lot in it; it depends on your internet speed and how much of the bandwidth other people are using...

I had thought upscaling/frame generation was for integrated graphics/APUs. I want the 'real deal': real frames and real pixels. If I spend big money, I want a real beef burger, none of that fake artificial meat. For me, consoles are McDonald's...

- Joined

- Mar 7, 2008

> It's still difficult to get 4K movie streaming up to standard of 4K Blu-ray. It's (4K Blu-ray) better but not a lot in it; depends on your internet speed and how much of the bandwidth other people are using...

I had to look this up. This is likely to be bitrate limited. The following data might be out of date. Blu-ray could be between 92-144Mbps. Apple streaming averages up to 30Mbps on the higher end, with other services like Netflix and Prime somewhere lower. Sony apparently have a premium service at 80Mbps, so in theory that should be close.
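To put those figures side by side, here is a rough sketch using only the numbers quoted above (which may be out of date, as noted). It treats each bitrate as a sustained rate, which is an assumption made purely for illustration; real discs and streams vary over time. The names `BITRATES_MBPS`, `gigabytes_per_hour` and `ratio_to_bluray_low` are made up for this example.

```python
# Bitrates in megabits per second, as quoted in the post (possibly out of date).
BITRATES_MBPS = {
    "4K Blu-ray (low)": 92,
    "4K Blu-ray (high)": 144,
    "Apple (high end)": 30,
    "Sony premium": 80,
}


def gigabytes_per_hour(mbps):
    """Convert megabits per second to decimal gigabytes per hour."""
    return mbps * 3600 / 8 / 1000


def ratio_to_bluray_low(mbps):
    """Bitrate relative to the low-end Blu-ray figure quoted in the post."""
    return mbps / BITRATES_MBPS["4K Blu-ray (low)"]


if __name__ == "__main__":
    for name, mbps in BITRATES_MBPS.items():
        print(
            f"{name}: {gigabytes_per_hour(mbps):.1f} GB/h, "
            f"{ratio_to_bluray_low(mbps):.0%} of the low Blu-ray figure"
        )
```

On these numbers, a 30Mbps stream carries about a third of the data of the low-end Blu-ray figure, while the 80Mbps service gets to roughly 87%.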

> Upscaling/frame generation I had thought it was for integrated graphics/APUs. I want the 'real deal'; real frames and real pixels. If I spend big money, I want a real beef burger none of that fake artificial meat. For me consoles are McDonalds...

Upscaling/FG are tools like other settings to balance visual quality and performance. Even if you have a 4090 you could choose to deploy it depending on your intentions.

From what you wrote, do you use 1440p mode on your 4k TV? Something has to upscale in the process, if not in your computer then the display has to do it. IMO using DLSS to scale 1440p to 4k and displaying that probably looks better than sending native 1440p to a 4k display. FSR has more problems so I might not say the same for that. I actually used XeSS in the game I'm playing right now because it looks even better than DLSS. This may vary from title to title so I wouldn't hold it as a rule.

I'm still not routinely using FG yet. The game I'm playing right now doesn't really gain a lot of FPS from it for whatever reason. Both NV and AMD solutions here seem to work similarly, although AMD's is again held back by more obvious problems in their upscaler.

> I had to look this up. This is likely to be bitrate limited. Following data might be out of date. Bluray could be between 92-144Mbps. Apple streaming averages up to 30Mbps on the higher end, with other services like Netflix and Prime somewhere lower. Sony apparently have a premium service at 80Mbps, so in theory that should be close.
>
> Upscaling/FG are tools like other settings to balance visual quality and performance. Even if you have a 4090 you could choose to deploy it depending on your intentions.
>
> From what you wrote, do you use 1440p mode on your 4k TV? Something has to upscale in the process, if not in your computer then the display has to do it. IMO using DLSS to scale 1440p to 4k and displaying that probably looks better than sending native 1440p to a 4k display. FSR has more problems so I might not say the same for that. I actually used XeSS in the game I'm playing right now because it looks even better than DLSS. This may vary from title to title so I wouldn't hold it as a rule.
>
> I'm still not routinely using FG yet. The game I'm playing right now doesn't really gain a lot of FPS from it for whatever reason. Both NV and AMD solutions here seem to work similarly, although AMD's is again held back by more obvious problems in their upscaler.

Hmmm, you're probably right. I saw a video on the tube using the LukeFZ FSR2FSR3 mod (it can convert any FSR2 game to FSR3 frame creation and upscaling) to play Cyberpunk 2077 Overdrive path tracing @4K with decent frame rates (late 40s to early 50s) on a 7900 XTX. Don't think I'll bother on my overclocked/undervolted 6900 XT, which is close to a 3080 in ray tracing and a 4070 Ti Super in raster 3D...

- Joined

- Jan 4, 2024

- Location

- Indiana

> I'm loling at Rainless post. I have 6 cards 4070 Ti or higher and none of them are being used for gaming. Hardly anyone games at 4K.
>
> Even my laptop has a 4070...

I so agree. I play at 1440p and I am going to get the 4070 Ti. The $$ is good and so is the performance at 1440p.
