I tested. I tested some more. Had a mug of tea, then went back for more testing. Then I made some charts and here are the results!

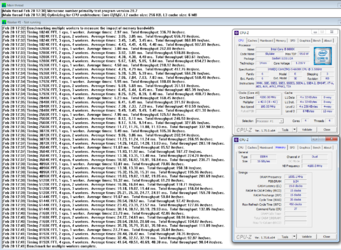

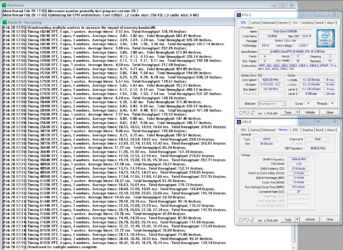

Test system:

CPU: i7-6700k at 4.2 GHz, ring at 4.1 GHz, HT off

Mobo: MSI Gaming Pro, bios 1.7

GPU: 9500 GT (just to make sure no ram bandwidth is stolen by integrated graphics)

RAM: two kits were used for the results presented

G.Skill F4-3333C16-4GRRD Ripjaws 4, 4x4GB kit

G.Skill F4-3200C16-8GVK Ripjaws V, 2x8GB kit

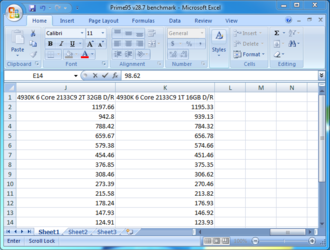

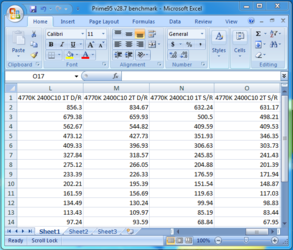

Testing was performed using the built-in benchmark of Prime95 28.7 in Windows 7 64-bit. Each setting was run once, after the PC had been given time to settle down after rebooting. All test configurations had the ram in dual channel mode. Timing values listed are ordered CAS-RCD-RP-RAS, as commonly shown in most software.

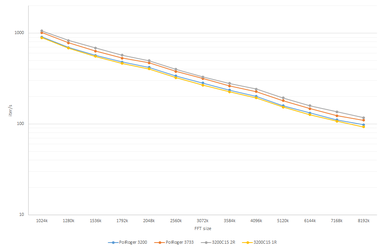

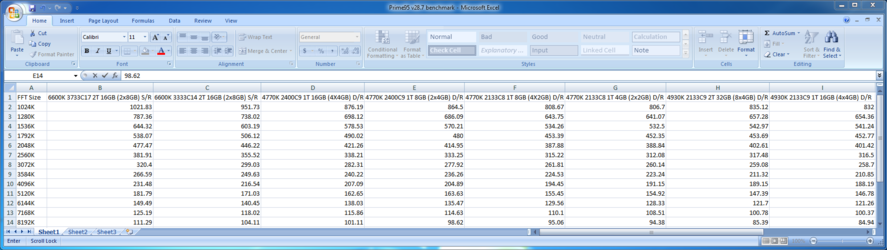

Most testing was with all 4 modules of the Ripjaws 4 kit fitted, for reasons discussed later. This ram is known from previous experience not to boot in this mobo at 3333 with 4 modules fitted, so I tested at common ram speeds from 2133 to 3200. To avoid complicating matters, timings were fixed at 16-18-18-38 for the scaling tests. This may disadvantage the slower speeds, since they would typically run tighter timings in practice. Latency is considered separately later.
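For a sense of scale, here is a rough sketch of the theoretical peak bandwidth at the ram speeds named in this post. It assumes the standard 64-bit (8 byte) data bus per DDR4 channel and two channels. The function name is made up for illustration, and these are ceilings on paper, not anything measured in this testing.

```python
# Theoretical peak bandwidth for dual channel DDR4.
# Assumption: 64-bit (8 byte) data bus per channel, 2 channels populated.

BYTES_PER_TRANSFER = 8   # one 64-bit transfer per channel
CHANNELS = 2             # dual channel mode, as used in all tests here


def peak_bandwidth_gbs(data_rate_mts, channels=CHANNELS):
    """Return theoretical peak bandwidth in GB/s (1 GB = 1e9 bytes)."""
    return data_rate_mts * 1e6 * BYTES_PER_TRANSFER * channels / 1e9


if __name__ == "__main__":
    # Speeds mentioned in the post (MT/s)
    for speed in (2133, 2400, 2666, 2800, 3200):
        print(f"DDR4-{speed}: {peak_bandwidth_gbs(speed):.1f} GB/s peak")
```

Real throughput will be lower than these figures, but the ratio between speeds gives an idea of the most a purely bandwidth-bound workload could gain.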

As the clock increases we see no significant difference in performance. This is not ram limited.

There is a slight increase in performance here as ram clocks go up, but not much.

Now we are starting to see something happen.

And here we see a clear relationship between ram speed and performance.

Here we alter the display a bit so we can compare ram timings. 3 speeds are tested. Actually, two of these are not exciting. At 3200 the results for 15-16-16-36 and 16-18-18-38 are practically identical. At 2800, 14-16-16-36 gave a 1% average advantage over 16-18-18-38, but this is so small it is hard to say whether it is just measurement variation. It gets a little more interesting at 2133, where 3 timing sets were tested: 14-14-14-35, 15-15-15-35, and 16-18-18-38. The last one is on average 4% slower than the other two, which performed the same. This may be an area for future research, although it seems ram speed matters more to performance. Timings might get you a little more as a secondary optimisation.
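To put the timing sets above on a common footing, CAS latency can be converted from clock cycles to nanoseconds. This is a minimal sketch using the usual conversion (the memory clock is half the data rate, so latency in ns is CL x 2000 / data rate). It only looks at the first timing value and ignores RCD, RP and RAS, so treat it as a rough guide and not an explanation of the results. The function name is hypothetical.

```python
# Convert CAS latency from cycles to nanoseconds.
# DDR transfers twice per clock, so clock (MHz) = data rate (MT/s) / 2
# and latency_ns = CL / clock_MHz * 1000 = CL * 2000 / data_rate.


def cas_latency_ns(data_rate_mts, cas_cycles):
    """Return the CAS latency in nanoseconds."""
    return cas_cycles * 2000.0 / data_rate_mts


if __name__ == "__main__":
    # (data rate, CAS) pairs taken from the timing sets compared above
    tested = [
        (3200, 15), (3200, 16),
        (2800, 14), (2800, 16),
        (2133, 14), (2133, 15), (2133, 16),
    ]
    for rate, cl in tested:
        print(f"DDR4-{rate} CL{cl}: {cas_latency_ns(rate, cl):.2f} ns")
```

Note that in absolute terms the CL16 setting at 2133 is the slowest CAS of anything tested, which may or may not be related to it showing the only clear drop.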

Putting the ram to one side, how does CPU speed affect performance? These 4 lines show the combinations of CPU at 3.5 and 4.2 GHz, with 1 or 4 workers active.

With one worker, the scaling is near perfect with the faster CPU 19% faster, compared to 20% for ideal clock scaling.

With 4 workers, it would seem the ram is the limit. We only see 4% increase for the 20% clock increase. This may present opportunities for power saving as the higher clock doesn't help here. It may be interesting to see how scaling applies over a wider range of CPU speeds.
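The scaling figures above can be expressed as an efficiency: the observed gain divided by the gain you would get from ideal clock scaling. A small sketch, using only the numbers quoted in this post; the function name is made up for illustration.

```python
# Clock scaling efficiency = observed speedup / ideal speedup from clock alone.


def scaling_efficiency(observed_gain, clock_low_ghz, clock_high_ghz):
    """Return the fraction of the ideal clock gain actually achieved.

    observed_gain is a fraction, e.g. 0.19 for "19% faster".
    """
    ideal_gain = clock_high_ghz / clock_low_ghz - 1.0
    return observed_gain / ideal_gain


if __name__ == "__main__":
    # Gains reported above for 3.5 GHz -> 4.2 GHz
    for workers, gain in ((1, 0.19), (4, 0.04)):
        eff = scaling_efficiency(gain, 3.5, 4.2)
        print(f"{workers} worker(s): {eff:.0%} of ideal clock scaling")
```

With the values above this works out to about 95% of ideal for 1 worker and about 20% for 4 workers, which is the gap that suggests the ram is the limit in the 4 worker case.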

And finally, this is the cause of some unexpected behaviour I saw. I had two comparable systems, but I saw a massive performance difference between them which I struggled to explain. I tried various things and even wrongly blamed the mobo for being rubbish, but it would seem module rank has a major influence. This isn't commonly discussed or even specified. I found Thaiphoon Burner, free software that can read it. The Ripjaws 4 modules are single rank, and the Ripjaws V modules are dual rank (caution: other parts in the series may vary!). General consensus seems to be that having more ranks can slightly increase bandwidth, at the cost of slightly higher latency.

This chart is going to take some explaining. The chart again shows the 4 worker throughput. The grey line is the Ripjaws V kit, and the light blue line is the Ripjaws 4 kit with 4 modules fitted, both at 3200. So each memory channel has a total of 2 ranks, and performance is so close to identical you can't see the light blue line under the grey line! So far so good? Let's take two of the Ripjaws 4 modules out, leaving it running in dual channel mode. Logically, this shouldn't make a difference. It is still 2 channels, running at the same clock and timings. Nope. We see a 19% drop in performance (orange line). This is massive! How massive? The yellow and blue lines are 4 modules running at 2666 and 2400 respectively, and they go neatly either side of the orange line. That is quite a performance drop!
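The three configurations above can be tallied by ranks per channel, which makes the odd one out easier to see. A minimal sketch: the module counts and ranks are as described in this post, and it assumes the modules are split evenly across the two channels (which dual channel mode implies). The names are hypothetical.

```python
# Count memory ranks per channel for each configuration tested.
# Assumption: modules are distributed evenly over the channels.

CHANNELS = 2


def ranks_per_channel(modules, ranks_per_module, channels=CHANNELS):
    """Return how many ranks sit on each memory channel."""
    total_ranks = modules * ranks_per_module
    return total_ranks // channels


if __name__ == "__main__":
    # name: (number of modules, ranks per module), as reported in the post
    configs = {
        "Ripjaws V 2x8GB (dual rank)": (2, 2),
        "Ripjaws 4 4x4GB (single rank)": (4, 1),
        "Ripjaws 4 2x4GB (single rank)": (2, 1),
    }
    for name, (modules, ranks) in configs.items():
        print(f"{name}: {ranks_per_channel(modules, ranks)} rank(s) per channel")
```

The two configurations that performed identically both come out at 2 ranks per channel, and the one that lost 19% is the only one at 1 rank per channel. That fits the rank explanation, though it doesn't rule out other causes.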

The tentative conclusion is that it is worth having 2 ranks per channel, either by buying dual rank modules or by running more single rank modules. Otherwise you will reduce your potential performance significantly. Unfortunately it doesn't seem that easy to find out what rank a module is before buying it.

Ideally more testing could be done to make sure it is the rank, and not something else. I'd need for example 8GB modules with single rank to make sure the module capacity isn't in some way influencing it. Or alternatively, 4GB modules with dual rank.

I have quite a lot of data from this testing, so if there are different ways the data could be cut, I could have a go at showing it.
