Hi, I recently upgraded from an MSI 1080 to an Aorus 1080ti because I managed to get a pretty good deal on it. It's a used card, so I ran a lot of stress tests, and everything looks good. One weird issue I've been having is that it refuses to work over HDMI. Allow me to explain the situation:

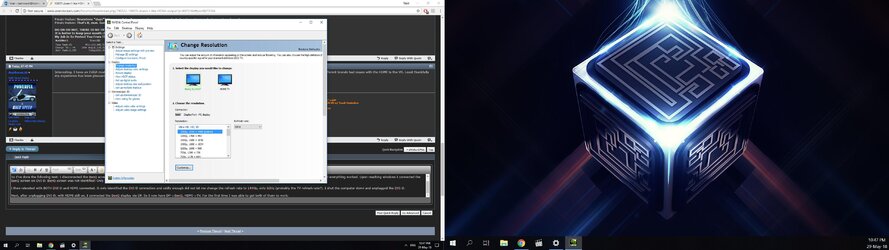

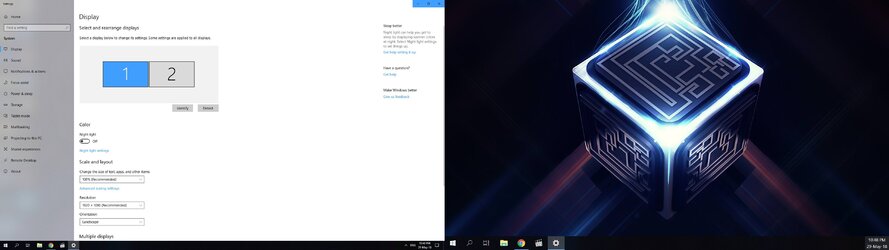

My 1080ti connects to a BenQ 144Hz 1080p screen with a DVI-D cable. It works well and reaches the expected resolution and refresh rate. I can also connect it with a DisplayPort cable, which works with the screen too, but I prefer the DVI-D one. I recently tried connecting the GPU to an HDMI TV with an HDMI-to-HDMI cable and got no signal. It should be noted that the TV works with a laptop and worked with my old 1080 using the same cable. I thought that was odd, so I did another test: this time I connected the GPU to my BenQ screen with the HDMI cable (the screen has an HDMI input as well) and again got no signal. So I then thought, "Well, OK, I guess maybe the HDMI output of the GPU is bad", and I bought a DisplayPort-to-HDMI adapter (the DisplayPort end goes into the GPU) annnd... no signal. I have no other devices with a DP output, so I cannot test the adapter on its own.

Allow me to sum up the problem and my attempted solutions:

The Problem: Unable to get a signal from the 1080ti on an HDMI TV.

Attempted Solutions:

1. Connect TV to GPU with different HDMI cable - failed

2. Connect other devices to TV with HDMI - worked

3. Connect a different screen to GPU with HDMI - failed

4. Connect a different screen to GPU with DVI-D/DP - worked

5. Connect TV to GPU with a DP-HDMI adapter - failed

Just wondering if someone has ever run into that sort of thing?
