Article on RAID0 for SSDs

- Thread starter SteveLord

I run 5x 250GB Samsung 970 Evo NVMe in RAID0 in my gaming PC ... just because I had 5x 250GB SSDs left over after tests that aren't worth selling, and the motherboard has 5x M.2 3.0/4.0 slots without any weird add-on cards. Btw, it's a Gigabyte Z690 Master. If not for the RAM support, I would say it's a great mobo.

You'll throw in a random, unsolicited barb towards Z690 Gigabyte, but not share your RAID results?

- Joined

- Feb 18, 2010

I run 4 PCIe SSDs in RAID0 using Intel's RAID on CPU (VROC) with the key. It does in fact increase performance substantially compared to a single drive. When you simply rely on the RAID controller built into a board, you are potentially limiting your overall performance, depending on the controller's bandwidth, its quality, and whether any cache is involved. Most people will never reach the threshold in normal use that requires such storage. But if you run multiple virtual machines or do heavy rendering workloads, it can be very beneficial.

For sure there are tasks where RAID is the better option. However, there is one problem with VROC: it performs really badly in low-queue random operations, which matter when you work with a lot of small files. If you run CrystalDiskMark, you will see that RAID0 on VROC performs worse in these tests than a single NVMe SSD, or even worse than a single SATA SSD (depending on the motherboard and the SSD used). It also only scales well up to 3-4 SSDs in sequential bandwidth. I tested it every way I could, with an ASUS Hyper card and directly on the motherboard. I also argued with Intel and proved them wrong, as their support was clueless about their own solutions. There is only very limited info about it on Intel's website and in their documents.

The latest desktop Intel chipsets have an improved NVMe RAID mode. I have 5x NVMe in RAID0 on Z690 and it scales well across all 5 SSDs, with ~90MB/s low-queue 4K read (VROC on X299 was ~30MB/s with 4 of the same SSDs). So low-queue 4K bandwidth is still nothing special, as it's not much better than a single NVMe SSD, but it's much better than with VROC.

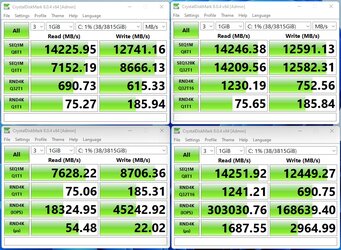
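To illustrate why low-queue 4K reads gain so little from RAID0, here is a rough sketch of how striping maps a request onto member drives. The 128 KiB stripe size and the function name are assumptions made for illustration only, not the settings of the arrays discussed above, and the model ignores controller and driver overhead entirely.

```python
def raid0_members_touched(offset, length, stripe_size=128 * 1024, n_drives=5):
    """Return the set of member-drive indices that one read touches.

    stripe_size and n_drives are illustrative assumptions, not measured settings.
    """
    first_stripe = offset // stripe_size
    last_stripe = (offset + length - 1) // stripe_size
    # Stripes are laid out round-robin across the member drives.
    return {stripe % n_drives for stripe in range(first_stripe, last_stripe + 1)}


# A single aligned 4 KiB read falls inside one stripe, so one drive serves it.
small = raid0_members_touched(offset=4096 * 10, length=4096)
# A 1 MiB sequential read spans 8 stripes and spreads over all 5 drives.
large = raid0_members_touched(offset=0, length=1024 * 1024)

print(sorted(small))  # [0]
print(sorted(large))  # [0, 1, 2, 3, 4]
```

With only one small request in flight at a time, just one drive is busy, so the array cannot be faster than that drive, and any extra RAID layer latency shows up directly in the result.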

I just reinstalled the OS on 2x NVMe in RAID0. Here are CrystalDiskMark results for 2x 2TB NVMe PCIe 4.0 SSDs (Innogrit controller) in RAID0 on Z690 and Win11. Win11 still has performance problems, so expect better results on Win10 or Server 2019. These are results on the OS drive, so they can be a bit lower than expected.

Default/Default, Default/NVMe, NVMe/Real World Performance, NVMe/Peak Performance

- Joined

- Oct 16, 2014

- Location

- Allagash, Maine

Would this disk array be able to saturate a 10Gb LAN connection when transferring a single large file?

10GbE is about 1.25GB/s, so it's not that much, and it will never run at the max bandwidth. In my tests, 10GbE LAN runs at about 8.5-9Gbps max. In theory, the array is 10 times faster, but in reality there are other things that will slow it down. It will still be well above the 10GbE LAN bandwidth.
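For reference, the conversion behind those numbers is just bits to bytes. A quick sketch (the helper name is made up for illustration, and it ignores protocol overhead beyond the observed 8.5-9Gbps figure):

```python
def gbps_to_gigabytes_per_s(gbps):
    """Convert a link rate in gigabits per second to gigabytes per second."""
    return gbps / 8


line_rate = gbps_to_gigabytes_per_s(10)      # nominal 10GbE
observed_low = gbps_to_gigabytes_per_s(8.5)  # lower end seen in the tests above
observed_high = gbps_to_gigabytes_per_s(9)   # upper end seen in the tests above

print(line_rate, observed_low, observed_high)  # 1.25 1.0625 1.125
```

So in practice the network tops out a little above 1GB/s, which is what the storage on both ends has to sustain for a single large file.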

The PCIe 5.0 SSDs that will be out in a couple of months will offer about the same max bandwidth as 2x PCIe 4.0 in RAID0. Probably more with the next generations of SSD controllers, but it's hard to say how high it will go. The first results appeared some time ago and, sadly, at least the Phison controllers in PCIe 5.0 SSDs do not perform better in random operations than current PCIe 4.0 SSDs.
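The "one PCIe 5.0 drive is roughly two PCIe 4.0 drives" comparison follows from the link speeds. Here is a back-of-the-envelope sketch using the per-lane transfer rates and 128b/130b encoding of PCIe 3.0 through 5.0. These are theoretical x4 link ceilings, not what any particular SSD delivers, and the function name is only illustrative.

```python
# Raw transfer rate per lane in GT/s for each PCIe generation.
GT_PER_LANE = {"3.0": 8, "4.0": 16, "5.0": 32}
# PCIe 3.0 and later use 128b/130b line encoding.
ENCODING = 128 / 130


def pcie_link_gb_per_s(gen, lanes=4):
    """Theoretical link bandwidth in GB/s, before protocol overhead."""
    return GT_PER_LANE[gen] * ENCODING * lanes / 8


for gen in ("3.0", "4.0", "5.0"):
    print(f"PCIe {gen} x4: {pcie_link_gb_per_s(gen):.2f} GB/s")
# PCIe 3.0 x4: 3.94 GB/s
# PCIe 4.0 x4: 7.88 GB/s
# PCIe 5.0 x4: 15.75 GB/s
```

Real drives land below these ceilings, and as noted above, the extra sequential bandwidth says nothing about random performance.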

- Joined

- Oct 16, 2014

- Location

- Allagash, Maine

So basically a single PCIe 5.0 NVMe storage device has the potential to saturate a 10Gb LAN connection with a single file, without the need for a RAID array?

Also, what could I use as a comparison in real world use distinguishing between sequential rw numbers and random rw numbers?

Run the PCMark 10 or 3DMark storage tests. They perform mixed-load tests based on popular applications, so they can tell you something about real-world performance. The fastest single NVMe PCIe 4.0 x4 SSD reaches about 700MB/s in the 3DMark storage test, and this is a mixed test.

In theory, a single NVMe PCIe 3.0 x4 SSD is still at least twice as fast as 10GbE in sequential bandwidth, and also in most random operations.

- Joined

- Jan 27, 2011

- Location

- Beautiful Sunny Winfield

I ran 4 850 EVOs in RAID0 for a bit. At first it was on a RAID controller, but that one didn't support TRIM, so I switched to ZFS RAID0 (Linux, of course). It worked fine until one of the drives failed. It was the kind of failure where the drive would power up OK and then stop responding some time later. That allowed me to back up everything. (My regular backups only cover "important" stuff.) Samsung replaced it with a refurb 860 EVO, I bought another 850 EVO, and I now run them in RAIDZ1. In theory that gives me the performance of a single drive, so I gave up some.

Recently at work, 2x 2TB Samsung 980 Pro died. Both had been working together in RAID1 for about a year and had maybe 150TB written (far from the rated max, and still at 100% health). Samsung replaced both, but the RMA took over a month. It was a weird problem: the OS was showing a corrupted file system error and it wasn't possible to do anything with these drives (even a full erase via BIOS didn't work).

I generally dislike RAID on AMD. There are sometimes weird problems, and it's not possible to recover the data, even from RAID1, without the proper drivers and chipset. With Intel it's much easier, as one of the RAID 1/10 drives is still visible on another PC with the standard drivers built into the OS.

There is also one other solution. If you don't need a bootable array, Windows dynamic volumes perform better than Intel VROC and about the same as Intel/AMD RAID on desktop motherboards, and you can move the drives to another PC by simply putting them online, as the driver is in the OS. I didn't check whether it supports TRIM, but I think so, as the drives are visible as single drives and the driver used is standard AHCI.

- Joined

- Feb 7, 2003

You can boot from Windows dynamic disks. Several times now I have set up RAID1 within Windows Disk Management using dynamic disks. Boots fine.

- Joined

- Feb 7, 2003

Yes, but afaik you can't create a dynamic volume and install Windows on that volume. You can install Windows on a single drive and then add it to the dynamic volume group, and then it will boot and have its copy.

Yes, exactly. That is correct.
