Hi all.

Please bear with me.

I have an ASUS ROG Z690-E with an Intel 12900K. Its main M.2 slot (labelled M.2_1) and the main x16 PCIe slot are Gen 5.

However, when the main x16 PCIe Gen 5 slot is occupied (I have a GPU installed there) and the M.2_1 slot is occupied at the same time (I have an M.2 SSD in it), the x16 slot is forced to run at x8 Gen 5, which would be equal in bandwidth to x16 Gen 4. My GPU maxes out at x16 PCIe Gen 4 anyway, so no problem.
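For reference, here is the rough arithmetic behind that comparison as a quick Python sketch. The per-lane rates are the standard PCIe figures as I understand them (8, 16 and 32 GT/s for Gen 3, 4 and 5, all with 128b/130b encoding); the function name is just something I made up for illustration.

```python
# Approximate one-direction PCIe bandwidth in GB/s.
# Assumption: standard per-lane transfer rates and 128b/130b encoding
# (Gen 3 and later); protocol overhead beyond encoding is ignored.
GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}
ENCODING = 128 / 130  # usable bits per transferred bit


def pcie_bandwidth_gbs(gen, lanes):
    """Return the approximate bandwidth in GB/s for a generation and lane count."""
    return GT_PER_S[gen] * ENCODING * lanes / 8  # divide by 8: bits -> bytes


for gen, lanes in [(5, 8), (4, 16), (4, 8)]:
    print(f"Gen {gen} x{lanes}: {pcie_bandwidth_gbs(gen, lanes):.2f} GB/s")
```

By this estimate x8 Gen 5 and x16 Gen 4 both come out at about 31.5 GB/s, while the x8 Gen 4 link that GPU-Z reports is about half of that.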

But I'm a bit confused. GPU-Z says I'm running at only x8 PCIe Gen 4 and tells me I'm not running at full potential speed; I've attached a screenshot.

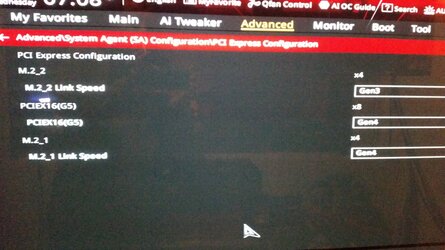

My BIOS screenshot shows where I tell it which Gen to run at (the "PCIEX16(G5)" entry in the pic is the main x16 PCIe Gen 5 slot I'm referring to).

I set this to Gen 4 first, but when I saw GPU-Z reporting only x8 PCIe Gen 4, I changed it to Gen 5. GPU-Z still reports the same x8 PCIe Gen 4 connection either way.

How do I get it to give me x8 PCIe Gen 5 / x16 PCIe Gen 4? Or is there a good way to check, or a test I can run, to find out what actual speed/Gen my GPU is running at, other than GPU-Z?
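One cross-check I can think of, sketched below in Python, assumes the card is an NVIDIA GPU with `nvidia-smi` on the PATH (neither is a given here). It asks the driver for the current and maximum link generation and width. The helper names are hypothetical; the query fields are the ones `nvidia-smi --help-query-gpu` lists.

```python
import subprocess

# Query fields understood by nvidia-smi (assumes an NVIDIA GPU and driver).
FIELDS = [
    "pcie.link.gen.current",
    "pcie.link.width.current",
    "pcie.link.gen.max",
    "pcie.link.width.max",
]


def parse_link_info(csv_line):
    """Turn one CSV line such as '4, 8, 4, 16' into a field -> int mapping.

    Assumes every field is numeric; a value like 'N/A' would raise ValueError.
    """
    values = [int(v.strip()) for v in csv_line.split(",")]
    return dict(zip(FIELDS, values))


def query_link_info():
    """Run nvidia-smi and return the link info for the first GPU listed."""
    result = subprocess.run(
        [
            "nvidia-smi",
            "--query-gpu=" + ",".join(FIELDS),
            "--format=csv,noheader,nounits",
        ],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_link_info(result.stdout.strip().splitlines()[0])
```

Calling `query_link_info()` while the GPU is busy is probably the fairer reading, since many cards drop the link to a lower generation at idle to save power.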

The MB manual simply says "1 x PCIe 5.0 x16 slot == When M.2_1 is occupied with SSD, PCIEX16(G5) will run x8 mode only".

Appreciate your time!
