Hi,

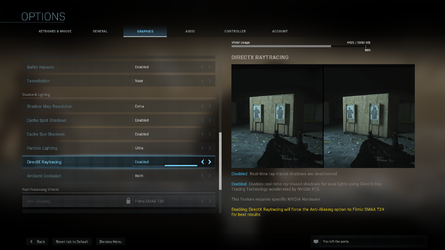

Just bought Modern Warfare Remastered in the Steam sale. I'm trying to switch on ray tracing but can't find any option for it under Graphics. My setup is an MSI Tomahawk, MSI 6900 XT Gaming X Trio, 5800X3D and 16 GB DDR4, and my drivers are up to date. Can anybody help?

Thanks

Haider

AMD's 6000/7000-series cards are rasterization monsters, and Nvidia cards have higher driver overhead. The only place you're missing out is ray tracing, and to be fair, only a handful of games do it well. Your 6900 XT should be more than good enough for many years to come...