- Joined

- Oct 18, 2005

- Location

- Chicago Burbs

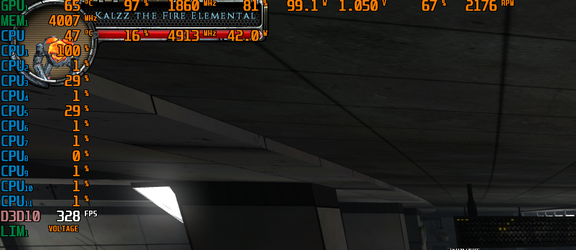

In my never-ending quest to be fully prepared for summer bake sessions in my room by minimizing the unnecessary heat my new system puts out, I've now turned my attention to my video card, a lowly (by your standards) PNY GTX 1060 3GB. I've been playing Hellgate: London lately, and with the jump from a 7700K to a 12700K, sustained FPS went from 65-93 to about 250-430, and low spikes in massive battles went from the 29-43 range (WITH annoying blows to game responsiveness in the worst cases) to around 130-190 with absolutely flawless responsiveness. It should be noted that this game is single-threaded, and the 12700K has a 44% single-thread boost over the 7700K. Under the 7700K regime, this card was waiting on a fully saturated CPU core and only using part of its GPU capacity. Under the new regime, it appears the GTX 1060 and one of the CPU's P-cores are taking turns bottlenecking each other, both generally hovering around fully saturated, the ideal scenario if there ever was one.

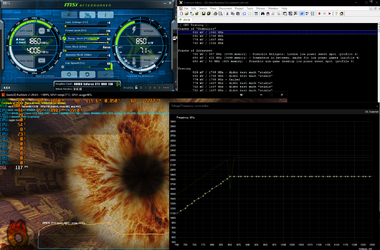

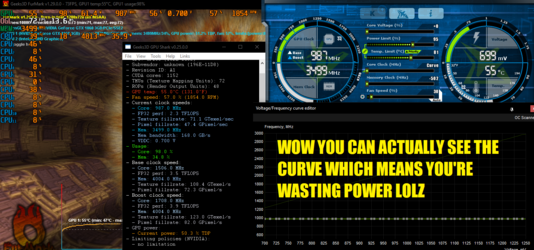

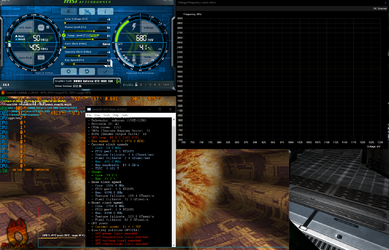

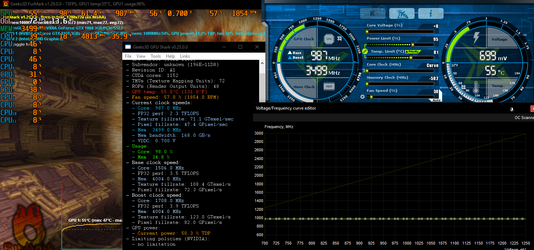

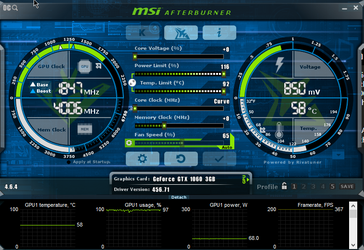

Embarrassingly enough, I've never played around with the video card settings, and now I think I should have. By now I could have saved a lot on power bills and heat misery over past summers.

As I write this, I'm testing it in the game at 1847 MHz, which is close to its peak frequency under load at stock settings, but instead of the 1047 mV it normally applies, it's at 850 mV. And I still haven't found the undervolt limit! To me this is insane. It's literally going from using around 110 watts to 69 watts, WITHOUT UNDERCLOCKING. What's even more insane is that if I just keep the stock settings but set the power limit slider to 50%, it uses only a few watts less and runs at a much lower GPU frequency. To be fair, given how evenly balanced the GPU and CPU are in this game, even at that lowered frequency the FPS reduction isn't more than 10-15%, which is negligible for this scenario.
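As a rough sanity check on those numbers, here is a minimal sketch that assumes the textbook approximation that dynamic power scales with voltage squared times frequency. The function name and the `freq_ratio` parameter are made up for illustration, and the model ignores leakage, memory, and everything else on the board, so treat the result as a ballpark only.

```python
def estimate_power(stock_watts, stock_mv, new_mv, freq_ratio=1.0):
    """Estimate power after a voltage change, assuming P scales with V^2 * f.

    This is a simplification: real board power also includes memory,
    fans, VRM losses, and leakage that do not follow this rule exactly.
    """
    voltage_ratio = new_mv / stock_mv
    return stock_watts * voltage_ratio ** 2 * freq_ratio


# Figures from the post: ~110 W at 1047 mV, undervolted to 850 mV, same clock.
estimate = estimate_power(110.0, 1047.0, 850.0)
print(f"Estimated draw at 850 mV: {estimate:.1f} W")
```

Under that assumption the estimate lands within a few watts of the 69 W I'm actually seeing, so the drop looks plausible rather than too good to be true.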

As long as my games run "too fast," my ultimate goal is not just undervolting but underclocking, to minimize power draw. However, I still plan to experiment with undervolting at normal clock rates and even overclocked rates if possible, as I'm sure at some point I will need to actually take advantage of the full performance of the card.

There are some things I'm wondering about.

1. Memory clock. I'm confused about this because it seems to be listed everywhere in terms of Gbps, as 8 or 9. I've also seen some people say their memory OCed to, for example, the 9600 MHz range. And yet I've seen others say their memory OC was to, in a typical example, the 4700 MHz range. I'm almost wondering if the memory clock shown here is actually underclocked to begin with. Either way, I plan on testing how the memory clock affects performance and power usage.

2. What would a typical vs. best-luck undervolt be for this GPU at stock, underclocked, and overclocked frequencies? I kind of feel like I'm having my cake and eating it too here. It just seems too good to be true, but maybe these results are typical, which in itself calls into question why potentially millions of cards are wasting so much power. Granted, the burden of QA can rise dramatically with tighter settings, even if there's this much headroom.

3. What is your preferred method to test the stability of undervolts/OCs? For starters I've just been using Hellgate: London and the Heaven benchmark. They both appear to tax the GPU about the same in terms of utilization and wattage, even though the game runs at 200-450 FPS and Heaven, with the settings I'm using, runs at 40-95 FPS or so. Would FurMark be a better test? I imagine these things can change with different GPU generations.

4. Have you found that custom undervolt/OC voltage/frequency curves sometimes cause stability loss in low-load situations, such as when no 3D apps/games are running and the GPU is closer to idle? I would like to push the power usage at idle and in low-load situations as far down as possible, especially given #5.

5. I have 4 monitors connected to this card, and it seems to always use around 25-27 watts even at relative idle. When I only had one monitor connected, it was only using around 6-12 watts at idle. In both situations the GPU usage hovers close to 0 at all times. I tend to wonder if the power usage numbers are thus always being inflated because of this. This also means there is less headroom before hitting the power limit if I set it low. It also seems like the card was downclocking the GPU/memory a lot at idle before I connected multiple displays, but afterward it wasn't, which I see no good reason for.

6. If you have any other general insights that might be useful to me about this endeavor, let me know.
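On #1, one possible explanation, which I'm assuming rather than having verified on this card, is that those figures are the same GDDR5 clock reported under different conventions: an effective data rate in MT/s (the "8 Gbps" per-pin number), half of that, or a quarter of that, depending on the tool. The sketch below just does that arithmetic. The function names are made up for illustration, and the 192-bit bus width is the GTX 1060's published spec.

```python
def gddr5_views(effective_mtps):
    """Show one GDDR5 speed under the conventions tools commonly report.

    Assumes the usual 4:2:1 relationship between the effective data rate,
    the double-rate figure, and the base clock.
    """
    return {
        "effective_mtps": effective_mtps,         # e.g. the "9600 MHz" style number
        "double_rate_mhz": effective_mtps / 2,    # e.g. the "4700 MHz" style number
        "base_mhz": effective_mtps / 4,           # the smallest figure some tools show
        "gbps_per_pin": effective_mtps / 1000,    # the "8 or 9 Gbps" spec-sheet number
    }


def bandwidth_gb_s(effective_mtps, bus_width_bits):
    """Peak memory bandwidth in GB/s for a given data rate and bus width."""
    return effective_mtps * 1e6 * bus_width_bits / 8 / 1e9


stock = gddr5_views(8000)
overclocked = gddr5_views(9600)
print(stock)
print(overclocked)
print(f"Stock peak bandwidth: {bandwidth_gb_s(8000, 192):.0f} GB/s")
```

If that's right, a "9600 MHz" OC and a "4700 MHz" OC are in the same ballpark, just quoted on different scales, and the clock I'm seeing wouldn't be underclocked at all.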
