- Joined

- Sep 25, 2015

Welcome to Overclockers Forums!

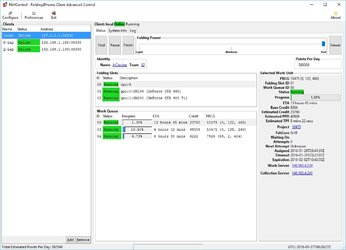

Up and Running

- Thread starter JrClocker

- Joined

- Sep 25, 2015

- Thread Starter

- #3

Won't running all the cores hurt your Ti some? I've heard you need one core free for each NVIDIA card for it to run well. I'm not liking that 588k; it means you can catch me. Not good for me, but for the team it's great. Keep up the good work and fold on.

Is it 1 core per GPU, or 1 thread per GPU?

I bumped my main CPU to 7/12 threads (should get close to 30K PPD now). With this setting, my processor is running at 80% load... (7 CPU folding threads + 2 GPU threads)/12 = 75%.
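The load estimate above, written out as a minimal sketch under one stated assumption: each GPU keeps one feeder thread pegged at 100%, and nothing else on the machine uses CPU. The function name is hypothetical.

```python
def expected_cpu_load(cpu_folding_threads: int, gpus: int, logical_cpus: int) -> float:
    """Hypothetical helper: share of logical CPUs kept busy by folding.

    Assumes every GPU pins one feeder thread at 100% and ignores OS/background load.
    """
    return (cpu_folding_threads + gpus) / logical_cpus


print(f"{expected_cpu_load(7, 2, 12):.0%}")  # 75%
```

The gap to the 80% reading is load the sketch deliberately leaves out.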

My PPD fluctuates...when I have a heavy gaming session, I have to turn off the 980 Ti!

The 660 puts off a lot of heat though...but hey it was just sitting there not doing anything.

Crossed 2,000,000 points last night!

- Joined

- Jan 10, 2012

I always run with one "core" free per gpu and ignore "threads" 99.9% of the time.

- Joined

- Sep 25, 2015

- Thread Starter

- #5

Hrm - well running the CPU folding at 7/12 threads means that I am using 4/6 cores (rounding up)...that leaves 1 core free for each GPU.
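The rounding-up argument, sketched under the assumption that the folding threads get packed two to a physical core (the OS scheduler is free to spread them out instead). The helper name and constants are illustrative, taken from the 6-core/12-thread, two-GPU setup in this thread.

```python
import math


def physical_cores_busy(folding_threads: int, threads_per_core: int = 2) -> int:
    # Worst case: with Hyper-Threading, two logical threads share one physical core,
    # so 7 threads can keep ceil(7 / 2) = 4 cores occupied.
    return math.ceil(folding_threads / threads_per_core)


PHYSICAL_CORES = 6  # 6-core i7 with HT (12 logical threads)
GPUS = 2            # 980 Ti + 660

busy = physical_cores_busy(7)
free = PHYSICAL_CORES - busy
print(busy, free, free >= GPUS)  # 4 2 True
```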

Should I try dropping the number of threads on the CPU folding, or is this good? (The machine is running 80% on the CPU right now.)

- Joined

- Jan 10, 2012

sounds like you're all good to me.

With NVIDIA, each GPU will load a thread at 100% on core_17, core_18 and core_21. The new version 17 of core_21 can perform multi-threaded sanity checks, which speeds up WU progress by not letting the GPU idle while the sanity checks are performed.

The CPU projects often have problems with thread counts that are larger prime numbers, such as 7 and higher.

Suggest you try running the CPU with 8 threads, which will leave 2 threads dedicated to the GPUs and 2 threads for the intermittent sanity checks.
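One way to turn this advice into a rule of thumb, as a sketch only: the helper `pick_cpu_threads` and the idea of stepping down past primes are hypothetical, built from the "7 and higher" remark about prime thread counts, not from any Folding@home setting or documentation.

```python
def is_prime(n: int) -> bool:
    if n < 2:
        return False
    i = 2
    while i * i <= n:
        if n % i == 0:
            return False
        i += 1
    return True


def pick_cpu_threads(logical_cpus: int, gpus: int, spare_for_checks: int = 0) -> int:
    """Hypothetical helper: threads left for CPU folding after reserving one per GPU
    plus optional spares, stepped down past primes >= 7 (rule of thumb from this thread)."""
    n = logical_cpus - gpus - spare_for_checks
    while n >= 7 and is_prime(n):
        n -= 1
    return max(n, 0)


print(pick_cpu_threads(12, 2))                      # 10
print(pick_cpu_threads(12, 2, spare_for_checks=2))  # 8
print(pick_cpu_threads(12, 2, spare_for_checks=3))  # 6 (7 is skipped)
```

With 12 logical CPUs, two GPUs and two spares for sanity checks, it lands on the 8 threads suggested here.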

- Joined

- Jul 17, 2003

Sounds like we have a little semantics at play here. One thread per GPU will work just fine. In fact, if you let the F@H client choose for you, it will take one "core" (physical or virtual) per GPU and leave the rest to actually fold. On a 6-core i7 with HT, F@H treats it as 12 cores: use 10 to fold and 2 for the GPUs.
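The split described here, sketched in Python. `os.cpu_count()` reports logical CPUs (HT threads included), which matches the "12 cores" seen on a 6-core i7 with HT; the function name is hypothetical and this is not the client's actual code.

```python
import os
from typing import Optional, Tuple


def split_as_described(gpus: int, logical_cpus: Optional[int] = None) -> Tuple[int, int]:
    """Hypothetical helper: reserve one logical CPU per GPU, give the rest to CPU folding."""
    if logical_cpus is None:
        # os.cpu_count() counts logical CPUs, so a 6-core i7 with HT reports 12.
        logical_cpus = os.cpu_count() or 1
    gpu_threads = min(gpus, logical_cpus)
    return logical_cpus - gpu_threads, gpu_threads


print(split_as_described(gpus=2, logical_cpus=12))  # (10, 2)
```

Calling `split_as_described(2)` without a CPU count uses whatever the current machine reports.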

Good show all.

- Joined

- Jan 10, 2012

thanks for splaining that don, I was not clear on the core/thread thing.

jrclocker, what driver are you using?

you have a Kepler and a Maxwell installed.

- Joined

- Jan 10, 2012

there is a way to run two drivers in the same rig.

I think torin3 knows how to do that, perhaps he'll chime in on that.

the 660 might be held back by the Maxwell driver.

Please read my explanation more carefully. The older cores only use one thread per GPU. The newer core_21 v17 is multi-threaded for the sanity check. In other words, the GPU can keep calculating while fully occupying one thread and use an additional thread for the sanity check. The older cores can only use a single thread, so the GPU calculation sits idle while the check is performed, which is why the GPU load drops every so often.

If all the CPU threads are busy when the new core does the sanity check, something is going to be interrupted, either the SMP folding or the GPU; I'm not sure which. With the 980 Ti, you do not want anything to interfere with the GPU calculation, so it's best to leave an extra core or two available for the GPUs to avoid contention delays. I'm not sure which folding process, GPU or CPU, will take priority for the sanity check if no idle thread is available. SMP does not like being interrupted either, so it's possible that smp10 with thread contention is slower than smp8. If you decide to do some testing, be sure to compare the same WU running on each device.

- Joined

- Jan 10, 2012

so it's 2 "threads" per gpu now?

I am thinking the thread requirement for core_21 v17 should be 1 thread per GPU + 2 for the extra threads. I watched "top" for a while on two of my hosts and never saw more than 2 extra threads being used by FAH at the same time. However, I do not have any 980 Ti, which could use the extra threads more frequently.

On a Linux host: i7-2600K CPU (4c/8t), 2x GTX 970, driver 346.96, 8 GB RAM

Running projects 11703 and 11705.

Running top showed user fahclient using 6 different threads (all with different PIDs):

- 2 threads FahCore_21 at 100% each (continuous)
- 2 threads fahclient at 0.3-0.7% each (frequent)
- 2 threads fahcorewrapper at 0.3% each (less frequent)

I only observed up to 4 threads using the CPU at the same time.

On a Linux host: i7-930 CPU (4c/8t), 3x GTX 650 Ti Boost, driver 304.131, 6 GB RAM

Running projects 11703, 11703 and 11705.

Running top showed user fahclient using 8 different threads (all with different PIDs):

- 3 threads FahCore_21 at 100% each (continuous)
- 2 threads fahclient at 0.3-0.7% each (frequent)
- 3 threads fahcorewrapper at 0.3% each (much less frequent)

I only observed up to 5 threads using the CPU at the same time.
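The "1 per GPU + 2" guess can be checked against the two hosts above in a few lines. The peak counts are copied from those top observations and the function name is hypothetical; two hosts are far too few to confirm the rule, only to show it is consistent so far.

```python
# (GPU count, peak concurrent busy threads seen in top), copied from the two hosts above.
observations = {
    "i7-2600K, 2x GTX 970": (2, 4),
    "i7-930, 3x GTX 650 Ti Boost": (3, 5),
}


def predicted_peak(gpus: int, extra: int = 2) -> int:
    # Proposed rule: one 100% FahCore_21 thread per GPU plus up to `extra` helper threads.
    return gpus + extra


for host, (gpus, seen) in observations.items():
    print(f"{host}: predicted {predicted_peak(gpus)}, observed {seen}")
```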

- Joined

- Sep 25, 2015

- Thread Starter

- #17

I'm almost tempted to get 2 of these mini GTX 750 Ti cards ($109 on Amazon right now) to replace the old GTX 660 I'm running. They pull all of their power from the PCIe slot...so 2 of these will consume as much power as my current GTX 660:

http://www.amazon.com/EVGA-GeForce-...ie=UTF8&qid=1454036069&sr=1-2&keywords=750+ti

Does anybody have any ppd estimates on a GTX 750 Ti running at stock?
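A quick check of the power claim, as a sketch: the figures below are the commonly published reference-board TDPs and the 75 W PCIe x16 slot limit, assumed rather than measured on this rig, and real folding draw will differ.

```python
# Assumed figures: reference-board TDPs and the PCIe x16 slot power limit.
PCIE_X16_SLOT_MAX_W = 75  # a slot-powered card is specified to draw no more than this
GTX_750_TI_TDP_W = 60     # reference GTX 750 Ti (no auxiliary power connector)
GTX_660_TDP_W = 140       # reference GTX 660

pair_tdp = 2 * GTX_750_TI_TDP_W         # rated draw of two 750 Ti cards
pair_ceiling = 2 * PCIE_X16_SLOT_MAX_W  # upper bound for two slot-powered cards

print(f"2x GTX 750 Ti: {pair_tdp} W rated, {pair_ceiling} W max from the slots")
print(f"GTX 660: {GTX_660_TDP_W} W rated")
```

On these numbers the pair sits a little under the 660's rating, and even at the slot ceiling it stays within about 10 W of it.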

So what you're basically saying, Hayesk, is that using the CPU to fold could slow down the GPU on certain WUs. I wonder if the PPD lost on the GPU is greater than what the CPU can produce; if so, it might actually be better to leave the CPU free and available to the GPUs at all times instead of folding, or only fold on a couple of cores/threads so the GPUs have everything they could need. I'm not sure how this is different for AMD rigs, but I've seen some new processes pop up during some WUs and my PPD seems to slow down too (and I don't fold on the CPU either). Guess AMD really does suck for folding, lol. Either way, I'ma keep chugging along the best I can.
