
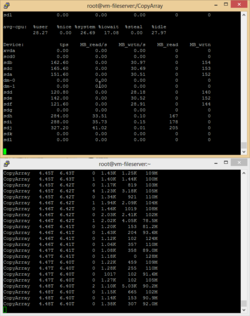

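| Device | tps | MB_read/s | MB_wrtn/s | MB_read | MB_wrtn |
|--------|-----|-----------|-----------|---------|---------|
| xvda | 7.50 | 0.13 | 0.00 | 1 | 0 |
| scd0 | 0.00 | 0.00 | 0.00 | 0 | 0 |
| sdb | 201.70 | 0.00 | 49.13 | 0 | 491 |
| sdc | 228.10 | 0.00 | 48.84 | 0 | 488 |
| sda | 217.30 | 0.00 | 49.20 | 0 | 492 |
| dm-0 | 7.50 | 0.13 | 0.00 | 1 | 0 |
| dm-1 | 0.00 | 0.00 | 0.00 | 0 | 0 |
| sdd | 213.50 | 0.00 | 48.89 | 0 | 488 |
| sde | 211.30 | 0.00 | 49.54 | 0 | 495 |
| sdf | 205.90 | 0.00 | 49.13 | 0 | 491 |
| sdg | 0.00 | 0.00 | 0.00 | 0 | 0 |
| sdh | 527.90 | 65.96 | 0.00 | 659 | 0 |
| sdi | 512.20 | 64.03 | 0.00 | 640 | 0 |
| sdj | 517.00 | 64.61 | 0.00 | 646 | 0 |
| sdk | 278.50 | 0.00 | 69.62 | 0 | 696 |
| sdl | 279.60 | 0.00 | 69.90 | 0 | 699 |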
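If anyone wants to total those numbers up rather than eyeball them, here is a rough Python sketch. It assumes the paste is plain `iostat -m` style text (device name followed by five numeric columns, which is what the header above looks like). The names `parse_iostat`, `totals`, and `SAMPLE` are made up for illustration, and `SAMPLE` only holds a few of the rows from the output above.

```python
# A few rows copied from the output above, used only as sample input.
SAMPLE = """\
Device: tps MB_read/s MB_wrtn/s MB_read MB_wrtn
xvda 7.50 0.13 0.00 1 0
sdb 201.70 0.00 49.13 0 491
sdh 527.90 65.96 0.00 659 0
sdk 278.50 0.00 69.62 0 696
"""


def parse_iostat(text):
    """Return {device: (tps, read_mb_s, write_mb_s)} from iostat-style rows."""
    rows = {}
    for line in text.splitlines():
        parts = line.split()
        # Device rows are a name plus five numeric columns; skip the header and blanks.
        if len(parts) != 6 or parts[0].startswith("Device"):
            continue
        try:
            tps, read_mb_s, write_mb_s = (float(p) for p in parts[1:4])
        except ValueError:
            continue  # not a data row after all
        rows[parts[0]] = (tps, read_mb_s, write_mb_s)
    return rows


def totals(rows, prefix="sd"):
    """Sum read and write MB/s over devices whose name starts with `prefix`."""
    selected = [v for name, v in rows.items() if name.startswith(prefix)]
    read_total = round(sum(v[1] for v in selected), 2)
    write_total = round(sum(v[2] for v in selected), 2)
    return read_total, write_total


if __name__ == "__main__":
    read_total, write_total = totals(parse_iostat(SAMPLE))
    print(f"sd* read: {read_total} MB/s, write: {write_total} MB/s")
```

Filtering on the `sd` prefix is a deliberate choice here: the `dm-*` rows show the same figures as `xvda`, so summing every row would likely count that traffic twice.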

Yes, I am running the IT firmware to pass through the disks to the operating system.

Oh, absolutely.