Project: Rackmount Overkill

- Thread starter Automata

- Joined

- Oct 5, 2008

- Location

- Cumbria (UK)

Then get offline and do your homework! lol

But don't be away too long! You're a BIG help on this forum. Shame that PERC 5/i I got was a dud. I was thinking it may be the RAM, but I've bought a 512MB PC2 DIMM off eBay just to make sure. So far I have been sent a 512MB DDR1 PC3200 ECC DIMM, a DDR2 1GB PC2-3200R, and then the same DIMM as the first! I know it shouldn't put me off the 5/i, but it has. That's why I'm thinking FreeNAS.

- Joined

- May 15, 2006

- Thread Starter

- #1,023

I'm taking breaks after doing a bit of work, and I got a bit of time to do it.

With as few drives as you have, software RAID would probably be best cost-wise (no monetary cost, since you can do it through the motherboard), and it keeps things simple.

- Joined

- Oct 5, 2008

- Location

- Cumbria (UK)

Should I use my RocketRAID 2220 in a 32-bit 33 MHz PCI slot, or just use the SATA controller on the Asus M5A88-V EVO?

- Joined

- May 15, 2006

- Thread Starter

- #1,025

PCI would be limited to about 133 MB/sec (the theoretical peak, shared by everything on the bus), and I think your hard drives could well exceed that. If you have the SATA controller on the board, I would suggest using that instead. Leave the old hardware to the old computers.
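To see where the PCI ceiling comes from: a 32-bit bus at a nominal 33.33 MHz moves at most 4 bytes per clock, roughly 133 MB/s, and every device on the bus shares that. The sketch below does the arithmetic and compares it against a drive array; the per-drive speed and drive count are hypothetical placeholders, not measurements from this build.

```python
# Theoretical ceiling of conventional PCI vs. the combined speed of some drives.
BUS_WIDTH_BITS = 32   # conventional PCI slot width
CLOCK_HZ = 33.33e6    # nominal "33 MHz" PCI clock


def pci_peak_mb_per_s(width_bits: int = BUS_WIDTH_BITS, clock_hz: float = CLOCK_HZ) -> float:
    """Peak PCI bandwidth in MB/s (10**6 bytes), assuming one transfer per clock."""
    return width_bits / 8 * clock_hz / 1e6


def drives_saturate_bus(drive_mb_per_s: float, drive_count: int) -> bool:
    """True if the drives' combined sequential rate exceeds the bus peak."""
    return drive_mb_per_s * drive_count > pci_peak_mb_per_s()


if __name__ == "__main__":
    print(f"PCI peak: {pci_peak_mb_per_s():.0f} MB/s")
    # Hypothetical array: four drives at an assumed 100 MB/s sequential each.
    print(drives_saturate_bus(100, 4))
```

Real-world PCI throughput sits below this peak because of bus protocol overhead, which only strengthens the case for the onboard SATA controller.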

- Joined

- Oct 5, 2008

- Location

- Cumbria (UK)

That's what I was thinking. I'm hoping to leave the old power hogs (P4s!) behind. Since I'm only home two days a week, I want my server to use as little power as possible.

- Joined

- Jul 9, 2011

- Location

- NC

I want one of those

one day.... one day.

- Joined

- May 15, 2006

- Thread Starter

- #1,030

And crazy cable making is complete. This thing is slick. Again, more pictures.

Pile of wires before I mangle them to create the cable.

Since the PCIe connectors on the power supply are 6-pin and the CPU connectors are 8-pin, I have to double up two of the wires, which are the ones shown here.
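Why exactly two wires get doubled can be worked out by counting conductors per rail. The sketch below assumes the PSU's 6-pin lead carries three +12 V and three ground conductors and that the 8-pin CPU (EPS) connector needs four of each; those counts are assumptions for illustration, so check your own PSU and board pinouts before cutting anything.

```python
from collections import Counter

# Assumed conductor counts per rail (verify against your own hardware):
SOURCE_6PIN = ["+12V"] * 3 + ["GND"] * 3   # PSU-side 6-pin PCIe lead
TARGET_8PIN = ["+12V"] * 4 + ["GND"] * 4   # 8-pin CPU (EPS) connector


def wires_to_double(source: list, target: list) -> Counter:
    """Per rail, count target pins that have no dedicated source wire.

    Each such pin must be fed by splitting (doubling) an existing wire
    of the same rail.
    """
    return Counter(target) - Counter(source)


print(wires_to_double(SOURCE_6PIN, TARGET_8PIN))
```

Under these assumptions the result is one +12 V wire and one ground wire, each feeding two pins, which matches the two doubled wires in the photo. Each doubled wire then carries the current of two pins.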

All the wires done on the PCIe side of the connector.

The final cable: 6-pin on the left, CPU 8-pin on the right.

Here is how the cable looks while installed.

And of course, nothing explodes when I plug it in.

But, not all good news. I'm running into some difficulty with the new hardware, but I think it is the power supply. I'm using a "new" (it is a year old, but has seen very little use) Corsair HX650w, and the entire system randomly shuts down, even in the BIOS while completely idle. Additionally, it randomly cut power to my hard drive/DVD drive while booting, which caused the system to lock up at the Windows boot screen. If this keeps giving me issues tonight, I'll back up the server and pull its power supply for now. I may need a somewhat bigger one in the future. I'm currently finishing the Windows installation, but I suspect it is just waiting for the worst millisecond to turn off. I also tested the voltages on the power supply, and they have me a bit worried. While they aren't outside the ATX spec (±5%), they are closer to the limit than I want to see: 12 V reads around 12.3 V and 5 V reads around 5.2 V.
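Putting those readings against the ±5% tolerance shows why the 5 V rail is the more worrying one. This is just a small calculator for the two readings quoted above; the 5% figure is the tolerance cited in the post.

```python
# How far each measured rail sits from nominal, relative to a +/-5% tolerance.
TOLERANCE = 0.05


def rail_check(nominal: float, measured: float, tolerance: float = TOLERANCE) -> tuple:
    """Return (deviation as a fraction of nominal, fraction of the allowed band used)."""
    deviation = (measured - nominal) / nominal
    return deviation, abs(deviation) / tolerance


for nominal, measured in [(12.0, 12.3), (5.0, 5.2)]:
    dev, used = rail_check(nominal, measured)
    print(f"{nominal} V rail at {measured} V: {dev:+.1%} ({used:.0%} of the 5% band)")
```

The 12 V reading is +2.5% (half of the allowed band), while 5.2 V is +4%, already 80% of the way to the upper limit.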

On the upside, these Dynatron heatsinks/fans are crazy good. I'm pretty sure the fans are actually made by Delta or another high-end manufacturer. At idle, they sit around 1600 RPM and are surprisingly quiet. I set the BIOS to full-throttle mode, and they are Delta loud and move a ton of air. I'm quite impressed.

- Joined

- May 15, 2006

- Thread Starter

- #1,031

Spoke too soon on the Windows install. It loaded up and booted fine, then programs started crashing. I've never seen the "The application is not responding. Do you want to end it?" dialog lock up itself. I gave up after two minutes. I'm going to take a full backup of my OS drive and shut down the server.

- Joined

- May 15, 2006

- Thread Starter

- #1,032

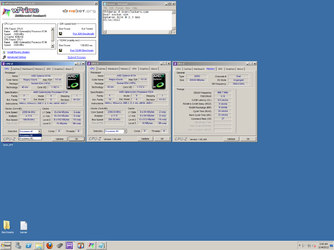

Looks like my "known working" power supply wasn't as known working as I originally thought. I pulled the HX620w out of my file server and it works great. This server is so much faster than my IBM x3650s, it isn't even funny. I'm doing benchmarks for the benchmarking team now. I'll post results later.

- Joined

- May 15, 2006

- Thread Starter

- #1,034

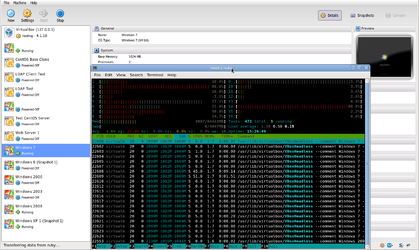

Board installed. It barely fit. I had to unscrew the fan divider and push it toward the front of the case to install it. CentOS 6.2 is already installed; I just need to SSH in and get everything set up. It is going to take me a bit.

- Joined

- May 15, 2006

- Thread Starter

- #1,036

Server is fully functional. CUPS is working now that I've enabled the option to share printers (derp), my rsync scripts are working properly, and Icinga is sending me emails again.

For the server, I've been considering setting up a huge network pipe using the extra NICs I have. There is a PCI slot on the motherboard in which I could install a 10/100 card for management purposes. I could then team all four gigabit NICs.

If anyone was curious how much the new board draws (just the board, no hard drives), it is roughly 220 W idle and 340 W at full load (372 W peak) without any power management. That is measured at the wall with a Kill A Watt, so the numbers include power supply losses.
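Since power use came up earlier in the thread, the wall readings above translate into yearly energy like this. The sketch assumes a constant draw 24/7, which a real server won't have, so treat the two figures as bounds rather than a prediction; multiply by your own per-kWh rate to get a cost.

```python
HOURS_PER_YEAR = 24 * 365


def annual_kwh(watts: float, hours: float = HOURS_PER_YEAR) -> float:
    """Energy in kWh for a constant draw of `watts` over `hours`."""
    return watts * hours / 1000


idle = annual_kwh(220)   # if the board sat at its measured idle draw all year
load = annual_kwh(340)   # if it ran at its measured full-load draw all year
print(f"idle: {idle:.0f} kWh/yr, full load: {load:.0f} kWh/yr")
```

That works out to roughly 1,930 kWh per year at idle and 2,980 kWh per year at full load, before adding any hard drives.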

- Joined

- May 15, 2006

- Thread Starter

- #1,037

I feel bad putting so many of my own posts in a row. I redid the network wiring for a few reasons. The first was my fault: I put the switch in the front of the rack, which created a ton of extra wiring and made it difficult to work with, so I flipped it to the back of the rack. Additionally, I moved the power strips to the left side of the rack, since that is where all the systems plug in. I then made new cables (cannibalizing longer ones) to fit the new setup. While I was doing this, I added a 10/100 PCI network card to the server, which is going to be my "service port" or fallback. The other four gigabit NICs are going to be aggregated into one connection (mode=4, 802.3ad) to give me around 465 MB/sec of total throughput across multiple connections (a single connection still tops out at one gigabit link).
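Where the ~465 MB/sec figure comes from, and why it is an aggregate: four 1 Gbit/s links give a 500 MB/s line-rate ceiling, and the estimate implies about 93% of that after overhead. In 802.3ad mode the Linux bonding driver sends each flow out over one link chosen by a hash (the kernel's default `xmit_hash_policy` is layer2, which hashes MAC addresses), so one transfer never exceeds a single link. The CRC32-over-a-4-tuple hash below is a hypothetical stand-in to show that behavior, not the kernel's actual function, and the addresses and ports are made up.

```python
import zlib

LINKS = 4          # gigabit NICs in the bond
LINK_GBPS = 1.0    # per-link line rate


def raw_aggregate_mb_per_s(links: int = LINKS, link_gbps: float = LINK_GBPS) -> float:
    """Line-rate ceiling of the bond in MB/s (10**6 bytes), before overhead."""
    return links * link_gbps * 1000 / 8


def pick_link(flow: tuple, links: int = LINKS) -> int:
    """Hypothetical per-flow hash: every packet of one flow lands on the same link."""
    key = "|".join(map(str, flow)).encode()
    return zlib.crc32(key) % links


print(raw_aggregate_mb_per_s())          # 500.0
print(465 / raw_aggregate_mb_per_s())    # efficiency implied by the ~465 MB/sec estimate
flow = ("10.0.0.2", "10.0.0.10", 51515, 873)   # made-up source/dest IPs and ports
print({pick_link(flow) for _ in range(10)})    # a single link index, every time
```

The switch also picks its own link for traffic coming back, so the full ~465 MB/sec only shows up when several clients or connections are active at once.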

For the IBM servers, I may do the same thing with the two gigabit NICs. For Perl (my Icinga server), I'll probably use a redundancy mode (mode=1, active-backup) since I don't need the throughput.
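In mode=1 (active-backup), only one NIC carries traffic at a time and another takes over if its link drops, so you get redundancy without extra bandwidth. The function below is a simplified sketch of that selection logic, not the kernel's code: it ignores link-monitoring intervals and options such as `primary`, and the interface names are hypothetical.

```python
from typing import Dict, List, Optional


def active_slave(slaves: List[str], link_up: Dict[str, bool],
                 current: Optional[str] = None) -> Optional[str]:
    """Pick the interface that carries traffic in an active-backup bond.

    Keeps the current slave while its link is up; otherwise fails over to
    the first listed slave whose link is up. Returns None if all are down.
    """
    if current is not None and link_up.get(current, False):
        return current
    for name in slaves:
        if link_up.get(name, False):
            return name
    return None


# Hypothetical two-port bond, like the IBM boxes or Perl would have.
ports = ["eth0", "eth1"]
active = active_slave(ports, {"eth0": True, "eth1": True})
print(active)  # eth0
active = active_slave(ports, {"eth0": False, "eth1": True}, active)
print(active)  # eth1
```

In this sketch the bond stays on eth1 even after eth0 recovers, since nothing forces a switch back while the current link is healthy.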

Code:

```
e1000e 0000:06:00.0: eth0: (PCI Express:2.5GT/s:Width x1)
e1000e 0000:06:00.0: eth0: Intel(R) PRO/1000 Network Connection
e1000e 0000:06:00.0: eth0: MAC: 1, PHY: 4, PBA No: D50854-002
e1000e 0000:05:00.0: eth1: (PCI Express:2.5GT/s:Width x1)
e1000e 0000:05:00.0: eth1: Intel(R) PRO/1000 Network Connection
e1000e 0000:05:00.0: eth1: MAC: 3, PHY: 8, PBA No: FFFFFF-0FF
e1000e 0000:04:00.0: eth2: (PCI Express:2.5GT/s:Width x1)
e1000e 0000:04:00.0: eth2: Intel(R) PRO/1000 Network Connection
e1000e 0000:04:00.0: eth2: MAC: 3, PHY: 8, PBA No: FFFFFF-0FF
e1000e 0000:02:00.0: eth3: (PCI Express:2.5GT/s:Width x1)
e1000e 0000:02:00.0: eth3: Intel(R) PRO/1000 Network Connection
e1000e 0000:02:00.0: eth3: MAC: 1, PHY: 4, PBA No: D50854-002
```

- Joined

- Oct 14, 2007

This thing keeps getting better and better looking, Thiddy!

I presume you have a spool of that red ethernet cable and are making your own as you go?

- Joined

- May 15, 2006

- Thread Starter

- #1,040

> Sexy! Wish I knew some Linux as well as I do Windows, or I'd try VirtualBox or Xen instead of ESXi... which is working for me, but it's semi-picky about its hardware.

The only way to learn about it is to use it.

> This thing keeps getting better and better looking Thiddy!
>
> I presume you have a spool of that red ethernet cable and are making your own as you go?

Thanks. I purchased two 1000 ft spools a few years ago, so I've been making my own cables as I go.
