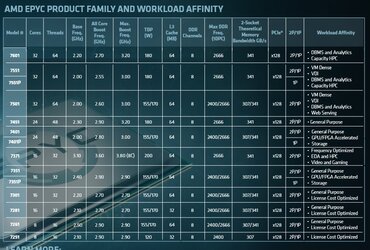

I've been looking at some older AMD EPYC CPUs (2nd Gen EPYC "Rome", 7002 series). They're still expensive (~$460), but they have 128 lanes of PCIe 4.0, 128 MiB of L3 cache, 8 cores/16 threads and 8 DDR4 memory channels. By comparison, the Ryzen 7 7800X3D is $449.00 but has far fewer PCIe lanes and memory channels.

1. Considering the gigantic L3 cache (same total as the 7950X3D), how would such CPUs work for gaming?

2. Would it be possible to tune the 8 DDR4 memory channels so that you get all the bandwidth of the latest dual-channel DDR5 rigs, but with less latency?

3. Do the motherboards for such CPUs still use standard ATX PSU connectors?

4. Are the motherboards for such CPUs E-ATX format, or some specialized format?
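
For question 2, here is a rough back-of-the-envelope sketch of the theoretical peak bandwidth on each side. The memory speeds are my assumptions (DDR4-3200 on all eight EPYC channels, DDR5-6000 as a typical dual-channel desktop kit), and the helper name `peak_bandwidth_gbs` is just made up for illustration. It only covers peak numbers on paper; it says nothing about latency or about what a game would actually see.

```python
def peak_bandwidth_gbs(transfers_mt_s: int, bus_width_bits: int, channels: int) -> float:
    """Theoretical peak memory bandwidth in GB/s (decimal gigabytes)."""
    bytes_per_transfer = bus_width_bits / 8
    return transfers_mt_s * 1e6 * bytes_per_transfer * channels / 1e9


# Assumed speed: DDR4-3200, 64-bit data bus per channel, 8 channels.
epyc_ddr4 = peak_bandwidth_gbs(3200, 64, 8)

# Assumed speed: DDR5-6000, dual channel, 64 data bits per DIMM
# (each DDR5 DIMM is split into two 32-bit subchannels).
desktop_ddr5 = peak_bandwidth_gbs(6000, 64, 2)

print(f"8-channel DDR4-3200: {epyc_ddr4:.1f} GB/s")
print(f"2-channel DDR5-6000: {desktop_ddr5:.1f} GB/s")
```

On paper that comes out to roughly 205 GB/s versus 96 GB/s, so the bandwidth half of the question looks plausible under these assumptions. The latency half is what I can't work out from spec sheets.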
