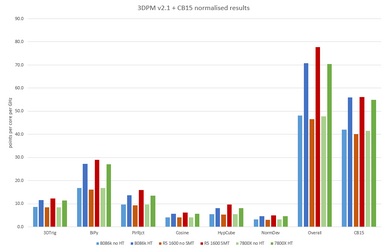

Just did a run on the 8086k, and while the overall result wasn't surprising, the sub results are... more on this later. I just dusted off my 1600 system and am letting Win10 catch up on updates before I can run on that.

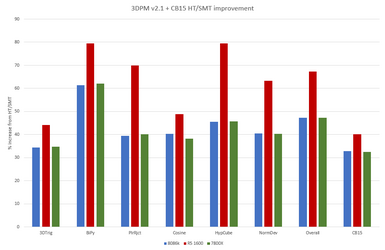

Oh, and the 8086k at stock (4.3 GHz all-core turbo): in CB15 I got 1442 with HT and 1086 without, for about a 33% increase. Best of 3 runs for each. The Ryzen review had the 8700k at 1303 at 4.0 GHz, which would be 1401 scaled to 4.3 GHz to match mine, so that's about the same. To get the smaller 22% difference, I would have to score between 1148 (working back from the scaled 1401) and 1182 (working back from my 1442) without HT. So either I'm doing something wrong, or there is something else going on there. On that note, I'll re-run it a few more times to double check.
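For anyone who wants to check the arithmetic, here is a rough Python sketch of the numbers in this post. The function names are made up for illustration, and the clock scaling assumes the CB15 score scales linearly with frequency, which is only an approximation.

```python
def ht_gain_percent(with_ht, without_ht):
    """Percentage increase from enabling HT."""
    return (with_ht / without_ht - 1.0) * 100.0


def scale_to_clock(score, from_ghz, to_ghz):
    """Scale a score to another clock, ASSUMING linear scaling with frequency."""
    return score * to_ghz / from_ghz


def score_without_ht_for_gain(with_ht, gain_percent):
    """Non-HT score that would produce the given HT gain."""
    return with_ht / (1.0 + gain_percent / 100.0)


# My 8086k results at 4.3 GHz all-core (best of 3 each).
my_gain = ht_gain_percent(1442, 1086)

# Review's 8700k score at 4.0 GHz, scaled up to 4.3 GHz.
scaled_review = scale_to_clock(1303, 4.0, 4.3)

# Non-HT scores needed to see only a 22% gain, from either HT score.
low = score_without_ht_for_gain(scaled_review, 22)
high = score_without_ht_for_gain(1442, 22)

print(f"HT gain: {my_gain:.1f}%")
print(f"Review score scaled to 4.3 GHz: {scaled_review:.0f}")
print(f"Non-HT score needed for 22% gain: {low:.0f} to {high:.0f}")
```

The two ends of the range just depend on whether you work back from the scaled review score or from my own HT result.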
