- Joined: Jul 20, 2002

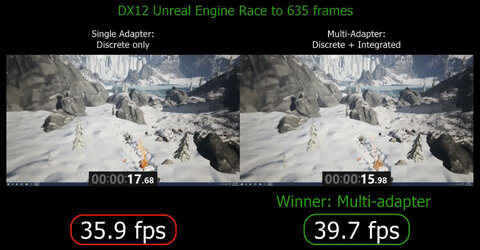

http://www.pcper.com/reviews/Graphics-Cards/BUILD-2015-Final-DirectX-12-Reveal

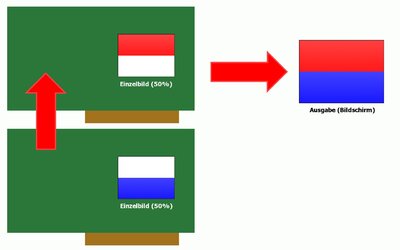

There are two modes of multiadapter functionality: implicit and explicit, and explicit has two modes of its own (linked and unlinked).

So would this DX12 feature make SLI/CrossFire obsolete, at least for DX12 games?

"The Unlinked Explicit Multiadapter is interesting because it is agnostic to vendor, performance, and capabilities -- beyond supporting DirectX 12 at all. This is where you will get benefits even when installing an AMD GPU alongside one from NVIDIA."