Just got this thing installed and ran a few tests to see how this ghetto $999 card compares to the $1500 "real" ones. Here's my girl in her new home for the first time.


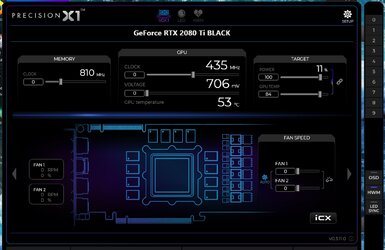

This is how it sits at idle. A bit warm at 53°C, but it makes no noise and seems to be fine.

EDIT: It looks like I was starving my case of airflow a bit and heat was building up. I turned my case fans up from 450 RPM to 590 RPM and temps on everything dropped by about 10°C with no noticeable noise. The card idles at 41°C now.

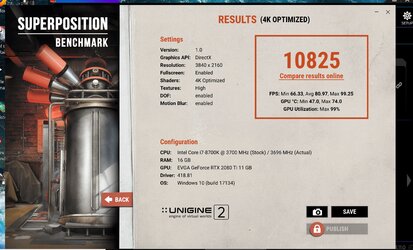

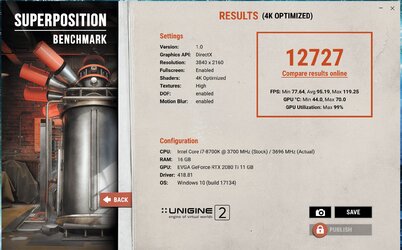

Here's a stock-settings run of Superposition at 4K to set a baseline. Max temp was 74°C, with the core starting out at about 1740 MHz and ending around 1675 MHz after heating up for a while.
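If you want to put a number on how much the core clock sags as the card warms up, here's a quick Python sketch. The function name `clock_drop_pct` is just made up for illustration, and the inputs are the approximate start and end clocks from the stock run above, so treat the result as a ballpark figure.

```python
def clock_drop_pct(start_mhz: float, end_mhz: float) -> float:
    """Return the drop from start to end clock as a percentage of the start clock."""
    if start_mhz <= 0:
        raise ValueError("start_mhz must be positive")
    return (start_mhz - end_mhz) / start_mhz * 100.0


# Approximate figures from the stock 4K run: ~1740 MHz at the start, ~1675 MHz once warm.
stock_drop = clock_drop_pct(1740, 1675)
print(f"Stock run clock drop: {stock_drop:.1f}%")
```

The same helper works for any other run where you note the starting and ending clocks.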

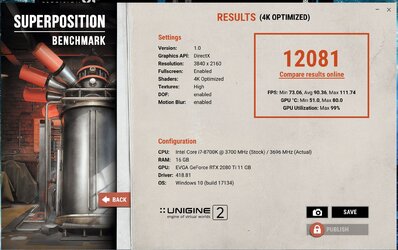

Here's another 4K run after running the EVGA X1 auto overclock with the power and temp sliders maxed. It ended up at +193. Clocks started at 1920 MHz and ended up in the mid-to-upper 1800s after heating up.

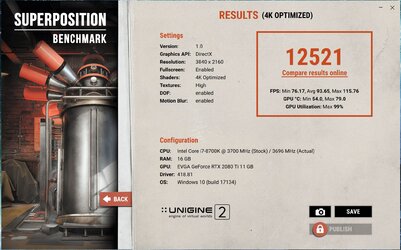

After messing with the RAM a bit, I settled on +1250, or 16500 MHz. Trying 17000 MHz caused artifacts.

And last but not least is another 4K Superposition run with +193/+1250 and the sliders maxed again, but this time with the fans at 100%. This took the max temp from about 80°C to about 70°C and kept the clocks around 1900 MHz for the most part. I'm sure a good cooler could keep it over 2000 MHz under load, no problem.
