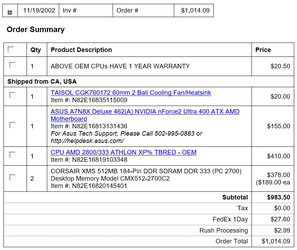

Worse than that. Look at my invoice. I don't have a screenshot of the original invoice. Unfortunately, Newegg doesn't archive invoices more than ten years old. However, back then I copied the information from the invoice into a Word document. Here is a screenshot (with personal information removed) of that document.

Foolish decision. A mistake of gigantic proportions. LoL

What you should have done is gone to the AMD CPU section of ocforums where I was on, 8 hours a day in 2003.

I would have told you to under no circumstances buy the 2800+.

Looking through my receipts, it seems I bought a Thoroughbred B 2100+ for $62; proof below. I remember the $49 sale.

The 2800+ had next to no overclocking headroom, whereas the CPU below, seven times cheaper, routinely overclocked to 2.3 GHz and beyond, EASILY surpassing the 2250 MHz of the "top of the line" 2800+, the most expensive Thoroughbred B ever released. This was such a waste of money, MisterEd!

LoL

View attachment 360610

You said "What you should have done is gone to the AMD CPU section of ocforums where I was on, 8 hours a day in 2003."

There are two problems with that.

1. I bought the Athlon XP 2800+ in 2002, the previous year. Since I was one of the first to receive it, there wasn't anyone to tell me not to get it. Maybe if I had waited six months I would not have bought it.

2. I didn't join this forum until 2004. By that time it didn't matter about the XP 2800+.

BTW, as you can see below, I also bought an ASUS A7N8X Deluxe motherboard. That was probably one of the first AMD dual-channel memory motherboards. A lot of people at the time had problems with dual channel. That was why I was overly cautious and paid so much for the Corsair XMS RAM. I had read that it should work in dual channel. Note that dual-channel memory was so new that dual-channel memory kits did not exist yet. Until they did, some sellers were individually testing pairs of RAM so they could sell them together.
