I don't think I have witnessed that sentiment (acceptable b/c it's Nvidia). There are a couple of games the 7970 wins, but the 680 takes the rest (read: the AnandTech, Tom's and TechPowerUp reviews).

Reading the Anand review, it beats out the GTX 580 by an average of something like 30-40%. That's big. Prior to its release, the GTX 580 was selling (in the US) for $400-$530 (non-watercooled).

Now it's $360-$500 (non-watercooled).

I don't understand this... I would imagine it would catch up and possibly beat it. BUT (like fractions), do to one side what you do to the other. When you overclock the 680 as well, I would imagine it would still beat out the 7950.

You need to look at the Overclockers UK forum then lol, it's proper swinging handbags!

Like I said though, the GTX 680 reference card at stock is 1006 core, 6000 mem; core boost is around 1110 +/- depending on TDP and temperature.

In comparison, the 7970 reference card at stock in reviews is 925 core, 5500 mem. So quite a big difference. As I'm sure you're aware, it'd be easier to do our own reviews on the hardware we own, but I can't afford a 7970 and a 680 to compare.

Please don't think I'm trying to derail this thread, by the way. I'm still working through reviews and findings on the performance difference between the two cards. The problem with different review sites is that they all have different hardware (i.e. CPU, RAM etc.) and different ways to measure.

For example, TechPowerUp doesn't use 4x MSAA in some benches, where AnandTech does.

For the 1536 MB 580s (air-cooled), the prices haven't really changed here in the UK, at around 320-340 GBP.

The only reason I brought up the 7950 is that, at an average price of 330-350 GBP, it's around 80-100 GBP cheaper than a 7970 or a 680.

Martini currently owns a 7950 OC, and he has just bought a 680. He is going to put them back to back. Of course it comes down to luck with how well the silicon overclocks, but hopefully he'll be able to work out the max OC of both cards. The end result will show how much of a punch the 7950 offers for the money.

This is only a snippet of the info I've been able to find so far, as I'm at work, but Guru3D shows how much the 7970 scaled in 2 games from overclocking.

I'm not cherry-picking this info, it's just the only info I have found at the moment.

Summarised Crysis 2: 1920x1200, DirectX 11, High Resolution Texture Pack, Ultra Quality settings, 4x AA

Level: Times Square (2 minute custom time demo)

Standard 7970: 61 fps

Asus DCII OC (1000 core, 5600 mem): 66 fps

Asus DCII OC'd (1250 core, 6000 mem): 76 fps

Std 680 (1006 core, 1058 boost, 6000 mem): 63 fps

680 OC'd (1264 core, 1264 boost, 6634 mem): 70 fps

---------------------------------------------------------------------------------

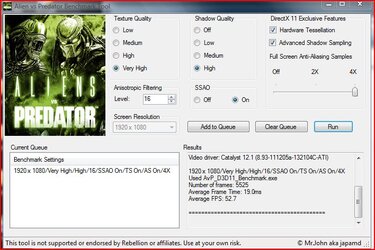

Alien vs Predator: 1920x1200, 4x AA, 16x AF

Standard 7970: 55 fps

Asus DCII OC (1000 core, 5600 mem): 61 fps

Asus DCII OC'd (1250 core, 6000 mem): 71 fps

Std 680 (1006 core, 1058 boost, 6000 mem): 52 fps

680 OC'd (1264 core, 1264 boost, 6634 mem): 58 fps

-------------------------------------------------------------------------------------

Yes, this is only 2 games, and I'm well aware of the potential of the 680 in BF3 and other games vs the 7970. This is just to show how much the 7970 can scale when overclocked, and how benchmarks pitting the std 7970 against the 680 could show different results once both are overclocked.
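
For anyone who wants the scaling as percentages rather than raw fps, here is a rough Python sketch using only the Guru3D figures quoted above. The helper names (`pct_gain`, `oc_scaling`) are made up for illustration, the stock 7970 core clock is taken as the 925 mentioned earlier, and I'm assuming the first clock listed for each 680 entry is the base core clock, so treat the clock percentages as approximate.

```python
# Figures copied from the Guru3D summary above (1920x1200, 4x AA).
# Each entry is (core clock in MHz, average fps).
# Assumption: for the 680, the first listed clock is the base core clock.
RESULTS = {
    "Crysis 2": {
        "7970": {"stock": (925, 61), "oc": (1250, 76)},
        "680": {"stock": (1006, 63), "oc": (1264, 70)},
    },
    "Alien vs Predator": {
        "7970": {"stock": (925, 55), "oc": (1250, 71)},
        "680": {"stock": (1006, 52), "oc": (1264, 58)},
    },
}


def pct_gain(before, after):
    """Percentage increase going from `before` to `after`."""
    return (after - before) / before * 100.0


def oc_scaling(results):
    """Return {game: {card: (clock gain %, fps gain %)}} for stock vs overclocked."""
    scaling = {}
    for game, cards in results.items():
        scaling[game] = {}
        for card, runs in cards.items():
            stock_clock, stock_fps = runs["stock"]
            oc_clock, oc_fps = runs["oc"]
            scaling[game][card] = (
                pct_gain(stock_clock, oc_clock),
                pct_gain(stock_fps, oc_fps),
            )
    return scaling


if __name__ == "__main__":
    for game, cards in oc_scaling(RESULTS).items():
        for card, (clock_pct, fps_pct) in cards.items():
            print(f"{game} | {card}: clock +{clock_pct:.1f}%, fps +{fps_pct:.1f}%")
```

On these numbers the overclocked 7970 gains roughly 25% (Crysis 2) and 29% (AvP) over stock, while the overclocked 680 gains roughly 11-12% in both. Bear in mind the 7970 overclock is also the bigger clock bump (about 35% vs about 26% on the core), so it is not a like-for-like comparison.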

Here are the links for my summary:

http://www.guru3d.com/article/asus-radeon-hd-7970-directcu-ii-review/23

http://www.guru3d.com/article/geforce-gtx-680-review/25

Like I said, if I owned both cards I'd bench them with a wide variety of games, but it's hard to cross-reference different review sites on fps because their results differ. Just like my PC's results would differ from someone else's.

True.

True.