OP

- Joined

- Sep 28, 2015

- Thread Starter

- #21

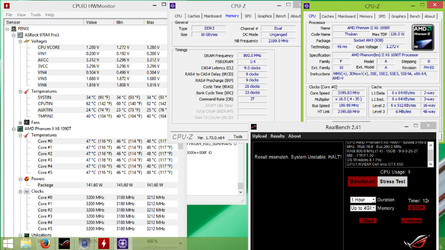

Since nobody at the ROG forum has had any suggestions, and since ASRock's tech support basically just suggested I use some test other than MemTest86, I've decided to move on. Maybe there's a multiprocessing bug in the UEFI and maybe it will get fixed, but it doesn't seem to affect anything apart from MemTest86. I don't know if the RealBench stress test issue is related to the MemTest86 issue, but since RealBench is the only stress test I fail, and I fail it even with five of my six cores disabled in the BIOS as well as at stock settings, I'm inclined to think it's a software issue. Or maybe it's a GPU issue, who knows...

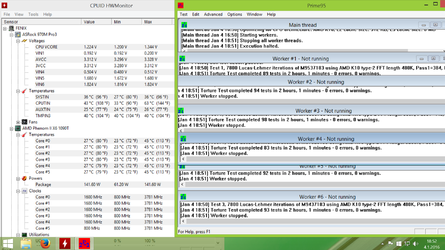

In any case, I've spent some time this week playing with my RAM settings. It seems my current settings are keepers, at least at stock voltages and with CPU-NB & HT @ 2200 MHz. 8-8-9-25-1T at 1600 MHz with FSB:DRAM 1:4 proved to be MaxxMem2 stable, but failed SuperPi 32M. 8-8-9-25-2T failed Prime95. While 9-9-9-25-1T didn't seem to lead to any errors within an hour or two of testing, I decided to play it safe and keep the CR at 2T. Instead, when I get better case fans and a better CPU fan, I'll bump the CPU-NB and HT up to maybe 2400 MHz. According to MaxxMem2 and AMD OverDrive, this would give me memory performance comparable to running my RAM at 8-8-9-25-1T. Of course, I need to see where my CPU OC ends up first, since I'll likely bump the non-turbo clock speed up once I improve the cooling. Either way, this just isn't a processor you want to chase memory-related HWBOT records with. Someone had OC'd the NB to over 4 GHz and had the 4 GB of RAM at CL5 or something, and still the memory was relatively slow. Luckily, RAM speed rarely plays any significant role outside synthetic tests.
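For a rough sense of what the CL8 vs CL9 difference amounts to on paper, here's a quick sketch. It assumes "1600 MHz" means DDR3-1600 (1600 MT/s effective, so an 800 MHz memory clock), and the function name `cas_latency_ns` is just something made up for illustration. It only covers CAS latency, not the command rate or the CPU-NB speed, so it says nothing about what MaxxMem2 would actually report.

```python
def cas_latency_ns(cl_cycles: int, data_rate_mts: float) -> float:
    """Theoretical CAS latency in nanoseconds for DDR memory.

    Assumes data_rate_mts is the effective DDR rate (e.g. 1600 for DDR3-1600),
    so the actual memory clock is half of that.
    """
    clock_mhz = data_rate_mts / 2          # DDR transfers twice per clock cycle
    return cl_cycles * 1000 / clock_mhz    # cycles / MHz = microseconds, x1000 = ns


for cl in (8, 9):
    print(f"CL{cl} @ DDR3-1600: {cas_latency_ns(cl, 1600):.2f} ns")
```

Under those assumptions, the gap between CL8 and CL9 comes out to a little over a nanosecond, which fits the point that RAM timings rarely matter much outside synthetic tests.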