Enter The Matrix: Slice out and get the best part from your hard drives

- Thread starter bing

- Start date

- Joined

- Aug 29, 2002

I know that HD Tune, HD Tach & ATTO are used for testing. The question I have is: have any standard settings ever been set forth for benching?

For ATTO... the standard seems to be: 0.5 to 8192 KB for Transfer Size; 256 MB for Total Length; and 10 for Queue Depth.

For HD-Tach, run the Long Bench test rather than the short one.

That way, it will be comparable to virtually all the other tests in this thread.

B

- Joined

- May 20, 2002

New Matrix drivers have been posted on Intel's site, version 8.2

Thanks!

- Joined

- Sep 22, 2004

I'm thinking of a WD6400AAKS x 4 Intel Matrix Raid setup

I'm thinking the same thing Audio.

The bench with the higher burst rate is at 128k stripe the other is at 64k stripe, both unformatted.

I went ahead with the 64k stripe since I decided to format with 64k also... seemed logical... also since this array is used solely for large media files... avi, mkv, and dvd rips to supply to my HTPC setup over gigabit LAN.

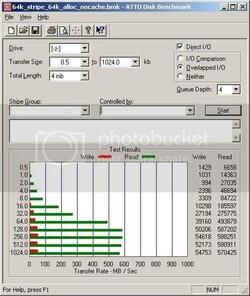

EDIT: Here are my ATTO Benchmarks, on all default settings, on the same RAID-5 volume. 64k stripe and 64k allocation.

First, with write-back cache disabled

Then, with write-back cache enabled

Last edited:

- Thread Starter

- #1,525

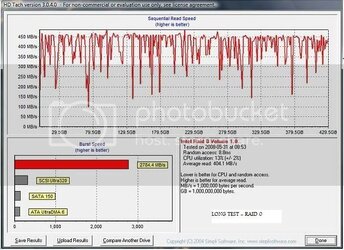

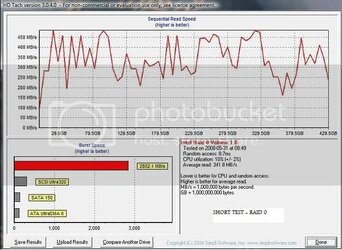

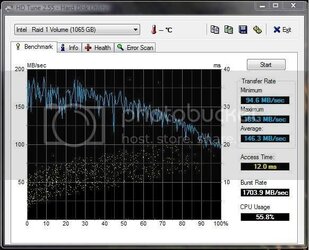

I'm thinking the same thing Audio. Here's a shot of my current 4 x 320GB setup, taken when I was benching. This is a mixed array of WD 320GB drives from two generations. Two are WD3200KS and two are the newer WD3200AAKS. I have enough external storage space right now to back up what I've got, so I'm thinking now may be the time to go ahead and move to the 4 x 640GB setup.

The bench with the higher burst rate is at 128k stripe the other is at 64k stripe, both unformatted.

I went ahead with the 64k stripe since I decided to format with 64k also... seemed logical... also since this array is used solely for large media files... avi, mkv, and dvd rips to supply to my HTPC setup over gigabit LAN.

Thanks for sharing the results.

What's interesting is the 128K vs 64K stripe size: it looks like 128K doesn't suffer higher CPU utilization than 64K, even with that higher burst rate.

I wonder why you still chose the 64K stripe size, since a larger stripe size has more advantages, especially for big files.

- Joined

- Sep 22, 2004

Thanks for sharing the results.

What's interesting is the 128K vs 64K stripe size: it looks like 128K doesn't suffer higher CPU utilization than 64K, even with that higher burst rate.

I wonder why you still chose the 64K stripe size, since a larger stripe size has more advantages, especially for big files.

I guess the reason I chose the 64k stripe was because I was going to format with 64k (the highest NTFS can go) as well. Burst rate really doesn't mean a whole lot in real-world performance, and the average read and random access are virtually identical.

I also added my ATTO benchmarks to my previous post... what I find interesting is the differences one sees with write-back cache off and on.

Lastly, I'm a little confused with options in different places to enable caching on my array volumes. In the screen shot below you see the volume Raid5Vol1 in the Matrix Storage Console has Write-Back Cache Enabled. Yet when I look at the properties of this volume through the Device Manager I see that Windows believes caching is not enabled. I've also included my Driver version... and if I haven't mentioned it before, this is on XP 64-bit.

I see some of you in this thread showing screen shots where you look to be enabling caching for your volumes in the Policies tab for each volume. So I guess I'm asking...

Where should I be enabling caching? Intel Matrix Console? Windows Volume Policies? Both?

- Joined

- Apr 23, 2002

I see some of you in this thread showing screen shots where you look to be enabling caching for your volumes in the Policies tab for each volume. So I guess I'm asking...

Where should I be enabling caching? Intel Matrix Console? Windows Volume Policies? Both?

Write-caching is automatically enabled and can't be disabled if I remember correctly. Windows doesn't pick up on it for whatever reason, so the checkbox doesn't do anything. 32 or 64-bit OS is irrelevant. Of course you can check it if you'd like.

- Joined

- Sep 22, 2004

Ok, that makes perfect sense... I thought that since Windows is talking to the volumes through the Intel driver, and that the Matrix Console is what manages how that driver behaves, then Windows just doesn't know how that driver is already configured. Thus, no check in the write caching.

I guess those who are using the options on the Policies tab from Windows are doing so because maybe they don't want to install the Matrix Console software. I like the Matrix Console personally, but this is my first Intel RAID so others are undoubtedly more experienced and surely have their reasons.

- Joined

- Apr 29, 2002

Is there any significant performance gain using 2x Raptors in RAID 0 as a boot/OS/app drive or am I better off just using boot select during POST and installing various OSes on two single drives?

Raptor 1: XP x86 & Vista x64

Raptor 2: Server 2003 x64 & Server 2008 x64

versus

Matrix RAID 0: XP+S2k3+Vista+S2k8

- Joined

- May 17, 2004

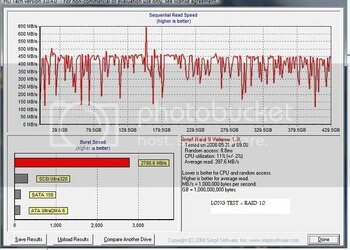

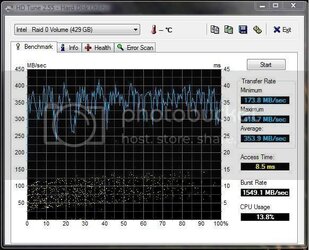

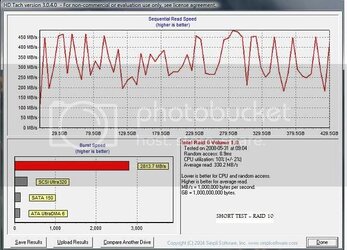

Well, here's my 4 x 640GB WDs in Vista Premium 64-bit: first is a 400GB RAID 0 volume (100GB from each HD) and the second is the rest of the space in RAID 10.

The RAID 0 volume would have been faster, with maybe lower access times, if I had used a smaller size, but I wanted to put my games here too... It's much faster than my previous single 160GB Seagate....

Any input? ...besides shrinking the RAID 0 volume to 200GB (50GB from each HD), is there anything else that would make things faster and be worth installing everything again...?
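For anyone weighing up slice sizes, here is a rough Python sketch of how the usable space works out for a RAID 0 slice plus a RAID 10 slice on the same four drives. It assumes 640GB drives in decimal gigabytes and ignores formatting overhead and whatever the controller reserves for metadata; the helper name `matrix_layout` is made up for illustration and is not part of any Intel tool.

```python
def matrix_layout(drives, drive_gb, raid0_total_gb):
    """Rough usable sizes for a RAID 0 slice plus a RAID 10 slice on the same drives.

    Hypothetical helper: ignores controller metadata and formatting overhead.
    """
    if drives < 4 or drives % 2 != 0:
        raise ValueError("RAID 10 needs an even number of drives, at least 4")
    # RAID 0 takes an equal slice from every drive.
    raid0_per_drive = raid0_total_gb / drives
    if raid0_per_drive > drive_gb:
        raise ValueError("RAID 0 slice is larger than the drives allow")
    # Whatever is left on each drive goes to the second volume.
    rest_per_drive = drive_gb - raid0_per_drive
    # Mirroring halves the usable space of the second volume.
    raid10_usable = rest_per_drive * drives / 2
    return {
        "raid0_per_drive_gb": raid0_per_drive,
        "raid0_usable_gb": float(raid0_total_gb),
        "raid10_usable_gb": raid10_usable,
    }


if __name__ == "__main__":
    for raid0_size in (400, 200):
        print(raid0_size, matrix_layout(4, 640, raid0_size))
```

On those assumptions, a 400GB RAID 0 slice leaves roughly 1080GB usable in RAID 10, and a 200GB slice leaves roughly 1180GB.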

Any input?

You might want to run the long test in HD Tach - the short test doesn't give great results.

- Joined

- Sep 22, 2004

Is there any significant performance gain using 2x Raptors in RAID 0 as a boot/OS/app drive or am I better off just using boot select during POST and installing various OSes on two single drives?

Raptor 1: XP x86 & Vista x64

Raptor 2: Server 2003 x64 & Server 2008 x64

versus

Matrix RAID 0: XP+S2k3+Vista+S2k8

I would personally install on single drives... I'm not a fan of RAID'ing my OS.

Not sure what kind of real world speed improvement you would see in RAID-0 over a single Raptor.

- Joined

- Aug 29, 2002

I would personally install on single drives... I'm not a fan of RAID'ing my OS.

Not sure what kind of real world speed improvement you would see in RAID-0 over a single Raptor.

edited...

The rig that has just been posted should match any Raptor rig, and provide a whole heap more storage and redundancy for large volumes of data.

There is plenty of technology available now that allows good robust systems for imaging and backups, etc, and the raid 10 array alone allows for 1 or in some cases 2 drives to fail (depending on which 2) and still be there once the O/S drive is rebuilt.
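To put the "depending on which 2" in concrete terms, here is a small Python sketch. It assumes a 4-drive RAID 10 where drives 0 and 1 form one mirrored pair and drives 2 and 3 form the other; that numbering is only for illustration, since the real pairing depends on the controller.

```python
from itertools import combinations

# Assumed pairing for illustration only: (0, 1) mirror each other, as do (2, 3).
MIRROR_PAIRS = [(0, 1), (2, 3)]


def survives(failed, pairs=MIRROR_PAIRS):
    """RAID 10 survives as long as no mirrored pair has lost both members."""
    failed = set(failed)
    return all(not set(pair) <= failed for pair in pairs)


def two_drive_outcomes(pairs=MIRROR_PAIRS):
    """Map every possible two-drive failure to True (survives) or False (lost)."""
    drives = sorted(d for pair in pairs for d in pair)
    return {combo: survives(combo, pairs) for combo in combinations(drives, 2)}


if __name__ == "__main__":
    outcomes = two_drive_outcomes()
    for combo, ok in outcomes.items():
        print(combo, "array survives" if ok else "array lost")
    print(sum(outcomes.values()), "of", len(outcomes), "two-drive failures are survivable")
```

With that pairing, four of the six possible two-drive failures leave the array intact; only losing both halves of the same mirror takes it down.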

Last edited:

- Joined

- Apr 29, 2002

I would personally install on single drives... I'm not a fan of RAID'ing my OS.

Not sure what kind of real world speed improvement you would see in RAID-0 over a single Raptor.

I'll probably just go with single Raptors for now but eventually I'll want to try a 4x640 0/1+0 configuration and put my Raptors on another system. I just love all those bad @$$ STR graphs.

- Joined

- May 17, 2004

- Joined

- Aug 29, 2002

Been getting asked for a while now off and on, for a really simple guide to setting up a Matrix Array system.

Hope it's okay... http://www.ocforums.com/showthread.php?p=5658452#post5658452

Enjoy.

Having done all that, and now having a server in the house, I figured I would go back to single drives in my two main machines for a while. I purchased the same Seagate 500Gb 320NS drives (so they can go into the server with the others if I am unhappy), and I would have to say that I am really surprised at how much lag I am seeing.

This is on a fresh install of Vista, with only the basics installed. Outlook is especially laggy... when I open it up, I have to wait several seconds for it to allow me to change folders, etc., something I am not at all used to with the Matrix setups.

Might have to look at a couple of 150 Raptors or maybe even an SSD.

edit... just discovered a really great read on the virtues of RAID vs. single drive. You've gotta read this. http://www.ocforums.com/showthread.php?t=564714

Last edited:

Hello all

Wow, what an impressive thread... Have been reading it for more than two days (lots of pages!).

Hello guys, I'll soon get a P45+ICH10R with 4 Barracuda 7200.11 (32meg cache) + Q6600.

My first intent was to create two RAID 5 volumes (because I thought I couldn't mix the RAID modes), but I'm clearly heading now with :

- One RAID0 partition of 100gig using the four drives

- One RAID5 partition using the remainder

I have few questions though:

1) What stripe size should I use for both partitions? It looks like 64KB is fine if you have a good CPU, is that right?

2) I don't really understand the benefits of RAID 10 over RAID 5. Is RAID 10 supposed to be faster at writes?

3) How does the performance of RAID 5 compare to RAID 0? I've seen a lot of benchmarks, but I would really like a "real world" (even if we're inside the matrix) feeling.

I didn't see ICH10R benchmarking results, so I'll post mine as soon as I'll have everything setup (on Win2K8 x64 + hyper V).

Thanks guys!

- Joined

- Apr 21, 2006

I've got the Abit IP35 Pro with the ICH9R chipset, and I want to set up my Matrix RAID as you guys do here, with the high performance RAID0 first partition and the lower performance RAID1 second partition.

Right now I'm running a RAID0 using the full space of my 2x Seagate 7200.11 500GB drives.

When I run HDTach, I get sequential read of no more than 250MB/s at the front, which then drops as you move out to the full 1TB size. I've done some cleanup on my system, and got it down to 33GB (this has my OS, apps and games).

I'm thinking of putting that into an 80-100GB RAID0 partition, then using the remaining space for RAID1 storage.

My concern is, why do you think I'm getting only 250MB/s max read speed, even at the front of the platter (with many others getting 450MB/s with other non-Raptor drives)? I thought the 32MB cache on my drives would help performance, but it looks like those 640GB WD drives are kicking my butt! Is my performance low for my RAID/drives?

I'm running ORTHOS right now to do a final 24hr stability test of my 514x8 OC (11.5hrs and counting!), so I can't post a screenshot at the moment, but I will when I can.

I know you guys need more info to be able to help out (or maybe my read speed is correct for my disks/RAID...?), so I'll tell you anything you need to know.

Thanks!

EDIT - also, I've got my 4GB swapfile sitting on another 500GB disk in my system.

1) Is it still faster to put that on another single disk instead of having it on the RAID0 slice?

2) If so, would it make sense to cut out a 4GB slice of that HD just for the pagefile, to make sure it is at the end of the platter?

3) Is a 4GB pagefile a good size w/ 4GB of RAM? I've read that with 4GB of RAM, you should actually make the pagefile smaller (though I've got the room to spare).

Thanks again!

Last edited:

- Joined

- Aug 29, 2002

Wow, what an impressive thread... Have been reading it for more than two days (lots of pages!).

Hello guys, I'll soon get a P45+ICH10R with 4 Barracuda 7200.11 (32meg cache) + Q6600.

My first intent was to create two RAID 5 volumes (because I thought I couldn't mix the RAID modes), but I'm clearly heading now with :

- One RAID0 partition of 100gig using the four drives

- One RAID5 partition using the remainder

I have few questions though:

1) What stripe size should I use for both partitions? It looks like 64KB is fine if you have a good CPU, is that right?

2) I don't really understand the benefits of RAID 10 over RAID 5. Is RAID 10 supposed to be faster at writes?

3) How does the performance of RAID 5 compare to RAID 0? I've seen a lot of benchmarks, but I would really like a "real world" (even if we're inside the matrix) feeling.

I didn't see ICH10R benchmarking results, so I'll post mine as soon as I'll have everything setup (on Win2K8 x64 + hyper V).

Thanks guys!

Hi guys

First up... Nockawa... A BIG Welcome to the forum. If you managed to read all the info in the thread... you've done well.

P45 rig... nice.

Re your questions...

1) All of the testing seems to indicate that the default sizes are the best sizes, unless you are doing something specific, like it's a Video Editing system or something, so go with the defaults.

2) Raid10 is a bit faster than Raid 5, in that it is actually Raid0 (across 2 drives) but those two drives are exactly mirrored by the other two.

3) Real world.. Raid0 beats Raid5 every time. Try copying a large file from one location to another, say from My Doc's to Desktop on the Raid0 volume, and then repeat the same process from somewhere on the Raid5 volume to somewhere else on the Raid5 volume... you'll see the difference.
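As a rough way to compare the three levels on paper, here is a Python sketch of the textbook trade-offs for a 4-drive array. The 500GB drive size is only an assumed example, the helper name `raid_summary` is made up, and the small-write I/O counts are the usual rule-of-thumb figures (RAID 5 has to read old data and old parity before writing new data and new parity), not measurements from any ICH controller.

```python
def raid_summary(level, drives, drive_gb):
    """Textbook usable space, guaranteed drive-failure tolerance, and
    disk I/Os per small random write for RAID 0, 5 and 10.

    Hypothetical helper for illustration; real-world results depend on the
    controller, drivers, cache settings and workload.
    """
    if level == 0:
        usable, tolerated, small_write_ios = drives * drive_gb, 0, 1
    elif level == 5:
        if drives < 3:
            raise ValueError("RAID 5 needs at least 3 drives")
        # One drive's worth of space goes to parity.
        usable, tolerated, small_write_ios = (drives - 1) * drive_gb, 1, 4
    elif level == 10:
        if drives < 4 or drives % 2 != 0:
            raise ValueError("RAID 10 needs an even number of drives, at least 4")
        # Half the space holds mirror copies; every write lands on two drives.
        usable, tolerated, small_write_ios = drives // 2 * drive_gb, 1, 2
    else:
        raise ValueError("only RAID 0, 5 and 10 are covered here")
    return {
        "usable_gb": usable,
        "guaranteed_failures_tolerated": tolerated,
        "ios_per_small_write": small_write_ios,
    }


if __name__ == "__main__":
    for level in (0, 5, 10):
        print("RAID", level, raid_summary(level, drives=4, drive_gb=500))
```

The write-cost column is the main reason RAID 5 tends to feel slower on writes than RAID 0 or RAID 10, while RAID 5 gives back more usable space than RAID 10 on the same drives.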

I think you're right about the ICH10R... no benchmarks yet. Several have used the new drivers and, from memory, they seem to have made a slight improvement on ICH9R, so yes please... benchies!

I've got the Abit IP35 Pro with the ICH9R chipset, and I want to set up my Matrix RAID as you guys do here, with the high performance RAID0 first partition and the lower performance RAID1 second partition.

Right now I'm running a RAID0 using the full space of my 2x Seagate 7200.11 500GB drives.

When I run HDTach, I get sequential read of no more than 250MB/s at the front, which then drops as you move out to the full 1TB size. I've done some cleanup on my system, and got it down to 33GB (this has my OS, apps and games).

I'm thinking of putting that into an 80-100GB RAID0 partition, then using the remaining space for RAID1 storage.

My concern is, why do you think I'm getting only 250MB/s max read speed, even at the front of the platter (with many others getting 450MB/s with other non-Raptor drives)? I thought the 32MB cache on my drives would help performance, but it looks like those 640GB WD drives are kicking my butt! Is my performance low for my RAID/drives?

I'm running ORTHOS right now to do a final 24hr stability test of my 514x8 OC (11.5hrs and counting!), so I can't post a screenshot at the moment, but I will when I can.

I know you guys need more info to be able to help out (or maybe my read speed is correct for my disks/RAID...?), so I'll tell you anything you need to know.

Thanks!

EDIT - also, I've got my 4GB swapfile sitting on another 500GB disk in my system.

1) Is it still faster to put that on another single disk instead of having it on the RAID0 slice?

2) If so, would it make sense to cut out a 4GB slice of that HD just for the pagefile, to make sure it is at the end of the platter?

3) Is a 4GB pagefile a good size w/ 4GB of RAM? I've read that with 4GB of RAM, you should actually make the pagefile smaller (though I've got the room to spare).

Thanks again!

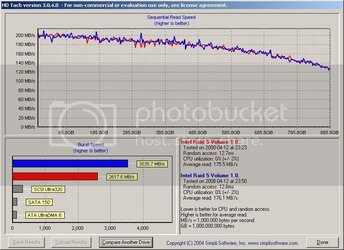

Re your 250MB/s max... I've two machines running single 500GB 32MB cache drives atm, so I'll do a bench on one of them and let you know, but I suspect you might be comparing yours unfairly/unequally.

+++edit... Just ran HDTach over my 2nd rig (normal boot with everything going, incl sidebar, Nortons, etc), Burst speed=189.6MB/s and Average Read=88.6MB/s ... a nice gentle decline from 115MB/s down to about 55MB/s.

I think your 250MB/s is just fine!
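The rough arithmetic behind that, as a quick Python sketch: it assumes RAID 0 sequential reads scale at best linearly with the number of drives, and that my single-drive figures are a fair stand-in for your drives, which is only an assumption since they come from a different rig.

```python
def ideal_raid0_rate(single_drive_mb_s, drives):
    """Best-case RAID 0 sequential rate: single-drive rate times drive count.

    Illustrative upper bound only; real arrays lose a little to overhead.
    """
    return single_drive_mb_s * drives


if __name__ == "__main__":
    # Single-drive HDTach figures quoted above: start and end of the platter.
    for label, rate in (("front of platter", 115.0), ("end of platter", 55.0)):
        print(label, ideal_raid0_rate(rate, drives=2), "MB/s for a 2-drive RAID 0")
```

So a 2-drive array topping out somewhere around 230 to 250 at the front of the platter is about what two of these drives should deliver.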

I actually let Windows manage my swapfile now... the difference seems to be negligible, and in fact I have noticed that (when I used to set it) whenever I had the swap file too big... it dragged Windows down in speed. I would maybe try reducing it to say 2GB, then 1GB, then 500MB, then let Windows manage it and see what the differences are... might surprise you.

B

Last edited:
