Upping the voltage may help you sustain the higher core clocks for a bit longer, but it's also going to generate heat faster. It's a double-edged sword. You have to find the balance between core clocks and temps, or at least the highest sustainable core clocks for the benchmark you're running. With Firestrike you'll be able to sustain the higher core clocks throughout the benchmark, because it's relatively short. Timespy, however, takes longer, and your clocks will drop by the end of the bench to account for the build-up of heat. Heaven and Valley are even longer, so expect quite a bit of drop-off by the time they're done running.

Very simply, the GPU core runs more efficiently while it's cool and needs less voltage to run a specific clock. As the core heats up it becomes less efficient and needs more voltage to sustain that clock, which in turn causes more heat, and at some point it'll downclock itself another step. So, by raising the voltage to its maximum available value, it'll be able to sustain the clocks a little longer even with the GPU heating up. But again, it will eventually get to the point where that clock needs more voltage than you can give it, and it'll downclock again.
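If it helps to see that feedback loop in numbers, here's a rough toy model. To be clear, every constant in it is made up for illustration (the step size, the voltage curve, the heating and cooling rates), and the function names are just mine. It's not measured from a real card and it's not how the boost algorithm is actually implemented. It only captures the idea above: the voltage a clock needs goes up with temperature, more voltage means more heat, and once the needed voltage passes the cap the card drops a step.

```python
# Toy model only: every constant below is invented for illustration,
# not measured from a real GPU.
STEP_MHZ = 13        # assumed size of one downclock step
AMBIENT_C = 30.0     # assumed starting / ambient temperature


def required_voltage(clock_mhz, temp_c):
    """Assumed curve: volts needed rise with both clock and core temperature."""
    return 0.80 + (clock_mhz - 1800) * 0.0008 + (temp_c - AMBIENT_C) * 0.004


def simulate(start_clock, v_cap, seconds):
    """Return one (second, clock_mhz, volts, temp_c) sample per second."""
    clock, temp = start_clock, AMBIENT_C
    samples = []
    for t in range(seconds):
        # Drop a step whenever this clock needs more volts than the cap allows.
        while required_voltage(clock, temp) > v_cap:
            clock -= STEP_MHZ
        volts = required_voltage(clock, temp)
        # Heating scales with V^2 * clock; cooling with distance from ambient.
        temp += 0.000675 * volts ** 2 * clock - 0.03 * (temp - AMBIENT_C)
        samples.append((t, clock, volts, temp))
    return samples


def first_drop(samples):
    """Second at which the clock first falls below its starting value, or None."""
    start = samples[0][1]
    for t, clock, _, _ in samples:
        if clock < start:
            return t
    return None
```

Running `simulate(2000, 1.0, 600)` against `simulate(2000, 1.093, 600)` in this toy, the higher cap holds the starting clock longer and settles at a higher clock, but it also ends up hotter, and it still drops steps eventually. A short run like `simulate(2000, 1.093, 5)` never drops at all, which is the Firestrike versus Heaven difference in a nutshell.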

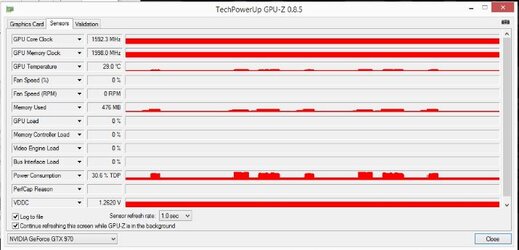

Watch the voltage and clocks the next time you run Heaven and you'll see what I mean.
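If you'd rather log it than eyeball it, something like the sketch below would do. It assumes an NVIDIA card with `nvidia-smi` on the path and polls the `clocks.gr` and `temperature.gpu` query fields. I'm not aware of a core voltage field in that query, so keep your usual monitoring tool open for the voltage. The helper names (`parse_sample`, `find_drops`, `poll`) are my own, not part of any tool.

```python
import subprocess
import time

# Graphics clock (MHz) and core temperature (C), one CSV line per GPU.
QUERY = ["nvidia-smi", "--query-gpu=clocks.gr,temperature.gpu",
         "--format=csv,noheader,nounits"]


def parse_sample(line):
    """Turn a line like '1987, 64' into (clock_mhz, temp_c)."""
    clock, temp = (int(field.strip()) for field in line.split(","))
    return clock, temp


def find_drops(samples):
    """Return (index, old_clock, new_clock, temp_c) for every downclock."""
    drops = []
    for i in range(1, len(samples)):
        prev_clock, _ = samples[i - 1]
        clock, temp = samples[i]
        if clock < prev_clock:
            drops.append((i, prev_clock, clock, temp))
    return drops


def poll(seconds, interval=1.0):
    """Sample the first GPU once per interval while the benchmark runs."""
    samples = []
    for _ in range(int(seconds / interval)):
        out = subprocess.run(QUERY, capture_output=True, text=True,
                             check=True).stdout
        samples.append(parse_sample(out.splitlines()[0]))
        time.sleep(interval)
    return samples
```

Start the benchmark, call `find_drops(poll(300))` in another window, and each entry shows the temperature at which the card gave up a step.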

I also have a couple of the 1080 FTWs. I've never had to disconnect the power from the GPU to get the switch to work. It makes sense, though. I have had it take a couple of power cycles before it took effect.