
FEATURED AMD ZEN Discussion (Previous Rumor Thread)

- Thread starter Johan45

- Start date

- Joined

- Jul 14, 2003

Considering that everything is moving to multithreading and 4 cores is already standard, I simply see no reason to push another 2-core CPU into the gamers/enthusiasts market. 4 cores is the minimum for a gaming PC, and it's what scales best with most games. 2 cores are much slower in many titles, with or without HT. HT adds 0-50% performance, but there are applications which perform worse with HT enabled (not many of them). I'd simply like to see 4, 4+HT, 6, 6+HT, 8, 8+HT, 12, 12+HT, 16 and 16+HT versions of ZEN on the desktop market... I somehow doubt we'll get anything above 8 cores, but I'd love to play with something like a 16c+HT ZEN if it performs as the leaks are saying.

Rumours also say that we will see ZEN in the middle of Dec... at the same time that it will be presented at CES in Jan. It's hard to say what is true, and whether we will see it in stores before the middle of Jan.

Unfortunately, games still aren't really taking advantage of more than 4 cores. It took them this long to really get around to 4 cores, so 6+ cores, with HT or not, probably won't see much gain. Look at the i5 vs i7 gaming comparisons and how they perform about the same in most games (at the same clock speed). As games take advantage of more and more cores, even the old FX CPUs seem to see an improvement. It's what they've been waiting for all this time, after all lol.
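To put a rough number on the diminishing returns described above, here's a minimal Python sketch using Amdahl's law (my choice of model, not something from this thread). The parallel fraction `P = 0.6` is a made-up figure for a hypothetical game engine, and the model ignores HT, clock speed, and memory bottlenecks, so treat it as an illustration only.

```python
# Amdahl's law: ideal speedup on n cores when a fraction p of the work
# can run in parallel and the remaining (1 - p) stays serial.
def amdahl_speedup(p: float, n: int) -> float:
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must be between 0 and 1")
    if n < 1:
        raise ValueError("n must be at least 1")
    return 1.0 / ((1.0 - p) + p / n)

# Hypothetical game engine where 60% of the per-frame work parallelizes.
P = 0.6
for cores in (1, 2, 4, 6, 8, 16):
    print(f"{cores:2d} cores: {amdahl_speedup(P, cores):.2f}x")
```

With these assumptions, going from 2 to 4 cores buys noticeably more than going from 4 to 8, and no core count can beat 1 / (1 - P).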

To those talking about history of CPUs and architectures, here's a bit of a secret:

There have been zero novel ideas since the AMD Athlon X2. That was when multi-core on a single die took off. Before that, NetBurst was a different concept compared to what others were doing. There was nothing new about NetBurst; it just happened to be very good at single-threaded delivery. Actually, the P4 era was the changeover point: back then software was more advanced than hardware, and now hardware is more advanced than software (consider how many years it took M$ to catch up in multi-threaded kernel capabilities and better rendering techniques thanks to DX12).

CPU architecture has stayed mostly the same all its life: a bit of an addition here, a removal there, and general cleanup here and there. The x86 architecture shows noticeable performance gains with every generation, but if you trace the progress of the architecture and compare, you'll notice that all the same parts have been there; they have just gotten better.

When will CPUs see a cataclysmic change? Around the time neural nets can be implemented alongside the x86 core.

- Joined

- Mar 7, 2008

How radical does something have to be to count as a novel idea? Take the X2 example above: was it that surprising? Multi-socket systems were around and very affordable at the time. Putting two cores together is a natural evolution, and we've been doing more of the same: moving the cache into the CPU, moving the memory controller into the CPU, moving the GPU into the CPU (you know what I mean), and more and more IO is getting moved into the CPU. SoCs are also widely available.

GPUs and "deep learning" seem to be a trending area at the moment, but are they that different from x86? Quantum computing would certainly rewrite the rulebook on a lot of things, but it is still out of reach.

Even sticking to conventional computers, I've wondered if CPUs themselves could benefit from going more async internally. That is, why fixed clocks? If a block can do something faster, let it. Taken to an extreme, the concept of a clock as we know it may need to be removed.

Maybe less of a forward-looking idea: analog computers are a real thing if you look far enough back in history. It doesn't have to be digital. How to make use of that in the modern world is another matter...

Neural Networks and Deep Learning are near one and the same; they both refer to the same technology. This type of architecture is radically different and novel (although not a new concept; it's been around since the 1950s). Hardware has gotten to the point that Neural Networks can be run in real time and used to analyze data in real time. I could talk for hours on this stuff, as I'm gearing up to do this at my job.

As for CPUs, the multi-core concept was novel because it had never been done before. It required a lot of new parts to be added to the x86 architecture, and involved a heavy amount of change in the materials world. SoCs have been around for a long time, and you could consider the IMC and GPU combination a bit novel, but GPUs are just a different type of CPU, so nothing really different.

Multi-core and multi-threaded CPUs are already async: no core relies on another, but the execution pipeline is synced. GPUs relied on all cores being in sync because the data given to them must be processed that way.

Digital is just really fast analog. And really, there is no such thing as a square wave once you go past a certain frequency. Most of the "square" waves I see are very much sinusoidal.
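A quick sketch of why fast "square" waves look sinusoidal: a square wave is a sum of odd harmonics (f, 3f, 5f, ...), and if the signal path only passes the fundamental, a sine is all that's left. The 1 GHz clock and the brick-wall bandwidth cutoff below are assumptions for illustration; real traces and probes roll off gradually, and the function names are hypothetical.

```python
import math

def odd_harmonics_passed(clock_hz: float, bandwidth_hz: float) -> int:
    """Count odd harmonics (f, 3f, 5f, ...) at or below an assumed brick-wall bandwidth."""
    highest = math.floor(bandwidth_hz / clock_hz)  # highest harmonic number that fits
    return (highest + 1) // 2                      # how many of 1..highest are odd

def square_wave(t: float, clock_hz: float, n_harmonics: int) -> float:
    """Band-limited unit square wave: (4/pi) * sum over odd k of sin(2*pi*k*f*t) / k."""
    total = 0.0
    for i in range(n_harmonics):
        k = 2 * i + 1  # only odd harmonics make up a square wave
        total += math.sin(2 * math.pi * k * clock_hz * t) / k
    return 4 / math.pi * total

CLOCK = 1e9  # hypothetical 1 GHz signal
for bw in (1.5e9, 10e9):
    n = odd_harmonics_passed(CLOCK, bw)
    # Sample at a quarter period, where an ideal square wave sits at +1.
    value = square_wave(0.25 / CLOCK, CLOCK, n)
    print(f"bandwidth {bw / 1e9:.1f} GHz: {n} harmonic(s), value at T/4 = {value:.3f}")
```

With only the fundamental passed, the output is exactly a sine of amplitude 4/pi; each extra odd harmonic pulls the shape closer to a flat-topped square.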

- Joined

- Mar 7, 2008

I'm not into the details of what current GPU-driven deep learning solutions entail. From my limited understanding, the GPU implementation seems to be all about low-precision FLOPS, which appears to be a brute-force approach as opposed to a more direct implementation in hardware.

In CPUs, I'm thinking of going async at a much finer level; taken to an extreme, there would be no reference clock at all. That would be a radical divergence from what we have now.

Of course, when we talk about digital or analog computing, we're talking about data representation as opposed to the underlying physics. I work in the electronics business as my day job, and it still amazes me that the high-speed stuff works at all... I work at the other end of the frequency scale.

Non-synchronous pipelines are a thing in CPU research; however, it is a very hard concept to work with. Research in that field has been long-running with little progress. I would have to dig up some notes to see what's going on in that field, as I haven't looked at it in a long time.

GPUs are used for NNs because of their ability to do matrix multiplication at very high speed. It's one of the strengths of GPUs, and one of the major strengths of FPGAs as well (if you're interested in NNs, look at the progress in the FPGA world; FPGAs are better suited to NNs than GPU/CPU GPGPU combinations).

Ahh, so by analog you mean having a value between 0 and 1. This is possible with NNs, as they are designed around the sigmoid function, which gives a decimal value between 0 and 1 as its output. I like to call NNs that are attached to CPUs the probability core.
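The two ideas in the posts above, matrix multiplication and the sigmoid, meet in a single fully connected layer. Here's a minimal pure-Python sketch with made-up weights; the function names are hypothetical, and sigmoid is just one common activation function (ReLU and others are also widely used). On a GPU or FPGA, the `matvec` step is the part that gets massively parallelized.

```python
import math
from typing import List

Matrix = List[List[float]]

def matvec(weights: Matrix, x: List[float]) -> List[float]:
    """Multiply a weight matrix (one row per neuron) by an input vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]

def sigmoid(z: float) -> float:
    """Logistic sigmoid, mathematically in (0, 1); written to avoid exp() overflow."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)  # z < 0, so this stays small and cannot overflow
    return e / (1.0 + e)

def dense_layer(weights: Matrix, bias: List[float], x: List[float]) -> List[float]:
    """One fully connected layer: sigmoid(W @ x + b), applied element-wise."""
    return [sigmoid(z + b) for z, b in zip(matvec(weights, x), bias)]

# Hypothetical 3-input, 2-neuron layer with made-up weights and biases.
W = [[0.5, -1.0, 0.25],
     [2.0, 0.0, -0.5]]
b = [0.1, -0.2]
print(dense_layer(W, b, [1.0, 0.5, 2.0]))
```

Each output is a value between 0 and 1, which is what lets it be read as a probability-like score.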

- Joined

- Dec 18, 2000

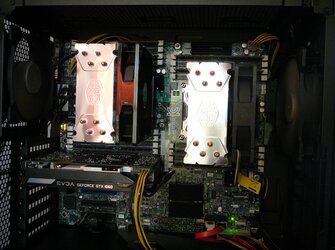

Well, the temporary dual E5-2670 rig is up and running. The photo isn't the best, but if I use the flash it's even worse. Because the PCIe x16 slot is positioned directly in front of the CPU1 memory, only a mini GPU will fit (roughly 7.5" max). But if I keep it for long, the best I would put in it would be a GTX 1070 anyway, so no problem. I have my mini GTX 1060 sitting in there to get it up and running. Had Zen been out, I would have built one of those, but it isn't.

Here's what the motherboard with CPUs and memory looked like before going in the case.

- Joined

- Dec 18, 2000

Could the lower 8x slot be used instead if you wanted to go full length?

Yes, you could use both of the blue slots. I just prefer to run x16, and I already had the 6.7" EVGA mini 1060, so I just slapped it in. I've been running the AIDA64 stress test for the past few hours, which is also using 95% of the 64 GB of memory, and the CPUs are sitting at around 56C @ 3 GHz at a constant 100% load. The Carbide 400C case I picked up for $40 at my local Micro Center looks nice enough and has a full window on the side panel, but only 3 of the motherboard mounting holes matched up, so I had to do some workarounds to get it secured. I'd been using a Supermicro 665W ATX server PSU for my X79 and X99 builds, so I already had that lying around. The Intel S2600CP2J mobo requires more than 0.3A to be available on the -12V rail or it won't boot, and the Supermicro PSU is spec'd at 0.5A, so I was good to go. For cooling I'm using my trusty old Hyper 212 Plus along with a Hyper 212 EVO I picked up for $20 on eBay. A test run of Cinebench R15 scored 2003.

- Joined

- Dec 18, 2000

Just ran an old golf game that uses a lot of CPU resources. I only got the missing LGA 2011 parts for the Hyper 212 EVO in the mail today, so it's only been running a few hours, mostly running the AIDA64 System Stress Test. I installed an old 80 GB Intel SSD that was preloaded with Windows 7 Pro to get it going quickly. It booted right up even though the last system I used it in was an AMD A10 7860K/ASRock 970 setup I played with for a few days last month. After booting into Windows, I removed the AMD drivers, installed the nVidia ones, and it was up and running fine.

Quote Originally Posted by Woomack

Does anyone have links to benchmarks where HT adds 50% performance?

I have no test results, but I was comparing some applications on virtual machines, where all threads are visible as cores, and performance was about 50% higher after enabling HT. I remember there were some benchmarks showing it, but I don't remember which. In most applications you don't see that much, and I remember there were some issues with HT on older systems where performance was even worse after enabling it. I guess newer OSes fixed that, as I haven't heard about these issues for quite a while.

In most new games I see a big difference between 2 and 4 cores, but not so much above that. However, most games that I play can use more than 4 cores, and in some, like Civilization, you can see a difference beyond 4 cores. I assume it will only move to more threads, as the most profitable consoles have 6+ cores right now, and most games are designed for consoles and later improved for PC with better textures or other features. After all, modern consoles are standard PCs with a different OS.

- Joined

- Mar 7, 2008

The only case I can think of right now where HT gave a 50% boost was an old distributed computing project called Life Mapper. It doesn't exist any more. I've never seen more than a 50% boost, so I wonder if that is some practical limit.

For modern "HT friendly" software I typically see a 30-40% boost.

- Joined

- Jul 31, 2004

Getting async multithreaded jobs right in software is already hard, and you want it implemented in a general-purpose CPU? Might take a while.

Looks like a perfect spot for an R9 Nano.

Spare resources for HT will be between 0 and just under 50 percent. It will never be greater than 50%, because then your "normal" and "HT" threads would have semantically switched places. I'm too lazy to write out the math.

- Joined

- Mar 7, 2008

That's not what I was saying, as this could help even a single thread. Right now, functions have to be pipelined and move through that pipeline according to the clock, regardless of how long each step actually takes. The pipeline speed is then constrained by the slowest part. If each block is not tied to a common clock and simply passes data on to the next block when ready, time could be saved. Scheduling will probably be scary unless new thinking is applied to make better use of this. This is not new technology; it just isn't used in CPUs as we know them. There are some tricks using clock multiples which arguably do something kind of along those lines, but this would turn it up to 11.
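A toy model of the point above, assuming fixed per-stage delays (the numbers and function names are made up for illustration): in a clocked pipeline every stage gets one full clock period, sized to the slowest stage, while an idealized self-timed pipeline lets each stage hand off as soon as it's done. This only models the latency of a single item and ignores the handshake overhead that real asynchronous designs pay.

```python
from typing import List

def clocked_latency(stage_delays_ns: List[float]) -> float:
    """Synchronous pipeline: the clock period must cover the slowest stage,
    and one item spends one period in every stage."""
    if not stage_delays_ns:
        raise ValueError("need at least one stage")
    period = max(stage_delays_ns)
    return period * len(stage_delays_ns)

def self_timed_latency(stage_delays_ns: List[float]) -> float:
    """Idealized self-timed (clockless) pipeline: each stage passes data on as
    soon as it finishes, so one item's latency is just the sum of the delays.
    Handshake overhead is deliberately ignored here."""
    if not stage_delays_ns:
        raise ValueError("need at least one stage")
    return sum(stage_delays_ns)

stages = [0.20, 0.35, 0.15, 0.30]  # hypothetical per-stage delays in ns
print(f"clocked: {clocked_latency(stages):.2f} ns, self-timed: {self_timed_latency(stages):.2f} ns")
```

The gap between the two numbers is the time the clocked design spends waiting on stages that finished early.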

Again, we're not thinking of the same thing here. Say you have one core, which does up to 100% of the work by itself. You then add HT to that core. How much work can you do then? I'm saying that in the best case I've seen, you gain 50%, that is, you do 150% of the work compared to without HT. At least, that is the maximum I've seen so far. I would be interested if anyone knows of anything that gets more than a 50% boost.
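The "150% of the work" framing above boils down to a simple ratio. A minimal sketch, assuming you have a throughput-style score where higher is better (e.g. Cinebench points) measured with HT off and on; the function name is hypothetical.

```python
def ht_gain_percent(score_ht_off: float, score_ht_on: float) -> float:
    """Percent extra throughput from enabling HT on the same cores.

    Doing 150 units of work instead of 100 is a 50% gain.
    """
    if score_ht_off <= 0:
        raise ValueError("baseline score must be positive")
    return (score_ht_on / score_ht_off - 1.0) * 100.0

print(ht_gain_percent(100.0, 150.0))  # 50.0
```

For elapsed times, where lower is better, pass the times in the opposite order: `ht_gain_percent(time_ht_on, time_ht_off)`.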
