
4070 SUPER

- Thread starter Flamethrower1972

- Start date

- Joined

- Nov 14, 2002

- Location

- Nashville

> 4070 super vs 4070 ti I will take the 4070ti.

I will still take nearly any AMD card at the same price point, as you get more VRAM and more perf than the competing Nvidia card. The only not totally crap card IMO is the 4080 Super for $1000; it's only $100 more than the XTX that it loses to.

> more vram

Honestly, who cares man?

With a midrange card you will usually run out of usable horsepower before VRAM, am I wrong?

> Honestly, who cares man?
>
> With a midrange card you will usually run out of usable horsepower before VRAM, am I wrong?

You are pretty much right. With some exceptions, most games at 4K use no more than 6-10GB VRAM. Exceptions won't run well at max details on mid-shelf cards, so you need lower details or cards that come with 16GB+ anyway.

As I said many times, RTX4070 is perfect for me, just because I can play everything at 1440p and max details, and it uses 80-100W less than anything close in performance from AMD or older Nvidia gen. I guess I'm one of the few people who defend RTX4070, but I feel like most who complain about it don't even own this card.

- Joined

- Mar 7, 2008

> I guess I'm one of the few people who defend RTX4070, but I feel like most who complain about it don't even own this card.

I think I bought mine slightly before you did. My reasons are slightly different, but it was, and still will be, the best option until Super comes along. For me, it was the 3080 I couldn't buy last gen. It performs similarly to a 3080, has more VRAM, and gets the latest hardware and features, all at a lower price. Lower power consumption was a bonus but not a deciding factor for me. At the time, the 7900XT was the nearest AMD offering in RT performance but cost closer to the faster 4070 Ti. I do weigh RT performance more heavily because it is where we need all the power we can get. More raster perf is lower value for me because it was already at a good enough level. AMD are winning the wrong race for me.

When it comes out, I think the upper mainstream sweet spot will be the 4070 Super. No point me updating from a 4070 and I'll be seeing what jump we get from 50 series or RDNA4 before deciding.

> I guess I'm one of the few people who defend RTX4070, but I feel like most who complain about it don't even own this card.

I love my 4070Ti; for me it is an awesome card. I defend mine all the time to people who don't own one lol. I got really lucky with mine, and got it on sale last summer for less than it retails for now, and hundreds less than anything the competition had at the time... or else I might have been swayed into trying one.

- Joined

- Jan 4, 2024

- Location

- Indiana

- Thread Starter

- #27

I have watched YouTube vids that show the 4070 Super vs the 4070 Ti in FPS, and to me the price gap is worth it. Now if the prices go down on the 4070 Ti I will get that instead.

Post magically merged:

> I will still take nearly any AMD card in the same price point as you get more vram, and more perf than the competing nvidia card. The only not totally crap card IMO is the 4080 Super for $1000, its only $100 more than the XTX that it loses to.

I do not like AMD. I have had their cards in the past and to me they sucked!!!! Another Massive Disaster.

- Joined

- Nov 14, 2002

- Location

- Nashville

> I love my 4070Ti, for me it is an awesome card. I defend mine all the time to people who don't own one lol. I got really lucky with mine, and got it on sale last summer for less than it retails for now, and hundreds less than anything the competition had at the time.. or else I might have been swayed into trying one.

100%. I am not suggesting that anyone WITH a 4070 made a bad call, but if someone was buying today, next week, or even this month, I would suggest going AMD Radeon. I run a mix of GPUs in my home/lab/gaming and the AMD cards from the RX 5700 on have all been rock solid, while the Nvidia cards outside of my A4000 have been temperamental at best.

Granted, my house is highly Linux-biased, so having Mesa support directly from AMD makes a big difference vs. the closed-source additions Nvidia makes to the kernel and distributes. I think we're somewhere around 2/7 Windows/Linux.

As for the "who cares" about VRAM: everyone does. Games are designed for consoles first, and they have 16GB of RAM, so every game developed since the PS5 and Series X came out is going to have to cut down textures and reduce visual quality to fit into a card with less than 16GB. 12GB is now at or below entry level since AMD announced the 7600 XT with 16GB for $239 last week.

So unless all you need is to light up a monitor, buying anything with less than 16GB is e-waste. If you have to get a cheap card, you're better off using the IGP on the new AMD 8000 series or any Intel non-F chip from the last 2 years.

Best & Worst GPUs of 2023 for Gaming: $100 to $2000 Video Cards | GamersNexus

GPUs Best & Worst GPUs of 2023 for Gaming: $100 to $2000 Video Cards November 23, 2023 Last Updated: 2023-12-03 Our picks for the best GPUs for all price brackets at the end of 2023. The Highlights: Intel is holding its own in the entry-level and value categories. AMD looks best in the...

Anyone getting Nvidia is going to be happy; they are good cards and I'm not saying that they are bad, I'm just saying they are currently not as good a value if buying new. Dollar for dollar AMD offers more performance and more RAM; it's simply fact and it's shown in all the testing. Now if the tradeoffs of higher price and less VRAM are worth it for your games, because either they support DLSS and RTX or are co-developed with Nvidia, then hell yeah it's probably worth paying a slight premium, because in that specific use case AMD would be a worse value.

- Joined

- Mar 7, 2008

> As for the "who cares" about VRAM everyone does, games are designed for consoles first, and they have 16gb of RAM so every game being developed since the PS5 and Series X came out is going to have to cut down textures and reduce visual quality to fit into a card with less than 16gb. 12gb is now at or below entry level since AMD announced the 7600 XT with 16gb for $239 last week.

Consoles don't work like that. XSX/PS5 have 16GB of ram total, shared between OS, game code and graphics data. They have to decide how to divide that 16GB between all three use cases. XSX has a reported 13.5GB available to the game after the OS. I haven't located claims for the PS5 but it is likely similar. Then you have the XSS. That has 10GB of total ram. If games have to work on that too, it lowers the bar considerably. I actually wish it didn't exist as I feel it is holding back PC gaming because game devs have to target too low if they want to release on XB.

PCs work differently in that code and graphics data are stored in different pools. A typical gaming system has had 16GB or more of system ram for a long time and, depending on the GPU, 8GB or more of VRAM, assuming a mid-range or higher gaming system. If a 16GB console allocates more than 12GB to graphics, it would have less than 2GB for code. 12GB GPUs have no problem matching or beating console performance at the same quality, and that will likely remain the case for the current generation. If you look at actual direct testing between console games and PC releases of the same game, console releases tend to be comparable to PC "low" to "medium" settings more often than not. They simply don't have the power to provide higher quality and maintain framerate at the same time. That will likely be a problem for the 7600XT too.
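To put numbers on that console budget, here is a minimal Python sketch. It uses only the figures quoted in this post (16GB total, a reported 13.5GB available to games on XSX); the function name `remaining_for_code` and the sample graphics budgets of 8, 10 and 12GB are illustrative assumptions, not anything the consoles expose.

```python
# Figures quoted in the post; the graphics/code split below is illustrative only.
TOTAL_RAM_GB = 16.0            # XSX/PS5 total shared memory
XSX_GAME_AVAILABLE_GB = 13.5   # reported amount left for the game on XSX after the OS


def remaining_for_code(game_available_gb: float, graphics_gb: float) -> float:
    """Return the memory left for game code/data once graphics takes its share."""
    if graphics_gb > game_available_gb:
        raise ValueError("graphics budget exceeds memory available to the game")
    return game_available_gb - graphics_gb


if __name__ == "__main__":
    # Hypothetical graphics budgets, chosen to bracket common VRAM sizes.
    for graphics_gb in (8.0, 10.0, 12.0):
        left = remaining_for_code(XSX_GAME_AVAILABLE_GB, graphics_gb)
        print(f"graphics {graphics_gb:.1f} GB -> {left:.1f} GB left for code")
```

With 12GB handed to graphics, the XSX figure leaves 1.5GB for everything else, which is the "less than 2GB" case described above.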

The 7600XT pricing above has digits swapped. It's $329 according to AMD's slide. The $60 over the 7600 8GB gets you the extra VRAM and marginally higher clocks at higher total power consumption. To me it sounds like the 4060 Ti 8GB vs 16GB question all over again. It will be interesting to see how many actually buy it when it gets released. It might have more value for non-gaming uses, but I think a low budget builder will not realise the potential from 16GB so will tend towards the 8GB model.
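The pricing arithmetic above can be sketched as follows. This is a rough Python sketch, assuming the $329 and $60 figures are right; the helper names are hypothetical, and the per-GB number is an upper bound since the premium also buys the slightly higher clocks.

```python
XT_PRICE_USD = 329        # 7600 XT price per the AMD slide cited above
PREMIUM_USD = 60          # stated premium over the 7600 8GB
EXTRA_VRAM_GB = 16 - 8    # additional VRAM on the XT model


def implied_base_price(xt_price: int, premium: int) -> int:
    """Price of the 8GB card implied by the XT price and the stated premium."""
    return xt_price - premium


def premium_per_extra_gb(premium: int, extra_gb: int) -> float:
    """Upper bound on what each extra GB of VRAM costs the buyer."""
    return premium / extra_gb


def is_digit_swap(a: int, b: int) -> bool:
    """True if two different prices use exactly the same digits."""
    return a != b and sorted(str(a)) == sorted(str(b))


if __name__ == "__main__":
    print(implied_base_price(XT_PRICE_USD, PREMIUM_USD))
    print(premium_per_extra_gb(PREMIUM_USD, EXTRA_VRAM_GB))
    print(is_digit_swap(239, XT_PRICE_USD))
```

On those assumptions the 8GB card works out to $269 and the extra memory to at most $7.50 per GB.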

> Dollar for Dollar AMD offers more performance, and more RAM, its simply fact and its shown in all the testing. Now if the tradeoffs of higher price, and less vram are worth it for your games because either they support DLSS and RTX or are co-developed with Nvidia then hell yeah its probably worth paying a slight premium because in that specific use case AMD would be a worse value.

AMD is the niche use case in PC gaming, not Nvidia. Based on latest Steam Hardware Survey (Dec. 2023) all AMD is around 16%. RTX GPUs by themselves make up over 45%. I wish I could give a better breakdown but they don't list models below 0.15% share and the only current gen AMD GPU that makes the cut is the 7900 XTX. Share of all other models is insignificant.

AMD only appear to be ahead on performance if you focus on raster games, but fall behind with raytracing which isn't going away. RT is being used in more games and some console games have started using RT as standard with no pure raster path. Because of the low performance bar AMD have set on consoles, they will remain usable with RT but for the premium experience Nvidia is the way to go. In a similar way you might value VRAM, other non-game performance features are just more usable on Nvidia than AMD and people do value them. You might pay less for AMD, but you get less overall too. If it is sufficient for your needs, great. Enjoy.

- Joined

- Aug 14, 2014

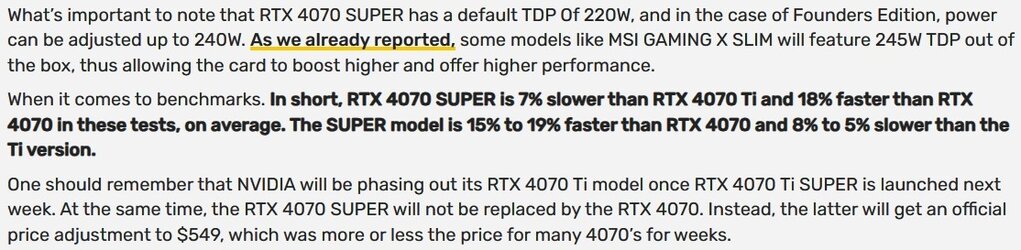

Benchmarks

videocardz.com


NVIDIA GeForce RTX 4070 SUPER 3DMark leak shows 18% gain over RTX 4070 - VideoCardz.com

NVIDIA RTX 4070 SUPER 3DMark test leak We have a very first look at the first SUPER GPU of this generation. Tomorrow marks the end of the embargo for NVIDIA’s RTX 4070 SUPER reviews (at least some of them). This card is powered by the AD104 GPU featuring 7168 CUDA cores, nearly the full...

- Joined

- Aug 14, 2014

Waifu cards incoming, 4070s, 4070tis and 4080s, 4090 deluxe edition sometime after ⇾ https://www.techpowerup.com/317882/yeston-previews-sakura-rtx-40-super-gpus-with-white-pcb-design

> I think I'm just too old to get into anime... Missed the boat as a kid (except for perhaps, Voltron? Wheeled Warriors?). Not my cup of tea.

I am with you 100%

> I am with you 100%

I like the white... but the anime theme, with heaps of cleavage or not, I just can't get behind. I guess a 47 y.o US male isn't the demographic they are going for, LOL.

- Joined

- Aug 14, 2014

- Joined

- Nov 14, 2002

- Location

- Nashville

> Benchmarks

The efficiency wins are one of the real arguments for the new cards; Nvidia is definitely driving more frames per watt with this generation.

- Joined

- Mar 7, 2008

Nvidia GeForce RTX 4070 Super review: more frames for less money

Nvidia's RTX 4070 Super gets the full Digital Foundry review treatment, looking at performance, power efficiency, RT, u…

- Joined

- Nov 14, 2002

- Location

- Nashville

> Skimming this write up. In RT games, more often than not it sends the 7900XT packing. In raster AMD is more competitive, with the 7800XT giving a good fight at a lower price point, assuming you don't find value in NV's other features.
>
> Nvidia GeForce RTX 4070 Super review: more frames for less money (www.eurogamer.net)

I think one of my biggest hangups is that none of the features are supported via Wayland. No ray tracing, no DLSS, no HDR, nada. So since Nvidia is forcing me to pick between raster performance and raster performance, AMD is the leader at each price point.


- Joined

- Mar 7, 2008

> I think that is one of my biggest hangups is none of the features are supported via wayland. No Ray Tracing, no DLSS, no HDR, nada so since Nvidia is forcing me to pick between raster performance and raster performance AMD is the leader at each price point.

I had to look up what that even was. Linux thing. SteamOS doesn't have that problem, does it? So maybe it matters in a tiny niche, but it's totally irrelevant for the overwhelming majority.
