We are not, lol. The thread is about a 4070 Super, lol. So let's be clear... Linux on these items is your choice, correct?

The phones come with Android or iOS. Tablets too (or Windows). No clue what touchscreen device you mean, and the Pi is a Pi. It's about the only thing you listed that's native to Linux. Neither IoT nor those devices will use a gaming graphics card, right?

As this is a thread about a desktop gaming graphics card, I find the merits of your talking points largely irrelevant within that scope as well. Straw man argument, sorry.

I don't think it's a straw man, but given that you and I have different views on what counts as an influencing data point, I can see why you think this way.

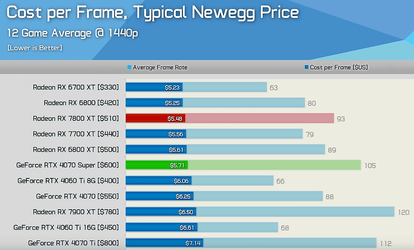

I am dismissing all of Nvidia's features because they refuse to make them available to me, so I am judging them only on what they do give me, which is raster performance. Dollar for dollar, AMD walks away with the win against Nvidia at every price point for what I can buy and use today.

You are counting RT/DLSS because these features are available to you, and Nvidia's advantage over AMD with them enabled is clear. If you are looking for the most RT performance per dollar, Nvidia wins; if you are looking for the most performance per watt, Nvidia wins.

So I'm not really arguing that people should use Linux; I'm using it to define and defend why I don't/can't value RT and DLSS, which is why they are not factors in my decision-making process.

I will also argue that FSR is the superior technology because it runs on both AMD and Nvidia cards, runs on both Windows and Linux, can be implemented at lower cost than DLSS, and can be turned on for games that don't even support it, with only minimal quality loss in things like menus and HUDs. DLSS is often only added when Nvidia specifically pays for it to be added to a game. I would also prefer to improve quality in other ways rather than by upscaling, but again, that is just me.

Again, I don't think that people who have Nvidia cards made bad choices, nor do I think people should not buy them. I simply think that handing the crown to overpriced midrange cards that are/have been VRAM-limited, based on RT performance alone, does not serve everyone well. We need to present the numbers fairly and then weigh them based on each person's specific requirements.

Raster is, IMO, the baseline since it applies to all games across all operating systems. From there you can add value if your specific set of games supports RT or DLSS, and you can add value if your power is expensive and you need efficiency over raw performance. I add further value based on operating system support, but again, that is specific to me, and to everyone who owns a Series X or PS5.