If the benchmark works fine, then everything else isn't important.

I would play it if it was a new Fallout.

That's literally, totally, and COMPLETELY wrong.

Of COURSE the Benchmark works! That's what it's designed to do... CD Projekt Red wouldn't have lost something like 300 million dollars, or whatever it was, if the actual GAME had functioned as well as the Benchmark.

So I think some of that "everything else" might be important.

No. I thought I was clear about that, lol. I wasn't talking about the experience of playing the game(s), but about how people read, interpret, and present information to others. You don't need to play the game to know whether something got 'stomped' (or...didn't), for example.

Let's leave this thread for actually discussing the game. My bad, folks. I may start a thread to crowdsource some info at a later time.

Actually discussing... the game... that you haven't played? I mean the irony here is...

ANYWAY!

I have actually played the game...

I'd have to look back at my earlier posts to see if I was still on the 2060 Super when I had the lag problem in that one major battle. I was constantly falling through the floor, and the "boss" of that battle kept lagging in and out of my sight.

I'm curious whether, as Kenrou suggested, my old, terrible Samsung QVO was the cause of that lag, or maybe the problems I was having with my power supply back then.

If I remember correctly, since then I have replaced the Samsung garbage with a brand new M.2 drive, the old 550 watt power supply with an XFX 850 watt one (or 750... I forget...), and of course the 2060 Super with the 4060.

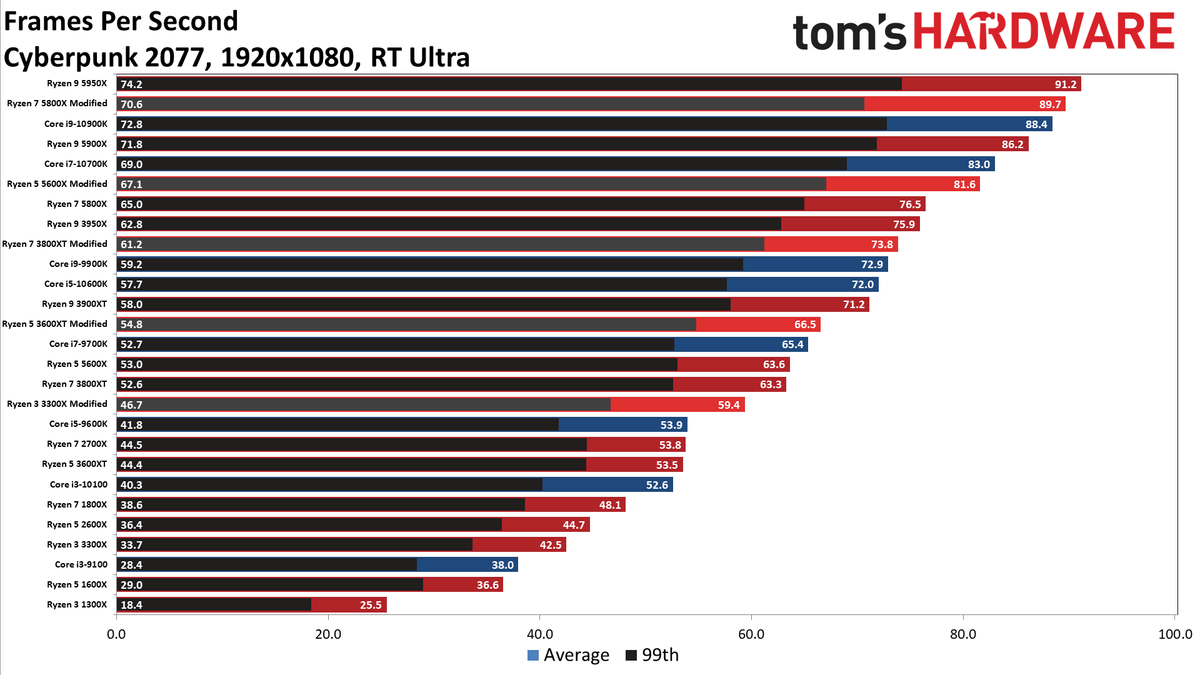

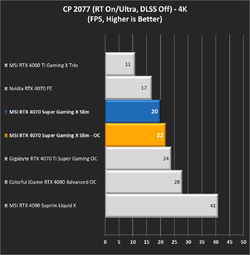

Your previous theory was that, somehow, my "low core count" was hurting me in this game (though, we agreed, likely not at 4K).

I guess the only way to know for sure would be to run that mission again and see what happens.

My day is already kinda weird... so maybe I'll do that right now.