- Joined: Jun 13, 2003
- Location: Lebanon, PA

Yeppir, the omega drivers are great AND the Sapphire runs at 1000 core, 1300 memory. The 970 IS faster but the 290 is no slouch.

Welcome to Overclockers Forums!

Why are they wasting resources on those? They aren't humorous or entertaining. Just keep knocking it out of the park in price-to-performance and delivering on specs, and leave the comedy to non-engineers hehe

So the R9 3xx has no chance to compete with the 9xx series from Nvidia?

I thought it was both humorous AND entertaining xD

That isn't what the issue is (instability over 3.5GB). It's hitching because it's writing out to the slower part of the RAM.

I did giggle. That said, I wish they would spend more time on their GPUs and less time on marketing. Nobody likes a person who talks trash and can't back it up... TrueAudio... Mantle... We've been waiting for adoption, and it hasn't been fast.

Have been testing a lot now, but it's hard to reproduce the issue. That means it may have an issue at 3.5 GB+, but if so, it is acting unstable, and I guess it could be fixed driver-side. The drivers are probably still on the beta side and not fully mature. Especially with some memory OC and improved drivers, I guess it may work properly in most games even above 3.5 GB, but there can be exceptions, who knows.

Doesn't change the fact that Nvidia simply didn't spell out the truth for many months in a row, but honestly... I guess if they had been fully open and provided the correct specs from day 1, telling everyone how it works and so on, only a few people would have had issues. It's really the dirty, dishonest approach that made many users upset, and I fully understand that.

Performance-wise, both parties have good stuff. AMD is going the "volcano way", and they are perfectly honest about it because it's even in the name: "Volcanic Islands". Nvidia goes the cold way... unfortunately even cold "at heart", and that's not going to work well in marketing terms. They have to take customers seriously, tell them the full story, simply not make fools out of them, and all may be fine.

Considering the crazy "power usage" of many AMD cards: I can't make a blanket statement, because in my mind high-end, super-performance hardware nowadays is generally far more power-hungry and in need of far more cooling than the stuff from 10+ years ago. So that has "improved" at every company. It's true that AMD is currently the "worst case", but for anyone who is truly serious about a "green approach" and has a lot of high-end hardware whose high power consumption can't be avoided: high performance = high power, that's simply the rule.

Anyone who still takes "green specs" seriously may be able to find certain solutions, and I was able to find some. All my high-end hardware runs right below the roof of the house, which means I can use the heat very effectively as a "room heater" in winter and generally whenever it's cold. In summer it helps keep the room drier, so I can dry my clothes far more effectively and efficiently without energy hogs such as tumble dryers or a dehumidifier; I have no need for such devices because I simply use my "hardware room". So a lot of that energy isn't truly wasted at all and can be used for many other things, not only "TFLOP performance". The energy affects the room the way I want it to, so I gain in many respects and waste less.

To me it's critical that the room is warm and especially low in humidity, because I have tons of tea stored in it, and if mold gets into the tea... it could destroy a huge load of very expensive tea. The hardware is a very effective device for providing the right "climate" to keep those sensitive goods safe. So, nope, Ninjacore, I'm not keeping anything in the basement except my bathroom... and I hope I've now explained accurately why that isn't the case.

Sitting here waiting on all those returned GTX 970's in Amazon Warehouse and newegg openbox.

Looking for a pair of EVGA GTX 970 SSC's....

*twiddles thumbs*

They directly compete. It's just a different way of doing things. Right now you can buy a card in the 970 that beats the 290X in many cases while using significantly less energy and generating a ton less heat. But at most you are talking handfuls of FPS in either direction. So you either want less heat and power usage or you don't, for whatever reason.

That used to be the 'space race' between these two companies. Things were getting smaller and they were both trying to squeeze the most they could while keeping power and heat down. AMD kind of took its eye off the ball when they shifted to consoles and simply went brute force with the last generation of cards they released.

There is nothing subtle about the 290/X. Big RAM with a big 512-bit memory bus. Nvidia's 256-bit answer was far more refined and actually advances things with new compression techniques and other goodies. Some are even speculating that the 970's offloading of less intensive tasks to that 512MB of slower VRAM is the future. It isn't the first time Nvidia has done it, and while they cannot do anything hardware-side to 'fix' the 970, they can conceivably do some things with drivers to better manage what gets offloaded to the slower VRAM.

That was in their first official statement. Followed by a retraction to cover their butts if they can't deliver.

The GPU market is as much a two-party system as the US political system is. We need good reasons to buy AMD as much as an old-school conservative like myself needs a good reason to vote Republican again. I haven't seen any in either case for a while now.

The 970 thing is still not a great reason. If money were no object, you'd simply toss more money Nvidia's way and grab a 980. That's not a great position for AMD, or for the consumer.

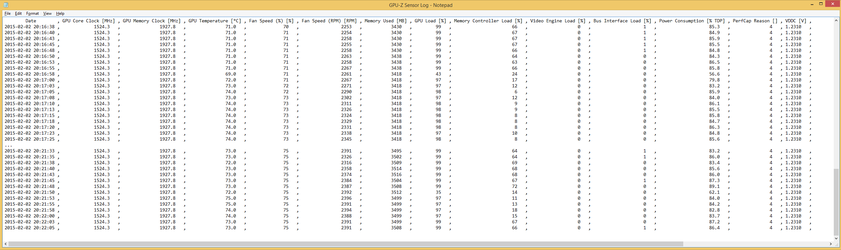

Also should note that this was on a Z97 board with a 4790K running at 4.4GHz, with 8GB of RAM at 2400 @ 10-12-12-31.

Rather good explanation:

I really think that because 4 GB is an important marketing factor, they surely tried to hide the 3.5 GB issue. They sold over 2 million units, so the 970 was a big success, and it would clearly have been less of a success had the full spec been revealed.