GTX470 and GTX480 Clocks ?

- Thread starter JJG

- Start date

- Thread Starter

- #22

lol I guess it doesn't say whether it's a good or bad announcement

Big announcement:

"We're canceling fermi but the next one is EVEN BETTER and will be released in 3Q 2010! So who cares? It'll be awesome!"

- Joined

- Jul 3, 2006

- Location

- UK

As I've said myself.. It's "potentially" and "if they make target clocks with the full 512 SPs."

I stand by the guesswork. A GTX 480 - assuming they can make clocks, and assuming all 512 shaders are working - will perform close to a 5970. Whether Nvidia can actually produce the cards is another matter.

Yes, but what you're doing is providing a guess for the maximum theoretical performance and insinuating that will be its practical performance.

If that were how it worked, the 4870X2 would be faster than the GTX295 in everything, going by raw theoretical GFLOPS.

The guesswork is a bit too sensationalist. Let me put it to you in another way:

"FERMI could be so fast that ATI will only be able to compete by releasing the 6870".

Now, even though ATI will be bringing out the "Northern Islands" GPUs later this year, FERMI was specced and finalised long before the 5800 GPUs were released. At this point Nvidia can only tweak clock speeds or cut down the cores; they can't go back to the drawing board with it, and they haven't. FERMI is set to run as fast as it was specced in Q4'09, or slower.

Remember, FERMI is causing Nvidia problems. This points to two possible outcomes: either FERMI is very fast but very expensive with very limited supply, or we will see a cut-down version.
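For anyone who wants to check the 4870X2 vs GTX295 point above, here is a rough sketch of the usual peak-GFLOPS arithmetic. The shader counts, clocks and FLOPs-per-clock figures are not from this thread; they are assumptions taken from the commonly quoted spec sheets (800 SPs per GPU at 750 MHz with 2 FLOPs per clock for the 4870X2, 240 SPs per GPU at a 1242 MHz shader clock with 3 FLOPs per clock for the GTX295). The helper name `peak_gflops` is made up for illustration.

```python
def peak_gflops(shaders, shader_clock_mhz, flops_per_clock):
    """Theoretical peak throughput: every shader issues its maximum FLOPs every clock."""
    return shaders * shader_clock_mhz * flops_per_clock / 1000.0

# Assumed spec-sheet numbers, not figures from this thread.
hd4870x2 = peak_gflops(shaders=2 * 800, shader_clock_mhz=750, flops_per_clock=2)
gtx295 = peak_gflops(shaders=2 * 240, shader_clock_mhz=1242, flops_per_clock=3)

print(f"4870X2 peak: {hd4870x2:.0f} GFLOPS")
print(f"GTX295 peak: {gtx295:.0f} GFLOPS")
print(f"4870X2 paper advantage: {(hd4870x2 / gtx295 - 1) * 100:.0f}%")
```

On paper the 4870X2 comes out well ahead, which is exactly why peak numbers alone say little about how the cards compare in games.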

- Joined

- Aug 24, 2007

All true, of course. Bear in mind I wrote that little piece before the 5970 was released and before numbers were even available. It scaled just a tiny bit better than I was expecting, but still only beat the GTX 295 by 40% (IIRC) at 2560x1600, and about 30% at 1920x1200. If Fermi makes 512 SPs, that's 113% more shaders than a GTX 285, and we already know a pair of those in SLI beat a 295. It's still 7% more shaders than a GTX 295, and there *should* be no dual-gpu penalty attached. The conclusion I drew (and still draw) from all that?

If Fermi makes clocks, it'll come close to, or beat, the 5970. If it doesn't, it's just gonna be a boring 295-on-a-card. I didn't base my conclusion on anything other than extrapolation from current-generation performance with a sprinkling of speculation. I've never claimed anything more.
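The shader-count percentages in this post can be reproduced with a few lines. This is only a sketch of the arithmetic: the 512 SP figure is the rumoured full Fermi part from the post, while 240 SPs for the GTX 285 and 2 x 240 for the GTX 295 are assumed from the usual spec sheets. The helper `pct_more` is a made-up name.

```python
def pct_more(new, old):
    """Percentage by which `new` exceeds `old`."""
    return (new / old - 1.0) * 100.0

FERMI_SPS = 512        # rumoured full part, per the post
GTX285_SPS = 240       # assumed from spec sheets
GTX295_SPS = 2 * 240   # assumed: two GPUs on one card

vs_285 = pct_more(FERMI_SPS, GTX285_SPS)
vs_295 = pct_more(FERMI_SPS, GTX295_SPS)

print(f"vs GTX 285: {vs_285:.0f}% more shaders")
print(f"vs GTX 295: {vs_295:.0f}% more shaders")
```

This only compares shader counts. It says nothing about clocks, architecture changes, or how well performance scales with extra shaders.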

- Joined

- Jul 3, 2006

- Location

- UK

I see your point, but there seem to be some inconsistencies with past trends. We all want great performance parts, but maybe you're just jumping the gun a bit?

Nvidia will be aiming to double performance of the original GTX280, not the GTX285. With this, also bear in mind the benchmarks of the 280 vs the 9800 GX2 - link. Just as SLI and crossfire do not lead to linear performance gains, the same also applies when increasing the number of shader cores.

The idea of a GTX480 beating a 5970 is just a bit optimistic. I wouldn't be surprised if the GTX480 ends up a little slower but noticeably cheaper than the 5970.

It will also be interesting to see how production of the GTX400s is affected, as Nvidia is dependent on TSMC as well.

- Joined

- Aug 24, 2007

In the case of the GX2 vs the 280, there are a few things to bear in mind. The GX2 has 256 SPs compared to 240 for the 280. The 280 also has no performance quirks related to being SLI on a card, which is the reason I even bothered upgrading from the GX2. WoW microstutter (and even regular stutter) went away completely. Those benches were also run on launch drivers, 177.34, and the whole GTX series has seen vast improvements since then, while the GX2 has basically stood still.

- Joined

- Jul 3, 2006

- Location

- UK

How much performance are you talking about here? I don't disagree with you, but AFAIK the GTX280 didn't really pull ahead of the GX2. The GX2 just reached EOL and was outdated in areas other than performance.

- Joined

- Aug 24, 2007

I'm just going by numbers I have seen in patch notes over time. The 177 to 192+ series drivers have had countless little "up to 18% improved performance in (whatever game)" notes sprinkled around. Nothing I can quantify, of course, but gains nonetheless. If I get bored, I'll install those 177s and do some benches, then switch back to the most recent drivers and run them again, just to see. Getting a little off topic here, though.

edit: You know, I'm going to do it now with Crysis and Far Cry 2. I'll start a thread for it.

- Thread Starter

- #29

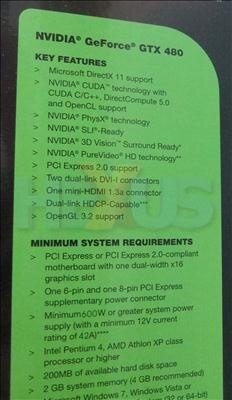

found a little more info and some possible box pics

Source

http://www.hexus.net/content/item.php?item=22654

- Joined

- Jan 17, 2008

- Location

- Overland Park, KS

Looks 'shopped

- Thread Starter

- #32

Here is a video of the box. I was able to pause and see 480... you be the judge.

http://tv.hexus.net/show/2010/03/CeBIT_2010_Tarinder_is_on_the_Fermi_hunt/

- Joined

- Aug 24, 2007

Looks 'shopped

How can ya tell? I'm not good at spotting that sort of thing.

- Joined

- Jan 6, 2005

How can ya tell? I'm not good at spotting that sort of thing.

I'd like to know too, because hexus has always been pretty decent for reviews.

- Joined

- Nov 26, 2005

- Location

- Concord, NC

I think the box is real, but it may have been made-up to get some exposure to that no-name company. I've never heard of the "Colorful" name before in the gfx card arena.

- Thread Starter

- #36

I really haven't seen them around either, but tomshardware reviewed a card made by them a little while ago, an 8600 GT HD.

http://www.tomshardware.com/reviews/graphics-cards-luxury-trimmings,1722-8.html

- Joined

- Dec 16, 2007

- Location

- Chicago, IL

I need to see how that thing does F@H.

Apparently Nvidia completely re-did the geometry shader engine and un-cored the clocks (not 100% sure). They said geometry performance would be 5-10x higher than GT200, and that is sayin something for molecular dynamics.

- Joined

- Dec 16, 2007

- Location

- Chicago, IL

Who do you think is going to be doing all this science in 20 years?

A generation of gamers will transcend into scientists and scholars. Soon science will be nothing but simulation and computation, as physical experiments become impossible, have already been done, or turn out to be unsolvable in finite time.

Can't wait.

- Joined

- Nov 1, 2001

- Location

- New Iberia, LA

But besides the science this gpu is supposed to be able to do, I want more info on power consumption and thermals. Since I pay the bills for my electricity and I also have to keep my gpu cool while running CUDA work, I want to know whether this gpu will be excessive on either account. If it's a power gulping nuclear furnace then I won't touch it with a 10 foot pole and the science will just have to do without me jumping on the Fermi bandwagon and deal with my last gen 260GTX.

I really can't wait until some benches on this are released. But I wonder if the lack of leaked benches on this gpu is a sign of problems like those the ATI R600 gpu saw when it was being developed: late, hot, difficult to manufacture, and relatively poor performance compared to the hype that was initially released. I guess the future will tell all.
