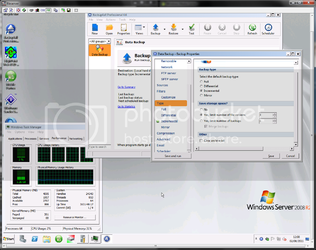

Surely this can't be normal:

Over 10 hours to complete a backup? And that is just the "deleting ZIP files" part, as nothing much had changed on the server yesterday. (I had to cancel the backup so I could open the settings page to take screenshots.)

One CPU is maxed out the whole time it is doing this (so I don't think the program is multi-threaded).

I'm thinking it could be because I have the "save storage space" option enabled?

Would I be better off choosing one of these options, or are they totally unrelated?

I am pretty much at a loss as to what some of these options really do.

The backup in question is the backup job for my 2TB data array, with all my music, docs, etc. on it.

I can't put up with it taking 11+ hours, but I don't want to disable a feature if that would stop me from getting back a deleted file.

i.e. if I delete a file from my data drive, I would at least want it to stay on the backup drive for a few days in case I needed to retrieve it or it was deleted in error, if you get what I mean.
