dominick32 said:

rather than use the Matrix implementation of a RAID 0 / RAID 1/5 array (which is Intel's great idea) on the same physical drives.

Just a small point ... the idea is far from new. Hardware-based cards (and people using hardware-based cards) have been doing this for years. For some reason it had just never been implemented in chipset software RAID before.

dominick32 said:

I do agree with the previous posters, based on my experience with RAIDed hard drives in various configurations and on different controllers. In terms of synthetic performance and sustained transfer rate, the Matrix array produced 30 MB/s more in HDTach and about 60 to 70 MB/s more in the upper portions of ATTO Diskbench than the exact same 2 x WD150 Raptors on NVRaid (plain RAID 0). That is a comparison of 2 x WD150 Raptors on my old NVRaid setup vs. 2 x WD150 Raptors on my Matrix setup. This was achieved simply by creating slices on the Matrix array (rather than partitions) and enabling write-back caching through Intel's control panel.

FWIW, ATTO is almost completely useless for measuring performance as soon as you have cache somewhere along the line.

With regard to the HDTach numbers, the key thing here is that you had the WB cache enabled for the Matrix RAID test. Although the WB cache should (in theory) have no effect on read performance, bing showed that it did in one of his other threads. I remember that earlier Matrix RAID implementations said something about it being for RAID5 only. I'm not sure what it says now (in the main window or the help file), but it obviously changes something for the RAID0 arrays.

One thing it could be doing is full-stripe reads when WB caching is enabled and non-full-stripe reads when it is disabled, which would make some sense. Doing this should hurt its performance in an IOMeter run, so it'd be interesting to see. Alternatively, HDTach could just be tickling the Matrix RAID driver slightly wrong when it's not in WB caching mode. Particularly with caching hardware cards, I've found that HDTach's measurement methods do not work well. h2bench generally provided a more consistent/logical number, though in some cases I had to use my own STR benchmark and tweak it for the specific card/RAID type to get it working well. I would do some more investigation, but the computer I was using has ascended to the Linux plane of existence, which kinda stuffs up the ability to use the Windows drivers ...
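To make the full-stripe idea concrete, here is a minimal sketch of how a read request maps onto stripe rows, and what "widening it to full stripes" would cost. This is a hypothetical model only: the stripe unit size, disk count and the expansion policy are illustrative assumptions, not a description of what Intel's Matrix driver actually does.

```python
# Hypothetical RAID0 model: stripe_unit and disks are assumed example values,
# and expand_to_full_stripes() is a guess at what a caching driver *might* do.

def stripe_rows(offset: int, length: int, stripe_unit: int, disks: int) -> range:
    """Return the stripe rows (one stripe unit on every disk) touched by a read."""
    row_bytes = stripe_unit * disks
    first = offset // row_bytes
    last = (offset + length - 1) // row_bytes
    return range(first, last + 1)


def is_full_stripe(offset: int, length: int, stripe_unit: int, disks: int) -> bool:
    """True if the read starts and ends exactly on full-stripe boundaries."""
    row_bytes = stripe_unit * disks
    return offset % row_bytes == 0 and length % row_bytes == 0


def expand_to_full_stripes(offset: int, length: int, stripe_unit: int, disks: int):
    """Widen a read so it covers whole stripe rows; returns (new_offset, new_length)."""
    row_bytes = stripe_unit * disks
    rows = stripe_rows(offset, length, stripe_unit, disks)
    start = rows.start * row_bytes
    end = rows.stop * row_bytes
    return start, end - start


# Example: 2 disks, 128 KiB stripe unit, a 64 KiB read at the start of the array.
print(expand_to_full_stripes(0, 64 * 1024, 128 * 1024, 2))  # (0, 262144)
```

For big sequential reads (like an STR sweep) the widening is nearly free, but a 64 KiB random read turns into a 256 KiB read in this model, which is why an IOMeter run would be expected to show the penalty.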

dominick32 said:

1. Is a standard "plain raid 0" 20GB boot partition the same as a "Matrix Raid 0" boot slice?

You probably won't be able to measure this using vanilla HDTach or most other disk benchmarking software, since they tend to operate at the device level. I've written a tool that makes partitions appear as devices (required for testing Windows' RAID) that I can clean up for public consumption if you want. It'd also be good to get a comparison with Windows' stripe volumes.

dominick32 said:

2. Does Intel's Matrix write-back caching produce any real-world performance gains over "plain raid 0", disregarding the proven synthetic benchmarks that I posted in HDTach and ATTO?

I also think some trials need to be done using other STR benchmarking tools. WinBench and h2bench use different methods from HDTach and often get different results.
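As a rough illustration of why tools disagree, here is a minimal sketch of the simplest possible STR measurement: read a file front to back in fixed-size blocks and divide bytes by elapsed time. It is not how HDTach, WinBench or h2bench work; the 1 MiB block size and the file-based (rather than raw-device) approach are assumptions made for the example.

```python
import os
import time


def sequential_read_mb_s(path: str, block_size: int = 1 << 20) -> float:
    """Read `path` sequentially in `block_size` chunks and return average MB/s.

    MB here is decimal (1,000,000 bytes). Caveat: this goes through the OS
    file cache, so a recently written or read file reports cache speed,
    not disk speed (the same trap that makes ATTO misleading with a cache).
    """
    total = 0
    start = time.perf_counter()
    # buffering=0 avoids Python's own buffer layer; the OS cache still applies.
    with open(path, "rb", buffering=0) as f:
        while True:
            chunk = f.read(block_size)
            if not chunk:  # empty bytes object means end of file
                break
            total += len(chunk)
    elapsed = time.perf_counter() - start
    return (total / 1_000_000) / elapsed if elapsed > 0 else float("inf")
```

Changing the block size, reading a raw device instead of a file, or sampling zones across the disk (as HDTach does) will each move the number, which is part of why the different tools report different STRs for the same array.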

dominick32 said:

Finally, the most important question:

3. Are there any gains or benefits to running the Matrix with a dual-slice RAID0/RAID0 if you do not plan on using the RAID0/RAID1-or-5 implementation that Intel designed the Matrix for?

Indeed.

krag said:

It's pointless to argue with you any further. I have better things to do with my time.

Like making other vague posts with benchmark numbers coming from nonstandard places, resulting in impossibly high results? OK, go have fun ...

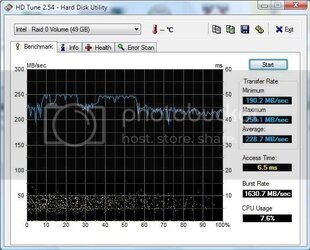

Seriously though, if you do make a post where you use a nonstandard method (or if the "standard" method is ambiguous), then say exactly what you did and what number you are reporting. The "standard method" for getting a MB/sec number from HDTach is to use the average sustained read speed. Since a 215 MB/sec average (or even maximum) sustained read speed is impossible to get with two Raptors, you obviously did not report these numbers.

krag said:

edit... it is not impossible for Raptors to put out more than 85 MB/s... that's why we use RAID 0!

Sorry, I forgot to expand the start of that paragraph. It is impossible for a *single* Raptor drive to have an STR of more than 85 MB/sec (give or take a bit for the pedants who precisely measure it to be 86.41532 MB/sec or whatever). Using RAID0 does not increase the performance of the component drives. Since the STR of two drives cannot be more than the sum of the STRs of the two individual drives, the maximum STR for two Raptors (no matter how they are hooked together) is 170 MB/sec.
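The ceiling argument above is just a sum, but writing it out makes the check explicit. A minimal sketch, using the approximate 85 MB/sec per-Raptor figure from this post; the helper names are made up for the example.

```python
# Approximate single-drive STR for a WD150 Raptor, as quoted in this thread.
RAPTOR_STR_MB_S = 85.0


def max_raid0_str(per_drive_mb_s: list) -> float:
    """Upper bound on a RAID0 array's STR: the sum of the member drives' STRs.

    Real arrays land at or below this because of controller/driver overhead;
    striping never makes an individual drive faster.
    """
    return sum(per_drive_mb_s)


def is_plausible_str(reported_mb_s: float, per_drive_mb_s: list) -> bool:
    """A reported sustained read speed above the ceiling cannot come from the platters."""
    return reported_mb_s <= max_raid0_str(per_drive_mb_s)


two_raptors = [RAPTOR_STR_MB_S, RAPTOR_STR_MB_S]
print(max_raid0_str(two_raptors))          # 170.0
print(is_plausible_str(215, two_raptors))  # False
```

So a 215 MB/sec "sustained" figure from two Raptors has to be coming from cache or from a number other than the average sustained read speed.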

However, I did not measure my access times with HDTUNE; perhaps HDTUNE produces faster synthetic access times???
