I'm contemplating a mild overclock (4.4 GHz, maybe) on my build. I've been on a stock cooler for the last few years, so obviously I'd be looking at buying something simple like a 212 EVO, but even that's around $30 US, plus maybe more for case modifications or fan installations. So there is really more than one question being asked here.

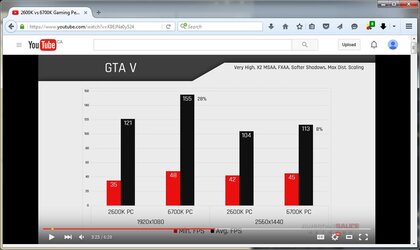

Beyond what to expect from 4.4 GHz on my current board, there is also the question of whether a 10% overclock is worth it for the purpose of trying to break a CPU bottleneck in newer games.

I recently started Rise of the Tomb Raider, and for the first time I've found a game that runs all 8 CPU cores at 60%-90% and bottlenecks my GTX 980 Ti, holding it to 60% usage in some areas. Whether I run at 1080p or at 3440x1440 with a 1.20 DSR factor, I get the same 30-35 fps. Before this, I had never experienced a game that would bottleneck the GPU below 60 fps with all the eye candy turned on. This is more than a mild bottleneck.

So, I'm stuck wondering whether I should expect more than a 10% gain in FPS from a 10% CPU overclock. If the answer is no, then clearly it's not worth buying gear for a mild overclock. I know nobody wants to guess what kind of performance gains this will yield, so I'll simply ask this: what would you do?
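To put rough numbers on the question, here is a minimal sketch of the best case. It assumes the game is fully CPU-bound in those areas and that frame rate scales at most linearly with CPU clock, which is an assumption and not something I've measured. The function name `best_case_fps` is just made up for illustration.

```python
def best_case_fps(current_fps: float, overclock_pct: float) -> float:
    """Upper bound on FPS, ASSUMING the game is fully CPU-bound
    and frame rate scales linearly with CPU clock speed."""
    return current_fps * (1 + overclock_pct / 100)


# The 30-35 fps range I see in the CPU-bound areas, with a 10% overclock.
for fps in (30, 35):
    print(f"{fps} fps -> at most {best_case_fps(fps, 10):.1f} fps")
```

Under that assumption, a 10% overclock would take 30-35 fps to roughly 33-38.5 fps at best, which is still well short of 60 fps.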
