Intel, as usual, compares its default TDP (not turbo) to AMD's TDP. The problem is that the Ryzen 7 7840U is rated up to 30W, while the Core Ultra 7 165H goes up to 115W. Even if we count AMD's CPU and iGPU power separately (as was done, for some reason, with earlier chips), the total is still under 60W versus 115W for Intel. With its power limit unlocked, the 780M holds its maximum clocks much longer and performs better. This is why some last-generation laptops and mini PCs offer 100W TDP options, even though the CPUs have a ~60W limit.

Intel shows integrated Arc GPU for Meteor Lake is 10% faster than AMD Radeon 780M, across 33 games tested

Also 54% faster than previous-gen Core i7-1370P's Iris Xe Graphics (www.tomshardware.com)

Intel says it currently beats the 780M and, yes, as usual, the competitor's next generation will beat it.

I'd like to see fps numbers instead of marketing.

I'm not saying that new Intel iGPUs are bad or worse than AMD's. My general problem is with comparing CPUs/GPUs when they're heavily limited by the computer's cooling or power design.

The AMD 780M has been available in laptops for some months. If I'm right, Intel Core Ultra isn't available in anything yet. The 8000-series APUs will premiere soon too, but as far as I know, they will still use the 780M.

I remember when Intel tried to prove its IGP was for gamers by showing a video recorded on discrete graphics. It wasn't even funny: anyone who knew how that IGP performed also knew it couldn't possibly run that fast. It was just sad that a company like Intel would show fakes and attach official statements to them. Intel has improved hugely since then, but its IGPs are still far from what modern games need. AMD has taken its IGPs into handheld consoles, where they work well thanks to much better performance at low TDP. Intel IGPs are barely ever used for gaming, at least for anything more demanding than The Sims. Steam still shows Intel IGPs among the most popular graphics, but that's because simple games are so popular. That raises the question of what counts as a gamer. When we say gaming, we generally mean more demanding titles and AAA games.

I don't follow that argument. If a 3060 is good enough to game, why not a 4060? A problem I see on forums is some posters: they often talk about what should have been, and they aren't actually playing games. The 3060 is at least comparable to current-gen consoles and will give a great experience in any modern game with appropriate settings. There's a bit of a PCMR mentality: consoles run closer to low presets, yet some PC gamers resist considering anything below high. If an iGPU is good enough, then something twice as fast is still good enough.

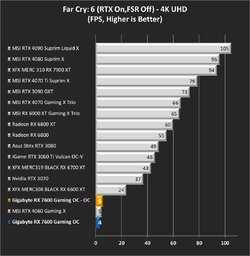

I didn't say the RTX4060 isn't good for gaming. It's just not much better than the RTX3060 for what users actually need, and it stutters in titles that use more VRAM where, for some reason, RTX3000-series cards do not (even with the same amount of VRAM). I reviewed the RTX4060 8GB and RX7600 8GB, and both were pretty bad at anything above 1080p. They're designed for 1080p, and neither manufacturer hides that, so it's no problem; both work well at 1080p. However, considering prices and what these cards offer, people much more often look at a secondhand RTX3060 12GB, an RTX3060Ti/3070, or an Xbox/PS5. Many consider skipping the RTX4000/RX7000 series entirely. Even on OCF we've had threads about it, though mostly about the next tier up, mainly questions about the RTX3070/4070 or RX7800/6800XT.

Most people don't buy just a graphics card, so an RTX4050 ends up in a $1k PC, while for half the price you get a console that gives a better experience. Those upgrading older computers don't consider the RTX4050 an upgrade at all. The RTX4060/RX7600, with their x8 PCIe interface, are also not the best options for older computers, since older boards typically run PCIe 3.0, where an x8 link has half the bandwidth of x16.

Sorry, that was a quote from the video. I thought you'd know when it's me by now; I'm never oh so classy in my writing.