- Joined

- Apr 9, 2006

- Location

- Falcon Heights, MN

So I have three WD Black 1.5 TB drives in a RAID 5 hooked up to my ASRock Extreme4, which has a Z77 chipset. They are all SATA3 drives but are plugged into the SATA2 ports on the board. I have an SSD populating one of the SATA3 ports (on the Z77).

A couple of issues right now that I can't seem to remedy or find answers to.

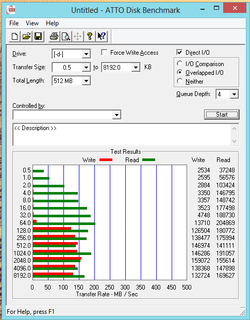

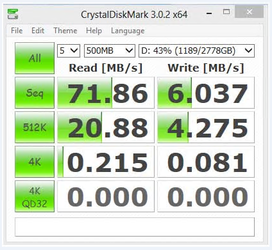

1) The RAID 5 seems slow. I have attached an ATTO benchmark of it, and it looks really weird to me. Has anyone seen something like this before? Is anyone running the same setup as me but getting much better speeds? At this point I am thinking this must be related to stripe size (which is 64 KB on this RAID right now).

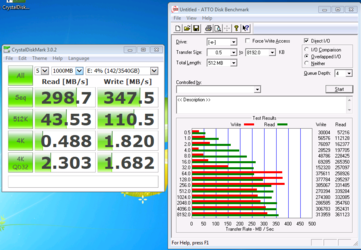

2) When you set the six SATA ports (2x SATA3, 4x SATA2) on the board to RAID, it apparently doesn't let any other connected drive run in AHCI mode? At least that is how it appears. I have my SSD plugged into one of the SATA3 ports and it just will not do AHCI. Can anyone else who has this board confirm whether or not this is your experience as well?
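
On the stripe-size hunch in 1), here is a rough sketch of the arithmetic, not a diagnosis. It assumes the 64 KB setting is the per-drive strip, so a full stripe on a three-drive RAID 5 carries two strips of data plus one of parity. An aligned write that covers whole stripes lets the controller compute parity from the new data alone; anything smaller generally means reading old data or parity first, which may or may not be what the ATTO graph is showing. The function names are made up for illustration.

```python
def full_stripe_data_kib(num_drives: int, strip_kib: int) -> int:
    """Data held by one full RAID 5 stripe: one strip per drive, minus one for parity."""
    if num_drives < 3:
        raise ValueError("RAID 5 needs at least 3 drives")
    return (num_drives - 1) * strip_kib


def covers_full_stripes(transfer_kib: int, num_drives: int, strip_kib: int) -> bool:
    """True if an aligned transfer of this size is a whole number of full stripes."""
    width = full_stripe_data_kib(num_drives, strip_kib)
    return transfer_kib >= width and transfer_kib % width == 0


if __name__ == "__main__":
    drives, strip = 3, 64  # assumption: 64 KB is the per-drive strip size
    print("full stripe data width (KiB):", full_stripe_data_kib(drives, strip))
    # A few transfer sizes of the kind a benchmark sweep steps through.
    for size in (4, 64, 128, 256, 1024):
        kind = "full-stripe" if covers_full_stripes(size, drives, strip) else "partial-stripe"
        print(f"{size} KiB transfer -> {kind} write")
```

If the per-drive assumption holds, comparing where the write curve changes shape against the full-stripe width would be one way to check whether stripe size is actually the culprit.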
